Zero-Shot, One-Shot, Few-Shot, More?

The "monkey see, monkey do" way to prompt LLMs and chatbots.

Happy Thursday, netizens!

Today, we’ll take our prompting journey a step further.

If you’ve followed my recent posts, you should feel pretty comfortable working with chatbots. You dive in using a Minimum Viable Prompt instead of overengineering things from the get-go.

You also know that you can get much better responses by getting chatbots to ask you questions before answering.

But what if you already know exactly what you’re after and want an LLM to follow a specific template?

That, friends, is where [insert-number-here]-shot prompting comes into play.

What’s with all these shots?

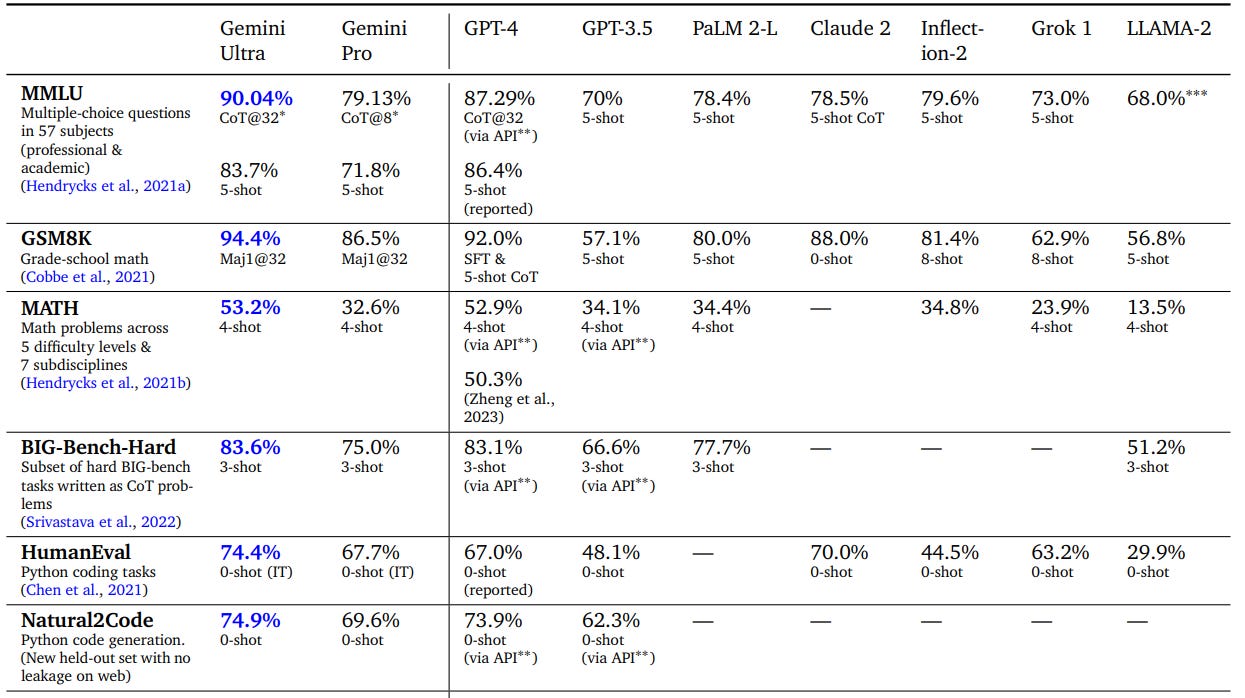

Published LLM benchmark scores often note how the model was prompted.

Take the following table for the Google Gemini family:

Some of the above refer to “CoT”—short for “Chain of Thought” prompting—which I might cover separately.

But in most cases, you’ll see a number followed by “-shot”.

This tells you the number of examples (or “shots”) the model was given when asked to answer.

Broadly speaking, there are three “shot”-related ways to prompt a model or a chatbot:

Zero-shot

One-shot

Few-shot

Let’s take a look at what those mean and work through a few examples.


Zero-shot prompt (or “Do as you want”)

This is how most of us are likely to interact with a chatbot for the first time.

We simply ask it a question or give it an instruction without showing it how we want the response to look.

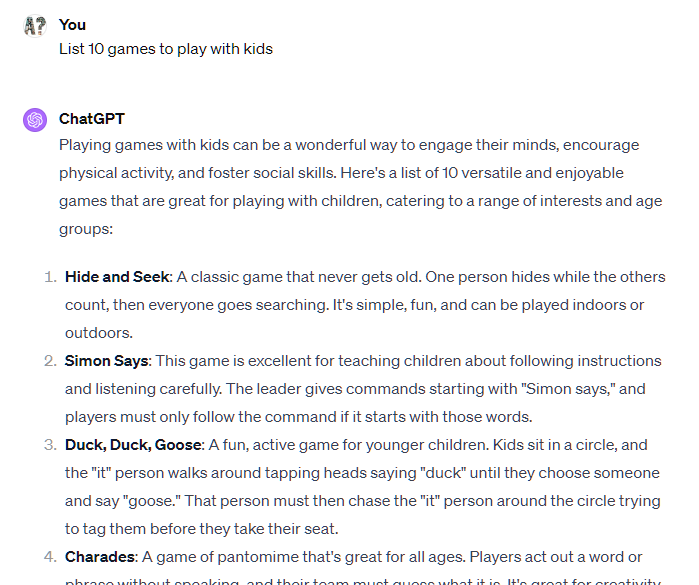

For instance, if I want to get ideas for games to play with kids, I might ask:

List 10 games to play with kids

Note that I didn’t mention anything about the types of games I want or the format for my list. I’m letting the model decide how to respond based on its training data.

In other words, I’ve given the model zero examples to work from, hence the “zero-shot” name.

Here’s what ChatGPT comes back with:

Letting the model take the wheel is perfectly fine for most everyday use cases. You get an answer quickly, without having to worry about detailed instructions.

But there are limitations to this zero-shot approach:

It’s not consistent. Each time you run the prompt, you’re likely to get a different response style and format.

It might be less relevant. Because you’re letting the chatbot decide what to focus on, it may come up with answers that aren’t what you’re after.

It’s more model-dependent. Since each LLM has its own behavior and underlying dataset, you might get differently structured responses from ChatGPT, Microsoft Copilot, Bard, and so on.¹

That brings us to one-shot prompting.

One-shot prompt (or “Do as I show”)

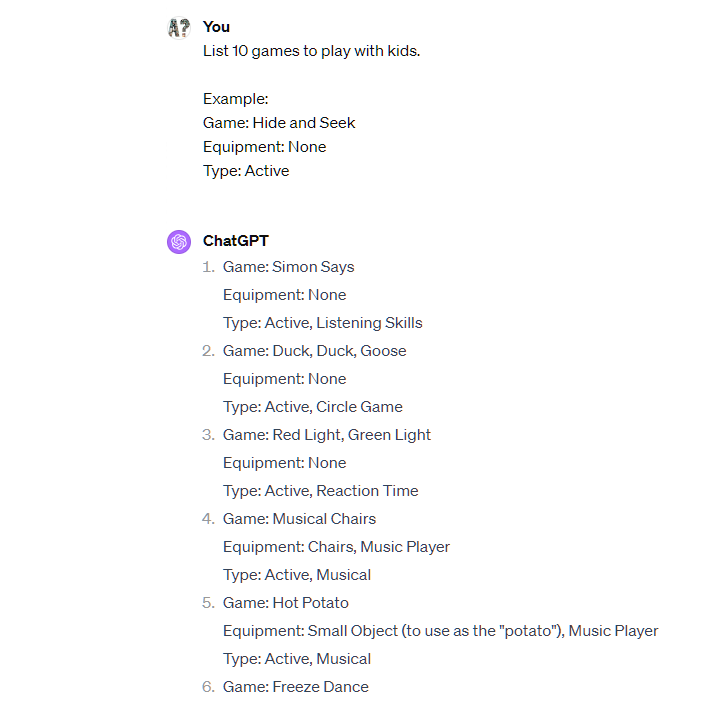

To help nudge a chatbot in a more predictable direction, you can use a one-shot prompt.

That’s when you offer the chatbot a template to follow by adding an example of the type of output you’re looking for.

Let’s return to our “kid games” prompt and pretend that we’re after a more structured list that specifies the type of game and any equipment needed.

We add an example to our initial request, turning it into a one-shot prompt, like so:

List 10 games to play with kids.

Example:

Game: Hide and Seek

Equipment: None

Type: Active

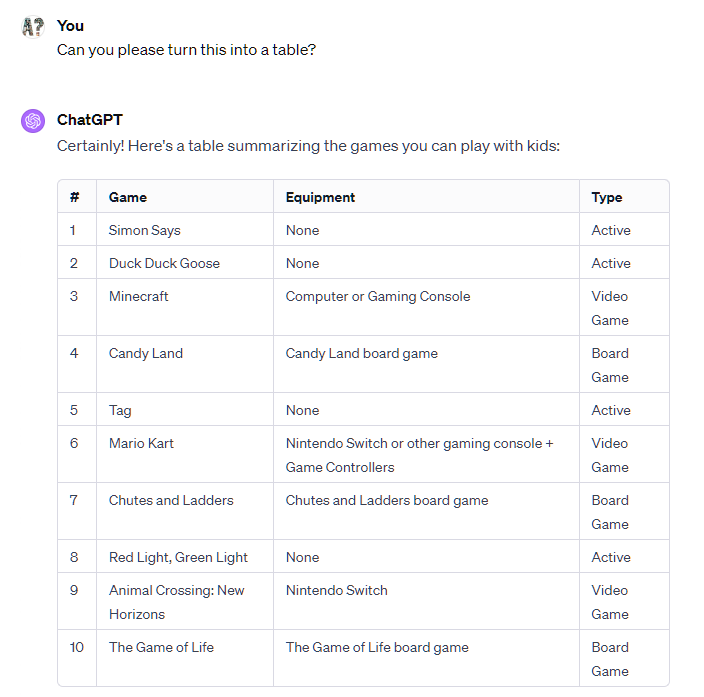

ChatGPT will now stick to our example when returning its answers:

As you can see, ChatGPT now follows our template and fills in the blanks. As a result, we get a more structured and categorized list.
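If you ever assemble prompts in code instead of typing them into a chat window, a one-shot prompt is just the instruction plus one formatted example. Here’s a minimal Python sketch that rebuilds the prompt above. The function name `build_one_shot_prompt` and the dictionary format for the example are my own illustrative choices, not part of any chatbot’s API:

```python
# Hypothetical helper for illustration; not part of any LLM library.
def build_one_shot_prompt(instruction: str, example: dict[str, str]) -> str:
    """Append a single worked example (the "shot") to an instruction."""
    # Each field becomes a "Key: value" line, mirroring the template we want back.
    example_lines = "\n".join(f"{key}: {value}" for key, value in example.items())
    return f"{instruction}\n\nExample:\n{example_lines}"


prompt = build_one_shot_prompt(
    "List 10 games to play with kids.",
    {"Game": "Hide and Seek", "Equipment": "None", "Type": "Active"},
)
print(prompt)
```

Sending `prompt` to a model is deliberately left out, since that step depends on which chatbot or API you use.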

But one-shot prompting has its own downsides.

First, your one-shot example might inadvertently steer the model toward a narrow subset of answers. If you’re looking for baby names, for instance, and your one-shot example is “Alan,” the model might assume you only want boy names, short names, or names starting with “A.”

Our example: Notice how every game on the list involves moving around and using your body. But what about board games or video games? Well, our one-shot example had an active game in it, so maybe that’s what set ChatGPT down the “active” path.

Second, a single example might not be enough to fully showcase the format you’re after.

Our example: If we want “Active” to be a clean, standalone category, we might not want ChatGPT to add extra descriptors like “Musical” or “Passing,” as it did. (Maybe we want to create a table of games and categories, so having such long strings isn’t helpful.)

That’s why you might want to upgrade your one-shot prompt to a few-shot prompt.

Few-shot prompt (or “Do as I want”)

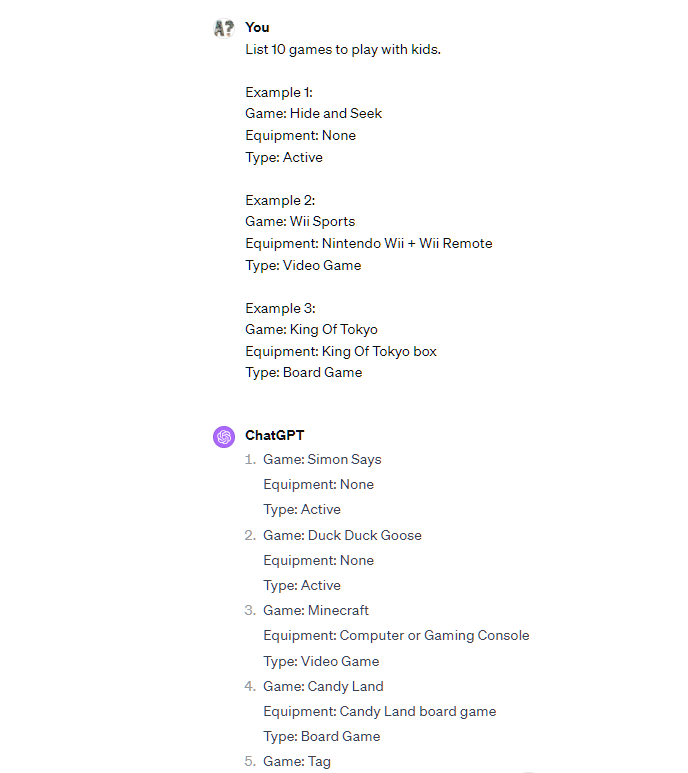

Few-shot prompting works the same way as one-shot prompting but feeds additional examples to the model.

Few-shot prompts typically use between two and five examples, but there’s no hard rule here. (Nothing is stopping you from using 127 examples, you absolute maniac.)
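Since the only thing that changes between zero-, one-, and few-shot prompts is the number of examples, one helper can cover all three. This rough Python sketch assumes examples are stored as dictionaries and uses a made-up helper name, `build_few_shot_prompt`. It numbers each example as “Example 1:”, “Example 2:”, and so on, and the sample examples are the kid games used in this post:

```python
# Hypothetical helper for illustration; not part of any LLM library.
def build_few_shot_prompt(instruction: str, examples: list[dict[str, str]]) -> str:
    """Build a prompt with any number of numbered examples.

    Zero examples gives a zero-shot prompt, one gives a one-shot prompt,
    and two or more give a few-shot prompt.
    """
    parts = [instruction]
    for number, example in enumerate(examples, start=1):
        fields = "\n".join(f"{key}: {value}" for key, value in example.items())
        parts.append(f"Example {number}:\n{fields}")
    # Blank lines between blocks keep each example visually separate.
    return "\n\n".join(parts)


examples = [
    {"Game": "Hide and Seek", "Equipment": "None", "Type": "Active"},
    {"Game": "Wii Sports", "Equipment": "Nintendo Wii + Wii Remote", "Type": "Video Game"},
    {"Game": "King Of Tokyo", "Equipment": "King Of Tokyo box", "Type": "Board Game"},
]

few_shot_prompt = build_few_shot_prompt("List 10 games to play with kids.", examples)
print(few_shot_prompt)
```

Passing an empty list returns the bare instruction (a zero-shot prompt), and nothing in the function caps how many examples you pass in.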

Let’s return to our case, where we want to nudge ChatGPT into considering board games and video games while cleaning up our category list.

Here’s what our few-shot prompt might look like:

List 10 games to play with kids.

Example 1:

Game: Hide and Seek

Equipment: None

Type: Active

Example 2:

Game: Wii Sports

Equipment: Nintendo Wii + Wii Remote

Type: Video Game

Example 3:

Game: King Of Tokyo

Equipment: King Of Tokyo box

Type: Board Game

This broadens the range of responses while streamlining the categories:

Pro tip: Where relevant, choose examples at the opposite ends of the response spectrum to help the chatbot get a better sense of the range of acceptable answers. For instance, if you’re asking an LLM to rate something, include an example of the worst possible rating (e.g. “Awful”) as well as the best one (e.g. “Outstanding”).
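To make the pro tip concrete: if you have a pool of candidate examples with known ratings, you can pick the worst- and best-rated ones automatically. In this Python sketch, the five-step rating scale, the review texts, and the `pick_extreme_examples` function are all hypothetical:

```python
# Hypothetical rating scale, ordered from worst to best.
SCALE = ["Awful", "Poor", "Okay", "Good", "Outstanding"]


def pick_extreme_examples(candidates: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return the lowest- and highest-rated candidates, in that order.

    Each candidate is a (text, rating) pair, where rating is a label from SCALE.
    """
    if len(candidates) < 2:
        raise ValueError("Need at least two candidates to show a range.")
    # Sort by each label's position on the scale, so "worst" and "best" are well defined.
    ranked = sorted(candidates, key=lambda pair: SCALE.index(pair[1]))
    return [ranked[0], ranked[-1]]


# Hypothetical pool of rated game reviews to draw examples from.
pool = [
    ("Fun, but the rules took a while to explain.", "Good"),
    ("The kids were bored within minutes.", "Awful"),
    ("Everyone begged to play again!", "Outstanding"),
    ("Fine for a rainy afternoon.", "Okay"),
]

# Format the two extremes as numbered examples for a rating prompt.
for number, (text, rating) in enumerate(pick_extreme_examples(pool), start=1):
    print(f"Example {number}:\nReview: {text}\nRating: {rating}\n")
```

If your ratings are numbers rather than labels, you could sort on the number directly and skip the `SCALE` lookup.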

In our “kid games” case, the few-shot prompt produced nicely structured responses that fit into a neat table:

That’s the power of few-shot prompting for steering and organizing an LLM’s output.
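If you plan to drop the results into a table, a few lines of code can turn a reply that follows the template into rows. This minimal Python sketch assumes the model’s reply uses exactly the “Game: / Equipment: / Type:” lines from our prompt. The sample reply, the function names, and the allowed category list are hypothetical. It also flags any “Type” value outside the expected categories, which is one way to catch extra descriptors like “Musical” slipping in:

```python
# Column order for the table; matches the template fields in our prompt.
FIELDS = ("Game", "Equipment", "Type")
# Hypothetical set of categories we consider clean and standalone.
ALLOWED_TYPES = {"Active", "Video Game", "Board Game"}


def parse_game_list(response: str) -> list[dict[str, str]]:
    """Group "Key: value" lines into one record per game.

    Assumes the reply follows the template: a "Game:" line starts a new
    record, followed by its "Equipment:" and "Type:" lines.
    """
    records: list[dict[str, str]] = []
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or key not in FIELDS:
            continue  # skip blank lines and anything outside the template
        if key == "Game":
            records.append({})
        if records:
            records[-1][key] = value.strip()
    return records


def to_markdown_table(records: list[dict[str, str]]) -> str:
    """Render records as a Markdown table, leaving missing fields blank."""
    header = "| " + " | ".join(FIELDS) + " |"
    divider = "|" + "---|" * len(FIELDS)
    rows = ["| " + " | ".join(r.get(f, "") for f in FIELDS) + " |" for r in records]
    return "\n".join([header, divider, *rows])


# Hypothetical model reply in the few-shot format.
sample_reply = """Game: Tag
Equipment: None
Type: Active

Game: Candy Land
Equipment: Candy Land box
Type: Board Game, Racing"""

games = parse_game_list(sample_reply)
print(to_markdown_table(games))

# Flag rows whose category isn't one of the clean, standalone labels.
for game in games:
    if game.get("Type") not in ALLOWED_TYPES:
        print(f"Unexpected type for {game['Game']}: {game.get('Type')}")
```

Real replies often drift from the template (numbered lines, bold labels, and so on), so treat this parser as a starting point rather than something robust.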

Over to you…

If you were unsure about zero-, one-, and few-shot prompting, I hope this helped!

Do you already use one-shot or few-shot prompting? If so, I’d love to see real-world examples of successfully steering large language models using these approaches.

If you have any questions or additions, I’m happy to hear from you.

Leave a comment or shoot me an email at whytryai@substack.com.

¹ Of course, this could also be an advantage if you’re looking for a broad range of perspectives on a topic.