It Takes Two to Tango. Why You Should Use GPT-4o and o1 in Tandem.

Get the best of both worlds by using GPT-4o to write briefs for o1.

Two weeks ago, a widely circulated article by Ben Hylak was published, which I encourage you to read in full.

Ben is a self-proclaimed convert from an o1 skeptic to an o1 believer¹. What it took was a simple mindset shift, captured in this quote of his:

“I was using o1 like a chat model — but o1 is not a chat model.”

After reading the article, I started digging into how o1 and similar reasoning models differ from traditional LLM-powered chatbots. Eventually, I came to realize something: it makes a crapton of sense to use standard LLMs in tandem with reasoning models.

So follow me as I make the case for why and how you can use them together.

Note: While I focus on GPT-4o and o1, my findings and suggestions are largely applicable to other combinations of LLMs and reasoning models (e.g. DeepSeek-R1).

Forget what you know about prompting LLMs

Even though we can access the two via the same chat interface, reasoning models like o1 are different beasts compared to chat models like GPT-4o.

This means that many of the established prompting practices might not perform equally well with reasoning models.

So far, what we know about working with reasoning models comes primarily from the AI labs behind them and early adopters like Ben. If you want a solid intro to working with o1, I highly recommend this free 1-hour video course by OpenAI’s Colin Jarvis:

Based on these early insights, here are three “classic” LLM habits you might want to unlearn with reasoning models:

1. Don’t use chain-of-thought prompting

With older LLMs, chain-of-thought (CoT) prompting was a reliable way to make them think through harder problems and arrive at better results. Here’s my primer:

But with models like o1, CoT prompting might be unnecessary at best and detrimental at worst.

o1 is built to reason through hard questions out of the box. As such, asking it to “think step by step” or handing it your own reasoning framework might only sidetrack it and increase the thinking time without measurable improvements.
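As a rough sketch of what unlearning this habit could look like in practice, the helper below adds a step-by-step nudge only for chat models and passes the task through untouched for reasoning models. The function name, the `REASONING_MODELS` set, and the cue wording are illustrative assumptions, not anything prescribed by OpenAI or DeepSeek.

```python
# Hypothetical helper: the model names and cue wording are illustrative assumptions.
REASONING_MODELS = {"o1", "o1-mini", "deepseek-r1"}

COT_CUE = "Let's think step by step."


def build_prompt(task: str, model: str) -> str:
    """Return the prompt text to send, adding a CoT cue only for chat models."""
    task = task.strip()
    if model.lower() in REASONING_MODELS:
        # Reasoning models already deliberate internally, so send the task as-is.
        return task
    # Older chat models may benefit from an explicit step-by-step nudge.
    return f"{task}\n\n{COT_CUE}"


if __name__ == "__main__":
    question = "A train leaves at 9:40 and arrives at 13:05. How long is the trip?"
    print(build_prompt(question, "gpt-4o"))
    print("---")
    print(build_prompt(question, "o1"))
```

The point is less the code than the branch: the same task gets a different wrapper depending on which kind of model receives it.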

2. …and maybe also few-shot prompting?

Few-shot prompting is when you give an LLM a few examples of the responses you want it to produce. My primer here:

When it comes to reasoning models, the guidance on this front is a bit less clear-cut.

On the one hand, DeepSeek’s technical paper on DeepSeek-R1 unambiguously states (emphasis mine):

When evaluating DeepSeek-R1, we observe that it is sensitive to prompts. Few-shot prompting consistently degrades its performance. Therefore, we recommend users directly describe the problem and specify the output format using a zero-shot setting for optimal results.

On the other hand, the above “Reasoning with o1” course by OpenAI mentions this key principle (emphasis mine):

Rather than using excessive explanation, give a contextual example to give the model understanding of the broad domain of your task.

Curiously, while the other prompting principles from the course are also mentioned on OpenAI’s “Reasoning models” documentation page, the above one about giving examples is missing.

For what it’s worth, my interpretation after completing the course and lots of background reading is this:

Examples that help the model better understand what your goal is = okay.

Examples that tell the model how to think = bad idea.

3. Reduce back-and-forth chatter

My typical advice with AI models has always been to start simple and iterate from there. We have all gotten used to this kind of dialogue with LLMs. They are called chatbots, after all.

Conversely, reasoning models are intended to take their time, work through a task, and arrive at the answer independently. You should aim to give them everything they need to do this upfront.

That’s the gist of Ben’s argument, and it’s supported by anecdotal examples from people responding to this Substack Note of mine (with exceptions):

Beyond this, there are practical limitations in trying to “chat” with o1:

Cost: ChatGPT Plus users get only 50 weekly messages with o1. Want unlimited access? You’ll have to pay $200 / month for the ChatGPT Pro plan. As for API pricing, o1 output tokens are 12 times more expensive than GPT-4o’s.

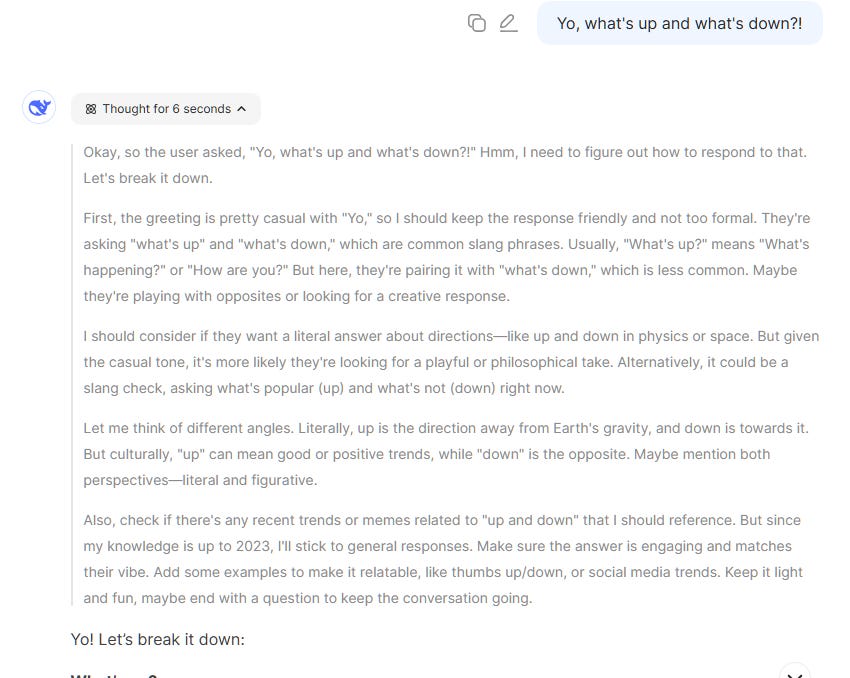

Latency: Reasoning models take time to think deeply about every request, no matter how simple. Here’s DeepSeek-R1 channeling its inner anxious teenager:

This makes them impractical for snappy casual chats.

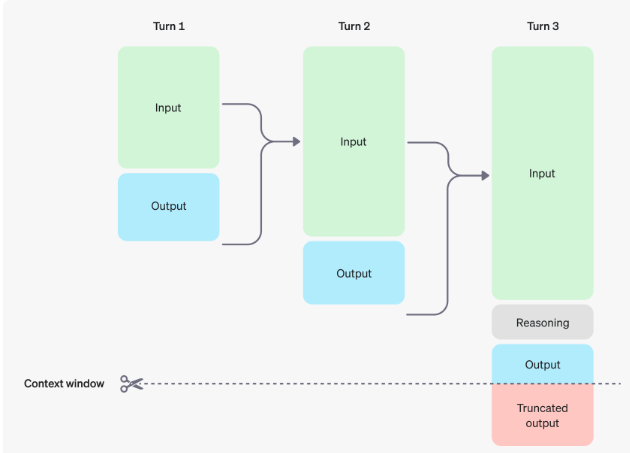

Context window: Reasoning tokens are still tokens. They take up space in the context window. Even though they’re discarded between chat turns, prolonged conversations may force o1 into truncating the visible output:

Source: OpenAI
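To put rough numbers on the cost and context-window points, here is a back-of-the-envelope sketch. Every token count, price, and window size in it is a made-up placeholder; only the 12x output-price ratio comes from the text above. It also assumes hidden reasoning tokens are billed at the output rate, which is worth checking against current pricing pages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    input_tokens: int      # prompt plus any history sent with this turn
    reasoning_tokens: int  # hidden thinking tokens (0 for a chat model)
    output_tokens: int     # visible answer


def conversation_cost(turns, input_price, output_price):
    """Dollar cost of a conversation, with prices given per 1M tokens.

    Assumption: reasoning tokens are billed at the output-token rate.
    """
    cost = 0.0
    for t in turns:
        cost += t.input_tokens * input_price / 1_000_000
        cost += (t.reasoning_tokens + t.output_tokens) * output_price / 1_000_000
    return cost


def visible_output_budget(window, input_tokens, reasoning_tokens):
    """Tokens left for the visible answer once input and reasoning are counted."""
    return max(0, window - input_tokens - reasoning_tokens)


# Placeholder prices per 1M tokens. Only the 12x output ratio comes from the text.
CHAT_IN, CHAT_OUT = 0.25, 1.00
REASONING_IN, REASONING_OUT = 1.50, 12.00

# Scenario A: twelve back-and-forth turns with the reasoning model.
chatty = [Turn(2_000, 3_000, 1_000)] * 12

# Scenario B: twelve cheap drafting turns with the chat model, then one briefed request.
drafting = [Turn(2_000, 0, 500)] * 12
briefed = [Turn(4_000, 6_000, 2_000)]

cost_a = conversation_cost(chatty, REASONING_IN, REASONING_OUT)
cost_b = (conversation_cost(drafting, CHAT_IN, CHAT_OUT)
          + conversation_cost(briefed, REASONING_IN, REASONING_OUT))

if __name__ == "__main__":
    print(f"Chatting with the reasoning model: ${cost_a:.3f}")
    print(f"Chat-model drafting + one brief:   ${cost_b:.3f}")
    print("Output budget left:", visible_output_budget(128_000, 100_000, 30_000))
```

With these invented inputs, the briefed route comes out several times cheaper, but the result depends entirely on the numbers you plug in, so treat it as a way to reason about your own usage and not as a benchmark.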

In short: don’t tell o1 how to do its job. Explain your goals well, and then get the hell out of the way, Karen.

So how should you prompt reasoning models?

Right off the bat, I gotta make two things clear:

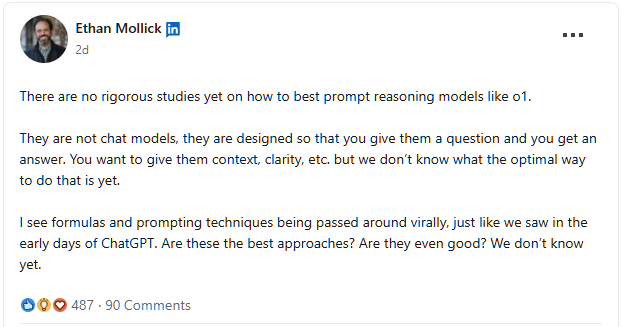

Yes, we do know that reasoning models are different, but…

No, we don’t yet know the “one best way” to prompt and work with them.

Ethan Mollick captured this perfectly:

As such, take any “Ultimate o1 Prompt Blueprint” you see with a handful of salt.

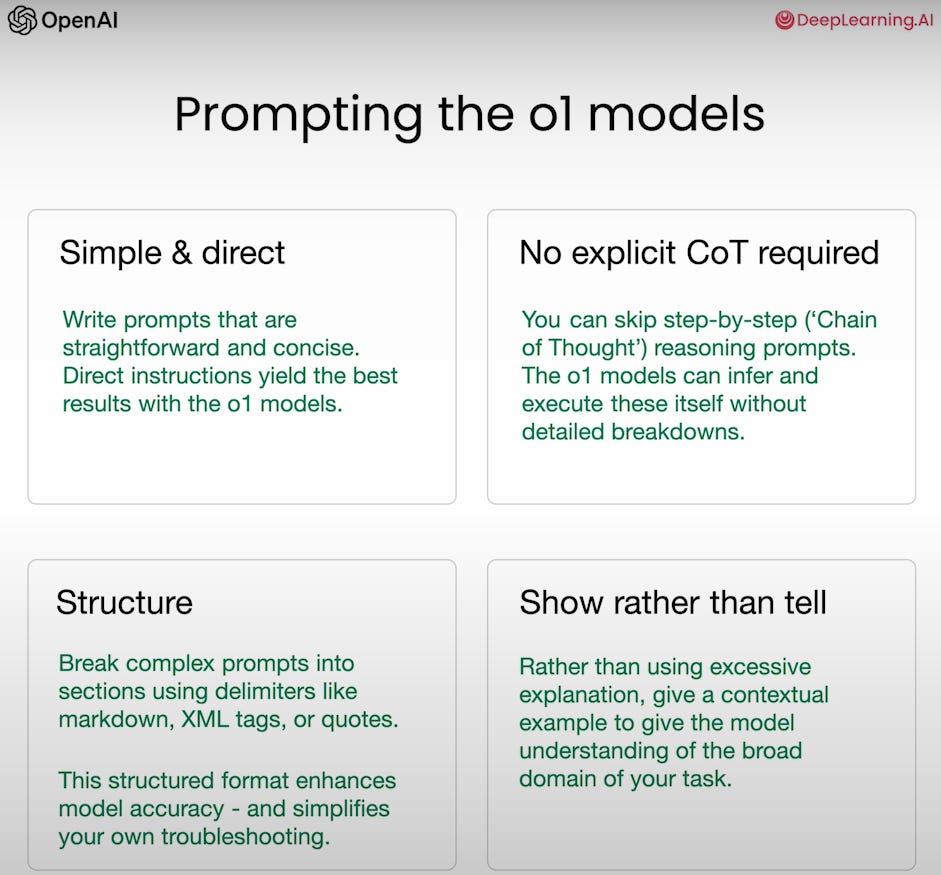

Having said that, there are certainly a few guiding principles emerging:

OpenAI also lists slight variations of these principles on the o1 documentation page:

Keep prompts simple and direct

Avoid CoT prompting

Use delimiters for structure and clarity

Give only the most relevant information and limit additional context²
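To make the “delimiters” principle a bit more concrete, here is a minimal sketch of a brief assembled with XML-style tags. The tag names (`goal`, `context`, `output_format`) and the `build_brief` helper are arbitrary assumptions for illustration; Markdown headings or plain section titles would serve the same purpose.

```python
def build_brief(goal: str, context: list[str], output_format: str) -> str:
    """Assemble a single-message brief with XML-style delimiters."""
    # Keep only non-empty context items, one bullet per line.
    context_block = "\n".join(f"- {item.strip()}" for item in context if item.strip())
    sections = [
        ("goal", goal.strip()),
        ("context", context_block),
        ("output_format", output_format.strip()),
    ]
    # Skip empty sections so the brief stays short and direct.
    return "\n\n".join(
        f"<{tag}>\n{body}\n</{tag}>" for tag, body in sections if body
    )


if __name__ == "__main__":
    print(build_brief(
        goal="Write a script that merges my exported posts into one file.",
        context=["Posts are exported as individual HTML files.", "Keep post dates."],
        output_format="A single Python file with brief comments.",
    ))
```

Note that nothing in the brief tells the model how to think. It only separates what you want, what the model needs to know, and what shape the answer should take.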

But how exactly do you determine which information is the most relevant, what to exclude, and how to properly structure it with those fancy “delimiters”?

Well, I just so happen to know someone who can help…

GPT-4o: Your o1 briefing buddy

First off, let me share a recent success story.

A few days ago, o1 helped me create a genuinely useful tool for extracting and merging my entire Substack post history:

But the truth is, I ended up having to go back and forth with o1 about a dozen times before the tool was finished. Most of those extra turns were spent adding new features that popped into my head after trying the tool or tweaking existing ones.

Had I spent a bit more time mapping out what the tool should include, I could’ve given o1 a much better brief from the get-go.

That’s when it hit me: GPT-4o is the ideal partner for this.

Built for chat: GPT-4o is all about chat. It’s quick to respond, has much higher rate limits, and you can even jump on a voice call to discuss your needs.

Tool access: Unlike o1, which currently only lets you upload images, GPT-4o can search the web, analyze uploaded documents, or create a “canvas” for you to work on together. This means you can give GPT-4o a lot more context, which it can then turn into a fleshed-out, clean brief for o1. Speaking of which…

Excels at structure: GPT-4o can take your rambling thoughts and organize them in any way you wish. It can even help you uncover stuff you might’ve overlooked via the “Ask me questions” approach. Then, GPT-4o can use whatever delimiters or formatting makes sense to create the o1 brief.

In fact, before you even start on your brief, GPT-4o can help you decide whether you need o1 for your task in the first place. Simply give it an idea of what use cases o1 is suited for and ask whether yours is worth it.
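If you work through an API instead of the ChatGPT interface, the same division of labor can be sketched as a two-stage hand-off. Everything here is an assumption for illustration: the `complete(model, prompt)` callable is a hypothetical stand-in for whichever client you use, and the instruction wording is just one possible phrasing.

```python
from typing import Callable

# (model_name, prompt) -> response text. Hypothetical signature for this sketch.
Complete = Callable[[str, str], str]

BRIEF_INSTRUCTIONS = (
    "Turn the notes below into a concise brief for a reasoning model. "
    "State the goal, list only the relevant context, and specify the output format. "
    "Do not include step-by-step reasoning instructions."
)


def brief_then_solve(
    notes: str,
    complete: Complete,
    chat_model: str = "gpt-4o",
    reasoning_model: str = "o1",
) -> str:
    """Stage 1: the chat model drafts the brief. Stage 2: the reasoning model answers it once."""
    brief = complete(chat_model, f"{BRIEF_INSTRUCTIONS}\n\nNotes:\n{notes.strip()}")
    return complete(reasoning_model, brief)


if __name__ == "__main__":
    # Stand-in for a real API client so the sketch runs offline.
    def fake_complete(model: str, prompt: str) -> str:
        return f"[{model}] received {len(prompt)} characters"

    print(brief_then_solve("Tool to merge my post history into one file", fake_complete))
```

In a real workflow you would likely review and tweak the brief yourself between the two stages. The sketch skips that step only to keep the hand-off visible.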

The coworker and the agency

Here’s a metaphor that helps me think about GPT-4o and o1.

GPT-4o: Your helpful coworker

Treat GPT-4o as your trusted office buddy.

He’s always around and happy to brainstorm with you.

Need to kick off a big project with an external agency? He’s got you. Just schedule a chat where you two can flesh out your request and pick the right agency to hire.

o1: The expert agency

o1 is that external agency.

They’re experts who specialize in your type of project. You invite them in for a kick-off meeting, explain your needs, hand them your brief…and then they disappear for a while. Eventually, they come back and deliver the final product.

Sure, you can always try asking them for tweaks, but it’s gonna cost you. So you want to avoid wasting time and money by making sure you’re on the same page from the start.

This “coworker vs. agency” mindset is a good way to think of LLMs vs. reasoning models in general.

Use each one in its intended role and give both a chance to shine!

🫵 Over to you…

Have you already used o1? If so, what’s been your impression?

What do you think of this hybrid “coworker vs. agency” approach? Do you know of other ways to get better results out of o1?

Leave a comment or drop me a line at whytryai@substack.com.

¹ For a quick primer on OpenAI’s o1 reasoning model, check out this post.

² This actually goes against Ben’s recommendation of throwing as much context as possible at o1, so I suggest experimenting with this to see what works for your particular case.

Given the limit of 50 weekly prompts for o1, your o1 and 4o strategy makes a lot of sense.

I recently moved from Claude 3.5 Sonnet to Gemini 2.0 Experimental Advanced, a reasoning model, and talking to it like a chatbot has been fine. I have alternated between Gemini 2.0 Flash and Gemini 2.0 Exp., but that ended up bifurcating my workflow, which was a little annoying.

Dang, this is almost something I could have written myself (though I would lose patience toward the end and rush the conclusion, then just ask the readers to weigh in and save the piece).

Seriously, this is exactly how I've started thinking about o1 and 4o (they are really trying to give physicists a run for that "worst at naming things" title, aren't they?). 4o and related LLMs are designed for conversations (or at least that's what they are best at), whereas you climb up to the top of the mountain to ask the guru a question once a year or whatever (o1). That's the right way to frame these.

I have not yet tried using a prompt designed by 4o for o1, but will make a conscious effort to try this out for tougher questions.