A Taste of Text-To-Video: Two Recent Tools

We now have at least two separate tools to make video clips with text input: Runway Gen-1 and ModelScope. You can try both of them for free right away.

In October last year, I briefly covered the video AI scene.

At the time, all we had was an unsettling demo from Meta AI.

Since then, we’ve moved from creepy demos to creepy try-it-yourself models.

Let me show you two recent tools that turn text prompts into videos.

Runway Gen-1: Text-prompted video-to-video

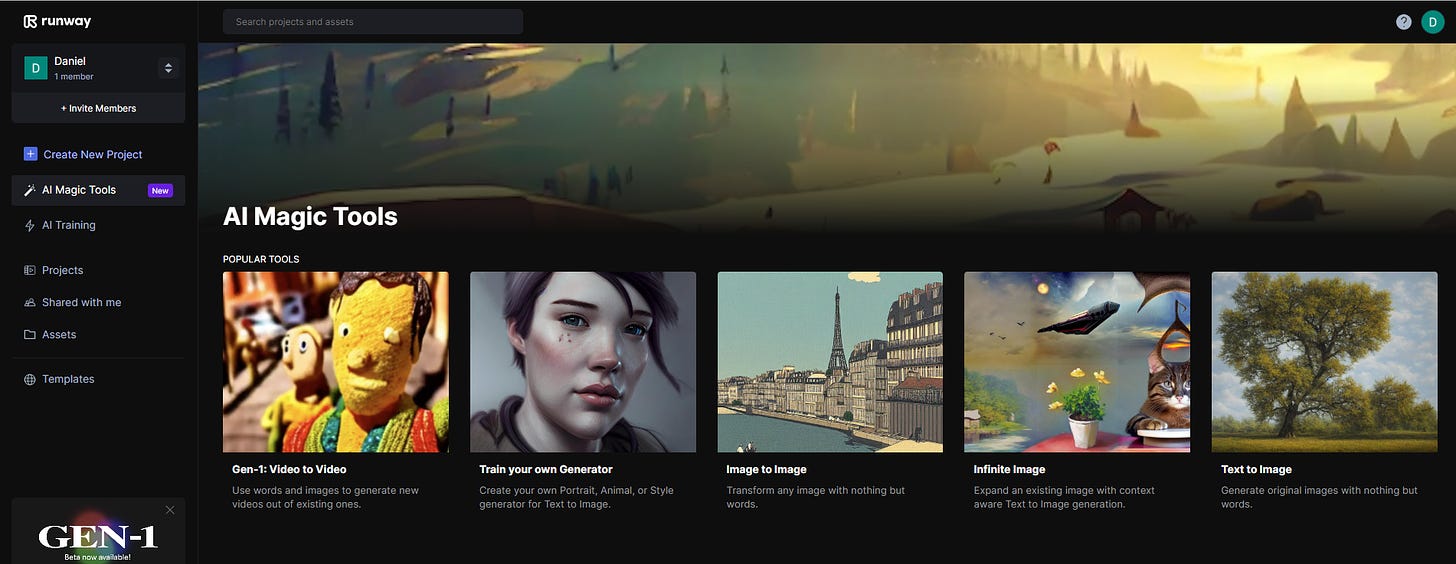

First off, we have Runway, which offers lots of AI-assisted tools for visual content creation. One of these is called Gen-1 video-to-video.

Gen-1 basically lets you “reskin” any video input using text prompts. It’s a bit like using a starting image in Stable Diffusion, but with a video instead.

To test it out, I recorded a short video with my son’s LEGO police airplane. I then asked Gen-1 to make two new versions:

A fighter jet flying into battle

A spaceship arriving to Mars

Here are the results:

I haven’t touched the default settings or tried optimizing my text prompts, so with some tweaking you can likely get much closer to your intended vision.

Runway gives all new accounts some free credits to test out its features, so you can check this out for yourself. Simply:

1. Create an account on their sign-up page.

2. In your dashboard, pick the “Gen-1: Video to Video” option:

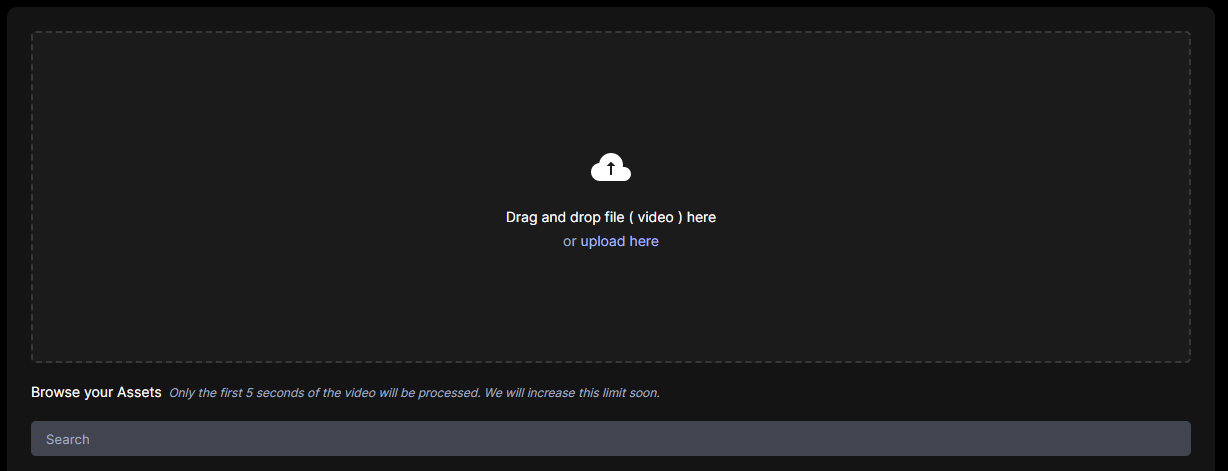

3. Upload your starting video:

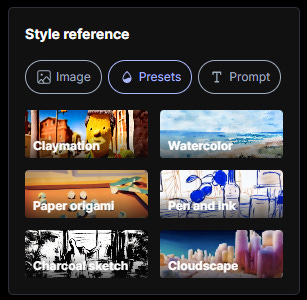

4. Pick which “skin” to apply to it. There are three possibilities:

Image reference (make your video match that image)

Several default presets (claymation, watercolor, etc.)

Text prompt (type anything you want, like I did)

5. Smash the “Generate” button and wait for your result.

Here’s a detailed guide explaining the extra settings:

Gen-1 clips are currently limited to a maximum of 5 seconds, although Runway expects to relax this limit.
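If your footage runs longer than that, one workaround (my own assumption, not a documented Runway feature) is to cut it into chunks of at most 5 seconds and run each chunk through Gen-1 separately. Because each chunk is generated on its own, the look may shift a bit between them. Here’s a minimal Python sketch that only works out where to cut. `split_into_segments` is a hypothetical helper, and the actual trimming would happen in whatever video editor you use:

```python
from __future__ import annotations

GEN1_MAX_SECONDS = 5.0  # Gen-1's current per-clip limit, as stated above


def split_into_segments(duration_s: float, max_len_s: float = GEN1_MAX_SECONDS) -> list[tuple[float, float]]:
    """Hypothetical helper: return (start, end) times, in seconds, that cover
    the whole clip in consecutive chunks no longer than max_len_s."""
    if duration_s <= 0 or max_len_s <= 0:
        raise ValueError("durations must be positive")
    segments = []
    start = 0.0
    while start < duration_s:
        end = min(start + max_len_s, duration_s)  # the last chunk may be shorter
        segments.append((start, end))
        start = end
    return segments


# Example: a hypothetical 12-second clip becomes two 5-second chunks plus a 2-second one.
for start, end in split_into_segments(12.0):
    print(f"{start:.1f}s to {end:.1f}s")
```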

Heads up: Gen-1 will soon be old news, because Runway recently teased Gen-2, which promises full text-to-video magic without any reference input:

To learn more and sign up for Runway’s Discord channel, check out the Gen-2 landing page.

But we don’t have to wait for Gen-2 to try making videos from text prompts…

ModelScope: Pure text-to-video

If you’ve browsed the Internet in the past week or so, there’s a chance you’ve already seen the viral video of Will Smith eating spaghetti. If not… I’m so sorry:

That abomination is the output of a new text-to-video diffusion model called ModelScope. It stitches together semi-coherent sequences of frames based on simple text prompts.

Want to give it a try? You’ve got at least three options:

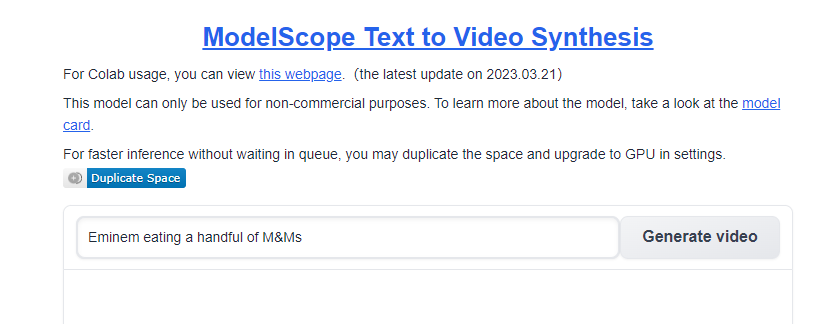

Option 1: Hugging Face demo (free and easy)

This is definitely the one I recommend. Go to the Hugging Face demo page:

From here, it’s as simple as typing in your text prompt and clicking “Generate video.”

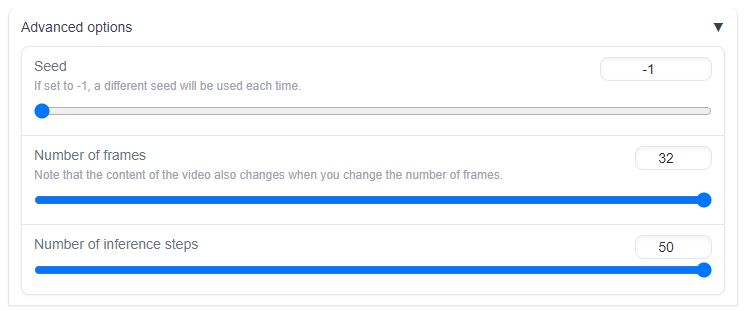

But I do suggest an extra step. Expand the “Advanced options” dropdown below the canvas:

Then set the number of frames and the number of inference steps to their maximum values (32 and 50, respectively):

This should result in slightly longer, more coherent clips.
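Why would that help? Roughly speaking, the frame count sets how long the clip runs, while the inference steps set how many denoising passes the diffusion model makes over those frames. More passes usually mean cleaner frames, at the cost of a longer wait. The length part is simple arithmetic: frames divided by playback frame rate. In this sketch, the 8 frames-per-second rate is my assumption, not a confirmed setting of the demo:

```python
ASSUMED_FPS = 8  # assumption: playback frame rate, not confirmed for the demo


def clip_seconds(num_frames: int, fps: float = ASSUMED_FPS) -> float:
    """Approximate clip length: number of frames divided by frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# Compare a shorter frame count with the maximum of 32 frames.
for frames in (16, 32):
    print(f"{frames} frames -> {clip_seconds(frames):.1f} s")
```

If the demo actually plays back at a different rate, pass it as `fps`. Either way, doubling the frames doubles the clip length, while the number of inference steps doesn’t change the length at all.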

Here’s my video of Eminem eating M&Ms (with default settings):

Perfection!

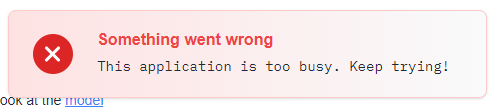

Note: As with most Hugging Face demos, there’s a queue, so you’ll have to wait a few minutes to get your result. Also, the app often returns this error:

If you see it, just click “Generate video” again until your request goes through. It usually takes 3-5 attempts.

(I know that doing the same thing over and over and expecting different results is the textbook definition of “insanity,” but trust me, it works here.)
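In code, this “just try again” strategy is called a retry loop. The sketch below is only an illustration: `retry` and `fake_generate` are hypothetical names, and the fake function merely simulates a demo that fails twice before succeeding. It doesn’t talk to Hugging Face at all:

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry(request: Callable[[], T], attempts: int = 5, delay_s: float = 0.0) -> T:
    """Call `request` until it succeeds or `attempts` runs out, pausing
    `delay_s` seconds between failed tries."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(1, attempts + 1):
        try:
            return request()
        except Exception as err:  # a real script should catch only the specific error
            last_error = err
            print(f"Attempt {attempt} failed: {err}")
            if attempt < attempts:
                time.sleep(delay_s)
    raise RuntimeError(f"All {attempts} attempts failed") from last_error


# Hypothetical stand-in for one click of "Generate video": fails twice, then succeeds.
calls = {"n": 0}


def fake_generate() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("demo returned an error")
    return "video.mp4"


result = retry(fake_generate, attempts=5, delay_s=0.1)
print(result)
```

Capping the number of attempts keeps the loop from hammering a busy queue forever, and the pause between tries is the scripted version of waiting a moment before clicking again.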

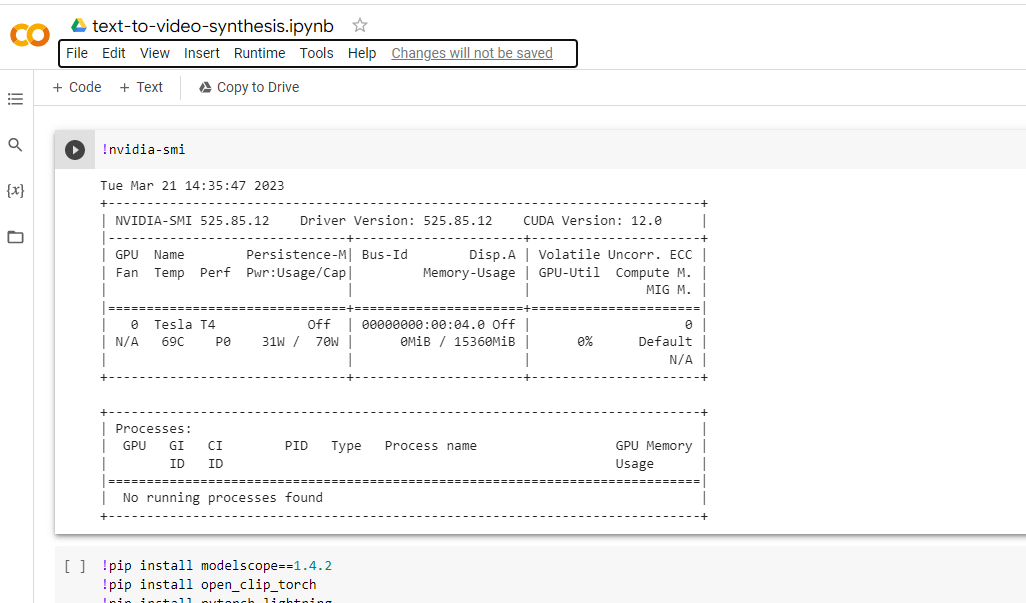

Option 2: Google Colab (free but slow and fiddly)

Your other free option is this Google Colab:

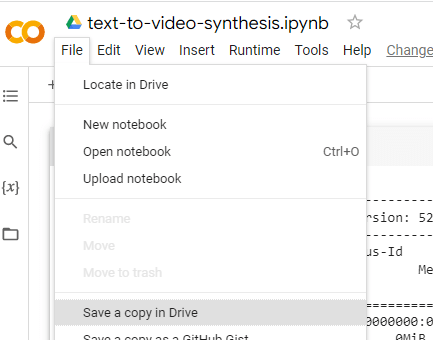

Before using it, I recommend saving a copy in your own Google Drive:

While the wall of text looks overwhelming, there are really only two steps to making it all work:

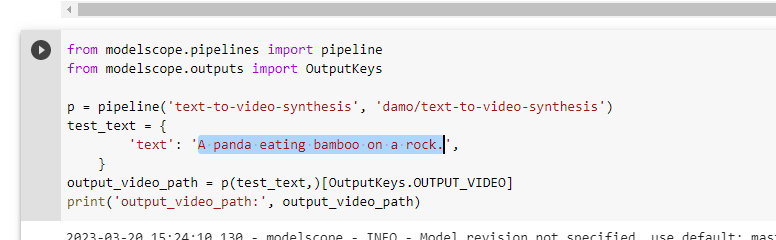

1. Scroll down to the bottom section with the text input. The default is “A panda eating bamboo on a rock”:

Replace the highlighted text with your own prompt.

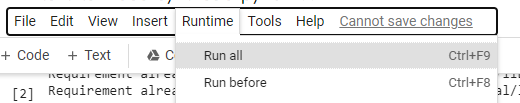

2. In the top menu, expand the “Runtime” dropdown and click on “Run all”:

(You can also just press Ctrl+F9 if you’re feeling fancy.)

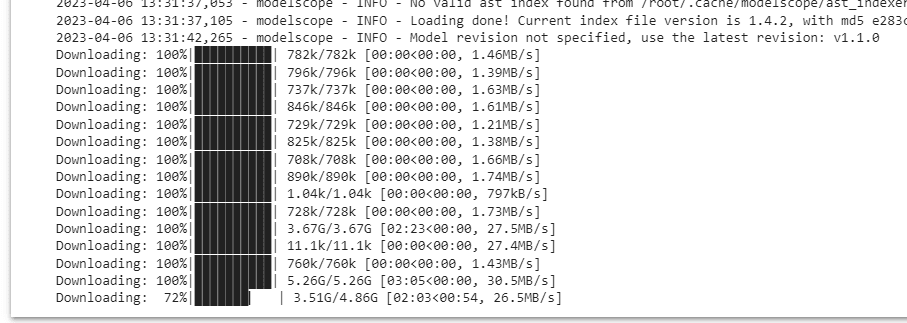

This connects the notebook to a hosted runtime and then runs every cell in order, from top to bottom.

You’ll see lots of processes running and some crazy large files downloading in the cloud:

Don’t panic!

Just wait for the Colab to do its thing. It takes around 10 minutes.

Your patience will eventually be rewarded with a tiny 2-second video.

Yeah, it’s not a lot. But unlike the Hugging Face demo, it doesn’t return errors.

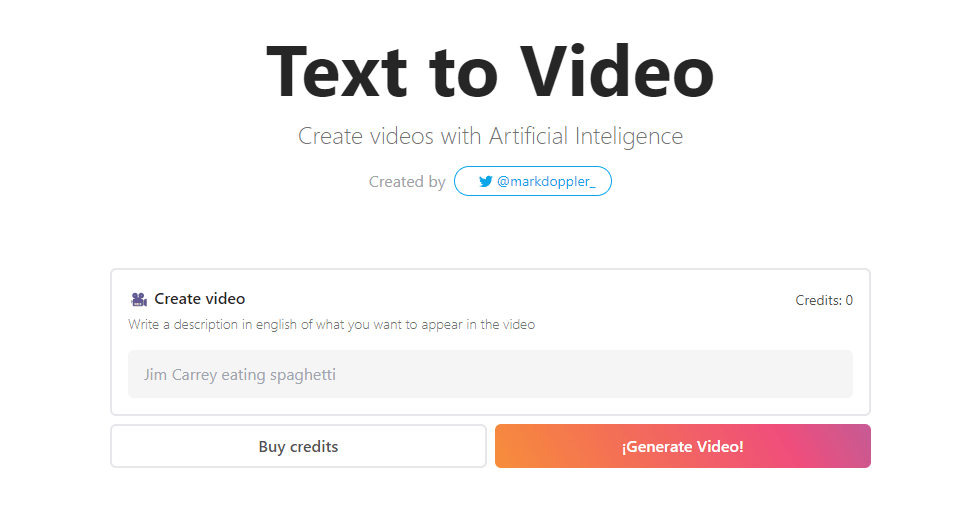

Option 3: Vercel (easy but paid)

Disclaimer: I can’t vouch for this option, as I haven’t paid for it myself.

The last alternative is only for those willing to pay for crappy short clips of humanlike monstrosities doing things that vaguely resemble what you asked.

You can find it right here:

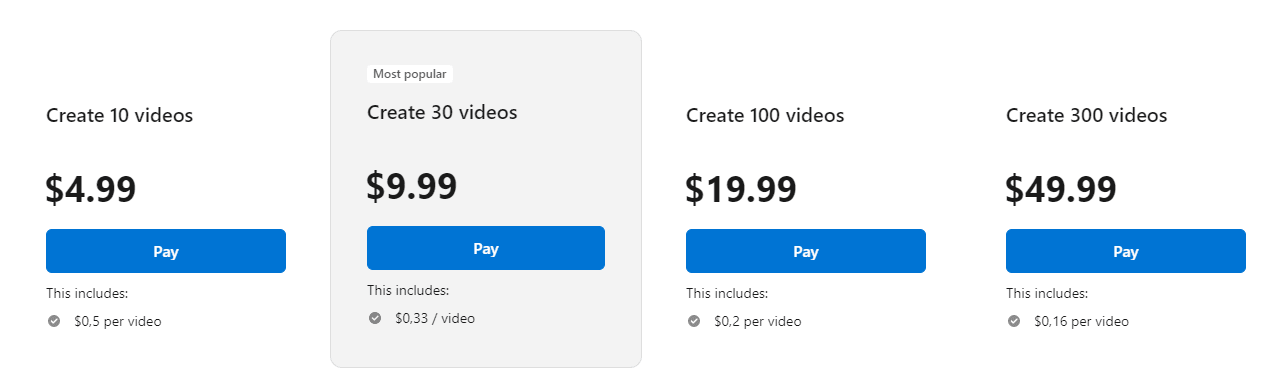

As far as I can tell, this does exactly the same thing as the Hugging Face demo but charges you anywhere from $5 to $50, depending on how many videos you need:

Presumably, there’s no queue and no errors, but I can’t confirm that.

The state of text-to-video

Right now, text-to-video is clearly just for crude, meme-worthy stuff.

But so was text-to-image when it first went mainstream last year.

We really shouldn’t underestimate the pace of progress in generative AI.

As you saw in my Midjourney V5 post, Midjourney’s text-to-image model went from crude to photorealistic output in a single year.

I’m excited to see what this year has in store for generative AI.

Over to you…

Have you done any cool stuff with text-to-video or video-to-video AI? Do you know of any competing video models that are publicly available?

If so, I’d love to hear from you. Drop me an email or leave a comment below.