Text-to-Image Model Showdown: GPT-4o vs. Ideogram 3.0 vs. Reve 1.0

A steampunk platypus, a cyberpunk goose, and a dieselpunk duck walk into a club...

Last week was crazy, y’all!

After months of relative calm on the text-to-image scene[1], three top-tier image models suddenly rolled out within days of each other:

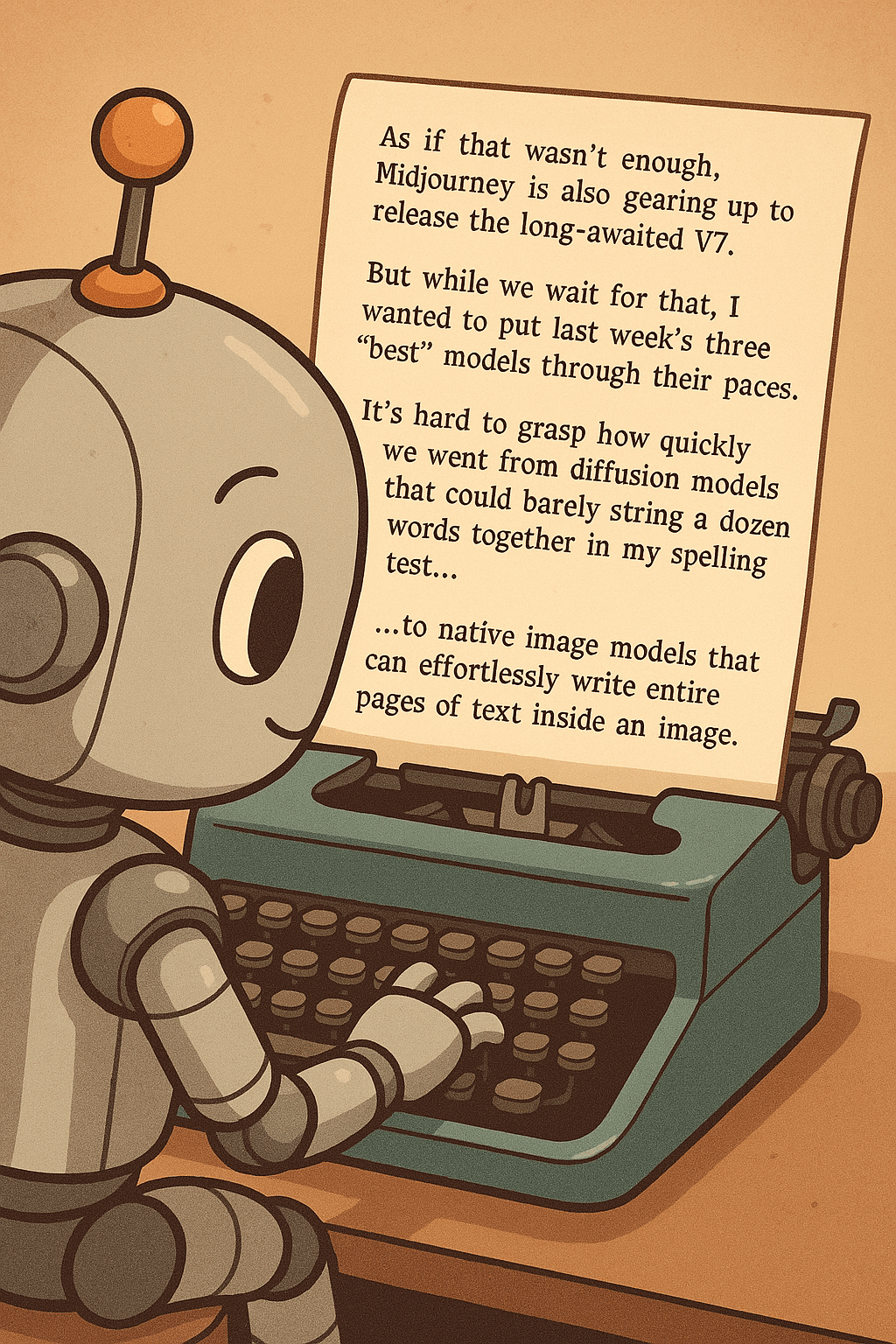

As if that wasn’t enough, Midjourney is also gearing up to release the long-awaited V7.

But while we wait for that, I wanted to put last week’s three “best” models through their paces.

It’s hard to grasp how quickly we went from diffusion models that could barely string a dozen words together in my spelling test…

…to native image models that can effortlessly write entire pages of text inside an image.

Not so long ago, I wrote about the somewhat lengthy back-and-forth process of working on AI-generated cartoons for

But now, based on nothing but this short vague prompt…

Make a hilarious four-panel comic about our relationship with modern technology, relying on relatable tropes.

…GPT-4o comes up with the concept, lays out the four panels, and draws this finished comic strip all on its own:

But is GPT-4o’s native image generation superior on all fronts, or are there some areas where diffusion models still shine?

There’s only one way to find out: a classic prompt-to-image battle!

Fun fact: In my head, I hum the words “Text-to-Image Model Showdown” to the chorus of “Teenage Mutant Ninja Turtles”—and now it’s in your head, too!

Sorry.

🧪 The test

If I’m honest, I don’t consider this a fair fight.

I fully expect GPT-4o to knock things out of the park.

As I argued last week, native image generation is a harbinger of a new era. Diffusion models simply can’t compete on prompt adherence, text rendering, and context awareness.

Still, I’m curious to see just how big the gap is.

To test the models, I’ll use a cumulative sequence of prompts of increasing complexity. Here’s how it’ll go:

“Steampunk platypus”

This one’s just to establish the default aesthetic of each model.

“Candid photo of a steampunk platypus”

This will showcase how the models render photographic images.

“Candid photo of a purple steampunk platypus with wings on stage in a comedy club”

This should test how well a model can generate unfamiliar scenarios.

“Candid photo of a purple steampunk platypus with wings on stage in a comedy club. Next to the platypus is a cyberpunk goose playing a saxophone. In front of the stage, in the audience, is a dieselpunk duck.”

This will gauge how each model handles multiple characters and precise scene composition directions.

“Candid photo of a purple steampunk platypus with wings on stage in a comedy club. To the left of the platypus is a cyberpunk goose playing a saxophone. Behind them is a show banner with the words ‘Top Billed.’ In front of the stage, in the audience, is a dieselpunk duck.”

How good is each model at rendering short text?

“Candid photo of a purple steampunk platypus with wings on stage in a comedy club. To the left of the platypus is a cyberpunk goose playing a saxophone. Behind them is a show banner with the words ‘Top Billed.’ In front of the stage, in the audience, is a dieselpunk duck. The duck is holding a handwritten show program that says ‘Welcome to Top Billed! No beaks were harmed in the making of this lineup. Prepare for laughs, feathers, and unpaid sax solos.’”

Can the model replicate longer text sequences and follow font instructions?

At each step, I’ll only be awarding points for the additional elements introduced.
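For the spreadsheet-minded, here is a rough sketch of that scoring rule in Python. The level labels and the "new element" descriptions are my own paraphrase of the prompts above, not anything the models see, and `max_score` is just a hypothetical helper name.

```python
# Each level pairs a label with the one thing that is NEW at that step.
# The descriptions are a paraphrase of the prompts, used only for bookkeeping.
LEVELS = [
    ("Level 1", "default steampunk aesthetic"),
    ("Level 2", "candid photo look"),
    ("Level 3", "purple, winged platypus on stage in a comedy club"),
    ("Level 4", "goose with a saxophone plus a duck in the audience"),
    ("Level 5", "banner with the short text 'Top Billed'"),
    ("Level 6", "handwritten program with the longer text"),
]


def max_score(levels):
    """One point per level, awarded only for that level's new element."""
    return len(levels)


print(max_score(LEVELS))  # 6
```

So a model that carries over every earlier element but fumbles the new one still gets zero for that level.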

To make sure I’m comparing apples to apples, here are the test conditions:

Prompt adherence in focus: Image quality is largely a solved issue. Leading image models can now spit out polished, high-definition pictures. What I’m after is how closely each model can follow the prompt.

Square aspect ratio: This makes the results easily comparable in a Substack image gallery without any details getting cropped. While this means our models have to cram an increasingly complex scene into limited space, hey—take it as an extra challenge!

Naked prompts: The above prompts are exactly what’s fed into each model. Ideogram offers a “Magic prompt” option, which turns your short input prompt into a long and detailed one. Reve has an “Enhance” toggle that does the same. I’m keeping both of these off for my test.

Best of four: Ideogram always spits out four images by default. As such, I’ll ask each model for four images per prompt, and then pick the most prompt-adherent result.
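If you wanted to automate the "best of four" step, it might look something like the sketch below. To be clear, this is not what I ran: in my test, the "adherence" judge is me squinting at four pictures. Both `generate` and `adherence` are hypothetical stand-ins you would have to supply, and the demo uses fake image names and made-up scores.

```python
from typing import Callable, List


def best_of_n(prompt: str,
              generate: Callable[[str], str],
              adherence: Callable[[str, str], float],
              n: int = 4) -> str:
    """Generate n candidates for one prompt and keep the most prompt-adherent."""
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda image: adherence(prompt, image))


# Demo with stand-ins: fake "images" and made-up adherence scores.
fake_outputs = iter(["img_a", "img_b", "img_c", "img_d"])
fake_scores = {"img_a": 0.2, "img_b": 0.9, "img_c": 0.5, "img_d": 0.4}

pick = best_of_n(
    "Steampunk platypus",
    generate=lambda prompt: next(fake_outputs),
    adherence=lambda prompt, image: fake_scores[image],
)
print(pick)  # img_b
```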

Let’s get this visual show on the road!

🖼️ The results

Here are the images.

Level 1: Default aesthetic vibe check

Steampunk platypus

Excellent work, 4o. A stylish anthropomorphic steampunk platypus, as requested.

Ideogram went for a flat cartoon illustration, which also sticks to the prompt. Nice.

With that out of the way, here’s me when I saw Reve’s output:

This simple vibe check shouldn’t have been possible to fail, Reve!

The first level is the softest of softballs I could give you.

How do you hear “Steampunk platypus” and end up with “Beaver wearing a leather strap-on snout”?!

Steampunk is one of the most popular mass-market aesthetics in the world. Why are you unable to render it?

Scoreboard

GPT-4o: 1

Ideogram: 1

Reve: 0 (somehow)

Level 2: Photographic images

Candid photo of a steampunk platypus

Solid work by both 4o and Ideogram, who clearly went shopping together at the same steampunk clothes store. I’d even give Ideogram a slight edge for the more authentic “candid photo” vibe.

Reve…what’s happening, buddy?

Where’s the steampunk? Why is his tongue flopping out like a cheap chew toy? And why do his spots make it look like his platypus mom hooked up with a cheetah? (Insert your own “cheater” vs. “cheetah” pun here, dear reader.)

Begrudgingly, I’ll still have to award Reve a point since I’m only judging the newly added elements at each stage, and that abomination does look like a candid photo.

Scoreboard

GPT-4o: 2

Ideogram: 2

Reve: 1

Level 3: Imagining the nonexistent

Candid photo of a purple steampunk platypus with wings on stage in a comedy club

Let’s see:

GPT-4o: Purple? Check. Wings? Check. On stage in what could be a comedy club? Yup. It even threw in a pair of goggles that could generously be described as steampunk-ish.

Ideogram: How did you know that “Canedy IUE” is my favorite comedy club? I kid, Ideogram, you did well: Purple platypus, wings, stage, comedy club. All there. (The platypus is facing away from a nonexistent audience, but maybe that’s part of the act?)

Reve: AAAAAAAAAAAAAAAH! AAAAAAAAAAAAAAAAAH! Why can’t I stop screaming?! AAAAAAAAAAAAAAAAAAAH!

In case you think I intentionally picked a bad image just to mock Reve, here’s the entire 4-image grid:

Good luck trying to fall asleep tonight.

Scoreboard

GPT-4o: 3

Ideogram: 3

Reve: 1

Level 4: Stage directions and multiple characters

Candid photo of a purple steampunk platypus with wings on stage in a comedy club. Next to the platypus is a cyberpunk goose playing a saxophone. In front of the stage, in the audience, is a dieselpunk duck.

As expected, GPT-4o nailed it!

Ideogram, you had a good run, but all good things come to an end.

Shockingly enough, this is Reve’s best attempt thus far. But that’s not saying much. I’m starting to suspect that Reve was engineered for realism and photography, so it just can’t be bothered to deal with my “cyberpunk” and “dieselpunk” nonsense.

Scoreboard

GPT-4o: 4

Ideogram: 3

Reve: 1

Level 5: Short text

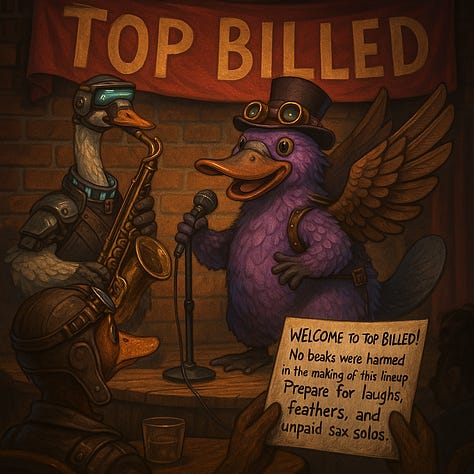

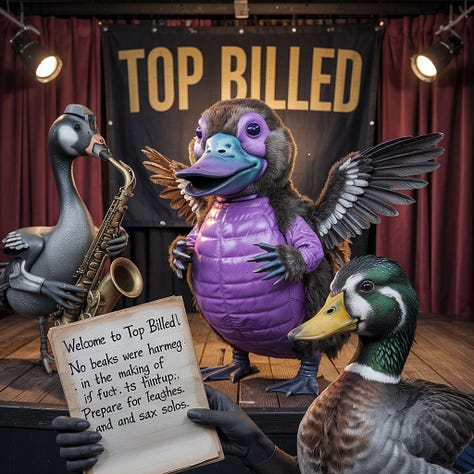

Candid photo of a purple steampunk platypus with wings on stage in a comedy club. To the left of the platypus is a cyberpunk goose playing a saxophone. Behind them is a show banner with the words “Top Billed.” In front of the stage, in the audience, is a dieselpunk duck.

Yes, everyone gets a point for placing the banner and spelling the text correctly!

No, we won’t discuss the angry man in a furry costume hijacking a high-school theater rendition of “Duck Duck Goose: The Musical” in Reve’s image.

(Note how GPT-4o still keeps every other scene detail in place, even though it’s finally decided to shift away from candid photography.)

Scoreboard

GPT-4o: 5

Ideogram: 4

Reve: 2

Level 6: Long text

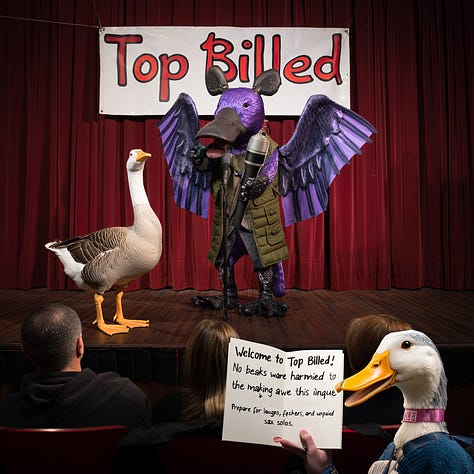

Candid photo of a purple steampunk platypus with wings on stage in a comedy club. To the left of the platypus is a cyberpunk goose playing a saxophone. Behind them is a show banner with the words “Top Billed.” In front of the stage, in the audience, is a dieselpunk duck. The duck is holding a handwritten show program that says “Welcome to Top Billed! No beaks were harmed in the making of this lineup. Prepare for laughs, feathers, and unpaid sax solos.”

I’ll…be…damned.

GPT-4o sneaks in the correctly spelled handwritten note while holding on to every other element except candid photography.

Ideogram, you tried your best.

Reve, I appreciate the impressive winged samurai koala-duck hybrid, but no thanks.

Scoreboard

GPT-4o: 6

Ideogram: 4

Reve: 2
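For anyone who wants to double-check my math, here is a small Python tally of the points awarded at each level above. The per-level 1s and 0s come straight from the scoreboards; the variable names are just mine.

```python
from itertools import accumulate

# Points awarded per level (Level 1 through Level 6), read off the scoreboards.
POINTS = {
    "GPT-4o":   [1, 1, 1, 1, 1, 1],
    "Ideogram": [1, 1, 1, 0, 1, 0],
    "Reve":     [0, 1, 0, 0, 1, 0],
}

# Running scoreboard after each level, then the final totals and ranking.
running = {model: list(accumulate(points)) for model, points in POINTS.items()}
totals = {model: sum(points) for model, points in POINTS.items()}
ranking = sorted(totals, key=totals.get, reverse=True)

print(running["Ideogram"])  # [1, 2, 3, 3, 4, 4]
print(totals)               # {'GPT-4o': 6, 'Ideogram': 4, 'Reve': 2}
print(ranking)              # ['GPT-4o', 'Ideogram', 'Reve']
```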

🏆 The verdict

You’ll never guess, but…

🥇1st place: GPT-4o

To the surprise of absolutely nobody except those who didn’t read the “Test” section above, GPT-4o is in a league of its own.

It handles spelling, different visual aesthetics, and specific scene directions without breaking a sweat.

Although GPT-4o overlooks the “candid photo” aspect in later prompts, even that is fixable with a minor prompt tweak.

Here’s what happened when I added the following line to the prompt: “Remember that this is a photograph, so make it look photographic.”

🥈2nd place: Ideogram

I think Ideogram had the best “photographic” feel to its images.

It fought valiantly and only started falling apart midway through our competition.

Context awareness gives native image models like GPT-4o an unfair advantage when scene complexity increases.

🥉3rd place: Reve

On the plus side, Reve stayed consistent throughout the test.

On the minus side, Reve was consistently bad at just about everything.

Years from now, Reve will still be fond of telling people at parties about that time it won a bronze medal in a drawing competition by ranking third.

Then, it’ll turn away and quietly, under its breath, mutter: “…out of three.”

It sucks that Reve didn’t get a chance to shine today. Maybe it was trained for a different set of challenges and image requests.

On the one hand, I feel bad that I’ve set Reve up to fail with my “out there” test.

But on the other hand…

🫵 Over to you…

What do you say? Was Reve robbed of an opportunity to prove itself? Have you tried Reve and found it to be great at certain things where other models fail? Is there anything you can share to redeem Reve in people’s eyes? Anything?!

Leave a comment or drop me a line at whytryai@substack.com.

Thanks for reading!

Why Try AI is a passion project, and I’m grateful to everyone who helps keep it going. If you’d like to support my work and unlock cool perks, consider a paid subscription.

[1] If you don’t count Gemini 2.0 Flash with native image generation, which was a big deal.

I don't have a ton to add here other than I'll probably stay away from Reve for a while, but I must comment on this:

"Fun fact: In my head, I hum the words “Text-to-Image Model Showdown” to the chorus of “Teenage Mutant Ninja Turtles”—and now it’s in your head, too!"

First, good for you! This is important work, and I'm glad somebody is doing it. However, now I'm concerned: what comes next?

I'm envisioning something like:

Text-to-Image Model Showdown

Text-to-Image Model Showdown

Text-to-Image Model Showdown

GPT 4 will win again

But that's kind of lame. It goes w/the rhythm, but it's also kind of self-defeating. Thoughts?

These are always fun.