Replace Stuff in Images Using Only Text

Text-based inpainting lets you replace objects in existing images simply by describing what you want to change. It's as straightforward as it sounds.

The concept of AI-driven inpainting—replacing specific parts of an image with something else—isn’t new.

DALL-E and Stable Diffusion have had this functionality for months now.

Inpainting isn’t very complicated. You just:

1. Upload an image
2. Erase (mask) the parts you want replaced
3. Specify the inpainting area
4. Describe to the AI model what you want to see there
5. Profit?
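Stripped down, the last step of that workflow is a blend: pixels outside the mask are kept and pixels inside it come from the model. The toy Python sketch below shows only that compositing step. It assumes a tiny grayscale image stored as nested lists, and `model_output` is a hypothetical stand-in for what the AI generates. Producing that output is the hard part, and it isn't shown here.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        # Pick the generated pixel only inside the masked (erased) area.
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


# A 3x3 grayscale "photo", a hypothetical model output, and a mask
# marking the single pixel we erased (1 = replace, 0 = keep).
photo = [[10, 10, 10],
         [10, 99, 10],
         [10, 10, 10]]
model_output = [[50, 50, 50],
                [50, 200, 50],
                [50, 50, 50]]
mask = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]

print(composite(photo, model_output, mask))
# → [[10, 10, 10], [10, 200, 10], [10, 10, 10]]
```

Real tools work on full-color images and often blend the mask edges softly, but the keep-or-replace idea is the same.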

But a new tool has taken this simplicity to the next level.

Let’s take a quick look.

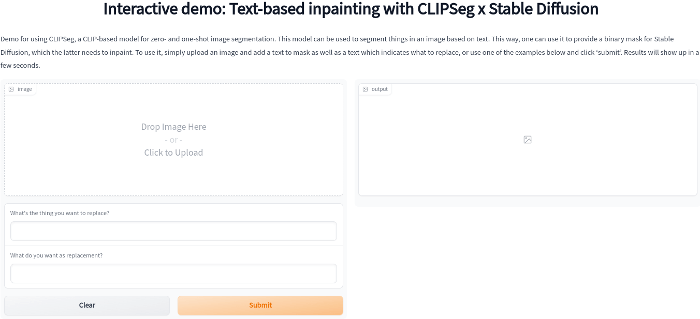

Text-based inpainting demo

The tool is currently a standalone demo, but my guess is that it won’t be long before we see this feature incorporated into AI generators by default.

Here’s how it works.

1. Open the demo: Interactive demo: Text-based inpainting

2. Upload an image. I’ve used this Pixabay photo of an Easter egg basket:

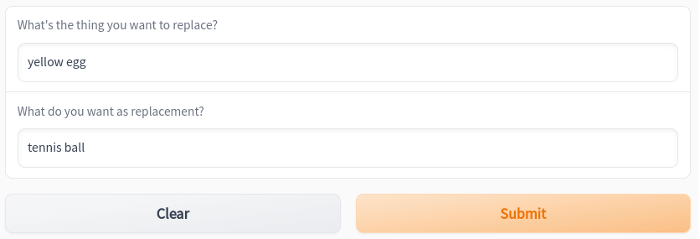

3. Specify the object you want replaced and what to replace it with. I asked the tool to swap the yellow egg for a tennis ball.

4. Get your output image.

Super cool, right?

What’s impressive is that the tool can zero in on an object based on additional descriptors. I deliberately picked a basket of eggs to see if I could pinpoint just the yellow one for replacement.

A few caveats

Nothing’s perfect, so here are a few things to keep in mind:

The object you want replaced should ideally appear only once in the image.

If the basket held two yellow eggs, it’d be hard to tell the AI which one you want replaced.

The object should be easy to identify and isolate.

Selecting a specific star in a photo of a starry sky is tricky using just text.

Replacement objects should have roughly the same size and shape.

I tried replacing the egg with a banana, and the AI had to get, uh, creative.

You can expect imperfections and artifacts.

Have you noticed what happened to the blue egg next to our tennis ball?
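The first two caveats make more sense if you picture how a tool might turn words into a mask. One plausible approach is to score how well each pixel matches your text and keep the pixels above a threshold. The demo doesn't document its internals, so treat this as an assumption. The toy sketch below uses made-up scores and a hypothetical 0.5 threshold, then counts the separate blobs in the resulting mask. If "yellow egg" matches two eggs, you get two regions and no way to say which one you meant.

```python
from collections import deque


def text_mask(scores, threshold=0.5):
    """Turn hypothetical per-pixel 'matches the prompt' scores into a 0/1 mask."""
    return [[1 if s >= threshold else 0 for s in row] for row in scores]


def count_regions(mask):
    """Count 4-connected regions of 1s in the mask using breadth-first search."""
    height = len(mask)
    width = len(mask[0]) if mask else 0
    seen = [[False] * width for _ in range(height)]
    regions = 0
    for r in range(height):
        for c in range(width):
            if mask[r][c] and not seen[r][c]:
                regions += 1  # found a new, unvisited blob
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return regions


# Made-up scores for the prompt "yellow egg" on a tiny 3x5 image.
one_egg = [[0.1, 0.9, 0.8, 0.1, 0.1],
           [0.1, 0.7, 0.9, 0.2, 0.1],
           [0.1, 0.1, 0.1, 0.1, 0.1]]
two_eggs = [[0.9, 0.1, 0.1, 0.1, 0.8],
            [0.8, 0.1, 0.1, 0.2, 0.9],
            [0.1, 0.1, 0.1, 0.1, 0.1]]

print(count_regions(text_mask(one_egg)))   # → 1
print(count_regions(text_mask(two_eggs)))  # → 2
```

A single region is an unambiguous target; two regions mean the words alone don't pin down which object to replace.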

Still, it’s quite extraordinary that we can now edit images using nothing but words. I hope to see this functionality perfected and implemented in other tools soon!

Over to you...

Have you played around with inpainting in DALL-E, Stable Diffusion, or other products? What’s your impression of this text-based functionality?

If you know of products with similar features, I’d love to hear about them. Drop me an email or leave a comment.