Sunday Rundown #95: So Much Google & So Much Waldo

Sunday Bonus #55: Comparison page for 15 AI video models.

Heads up: I’m traveling for Easter with my family, so the next Sunday Rundown will be on April 27.

Happy Sunday, friends!

Welcome back to the weekly look at generative AI that covers the following:

Sunday Rundown (free): this week’s AI news + a fun AI fail.

Sunday Bonus (paid): an exclusive segment for my paid subscribers.

Let’s get to it.

🗞️ AI news

Here are this week’s AI developments.

👩‍💻 AI releases

New stuff you can try right now:

Amazon news (models available via Amazon Bedrock):

Nova Sonic is a speech model that can pick up subtle cues like tone, pacing, inflection, and more.

Nova Reel 1.1 is a video model that can create 2-minute multi-shot clips from text and image prompts.

Anthropic introduced a two-tiered Max Plan for Claude, with 5x more usage ($100/month) or 20x more usage ($200/month) plus early access to new features.

Canva rolled out Visual Suite 2.0, a massive product upgrade with dozens of new features, many powered by AI.

Deep Cogito released Cogito v1 Preview, a family of open-source LLMs that outperform competitor models of comparable sizes.

Google news (will Google ever take a break?):

AI Mode is now multimodal, so you can snap a photo or upload an image to ask questions and get comprehensive responses.

Deep Research is now powered by Gemini 2.5 Pro Experimental for Gemini Advanced users, making it a far more capable research agent that now convincingly outperforms OpenAI’s version.

Firebase Studio uses Gemini models to help developers prototype, build, and deploy full-stack applications more efficiently. (Try it here.)

Gemini Live now lets users share their phone’s screen and camera during conversations for rich, multimodal chats. (Previously known as “Project Astra.”)

Vertex AI now incorporates all of Google’s creative AI models like Lyria (music), Veo 2 (video), Imagen 3 (images), and Chirp 3 (voice), most of which have also received additional improvements and features.

Workspace product suite is getting many new AI capabilities, tied together by the new Workspace Flows, which helps orchestrate work across different apps.

…and even more stuff announced at Google Cloud Next 2025.

Meta released Llama 4, the next generation of its open-source, natively multimodal family of language models. (Reception has been mixed. … wrote an excellent summary.)

Microsoft rolled out almost a dozen new Copilot features, including memory, vision, agentic tasks, and more.

Moonshot AI open-sourced Kimi-VL and Kimi-VL-Thinking, lightweight vision-language models that excel in multimodal reasoning.

NVIDIA’s new Llama 3.1 Nemotron Ultra 253B excels at advanced reasoning and instruction following, suited for high-accuracy scientific, code, and math tasks.

OpenAI expanded ChatGPT’s Memory to (optionally) reference all past chats to give personalized responses. Rolling out to Pro and Plus accounts outside the EU.

Runway introduced Gen-4 Turbo, a faster version of Gen-4 Alpha that can generate 10-second clips in just 30 seconds. (Also available on free accounts, as long as you have credits left.)

WordPress launched an AI Website Builder that designs complete sites with images and text from a simple prompt.

YouTube is rolling out Music Assistant, which generates music tracks from prompts, to a subset of Creator Music users.

🔬 AI research

Cool stuff you might get to try one day:

Adobe is working on a range of AI agents that can intelligently assist users of its products: Acrobat, Express, Photoshop, and Premiere Pro.

Researchers from NVIDIA and several US universities outlined a video generation method that uses Test-Time Training to create coherent 1-minute video stories.

📝 Suddenly, a surprise survey spawns…

Please help make Why Try AI better. Let me know what works and what doesn’t.

🤦‍♂️ AI fail of the week

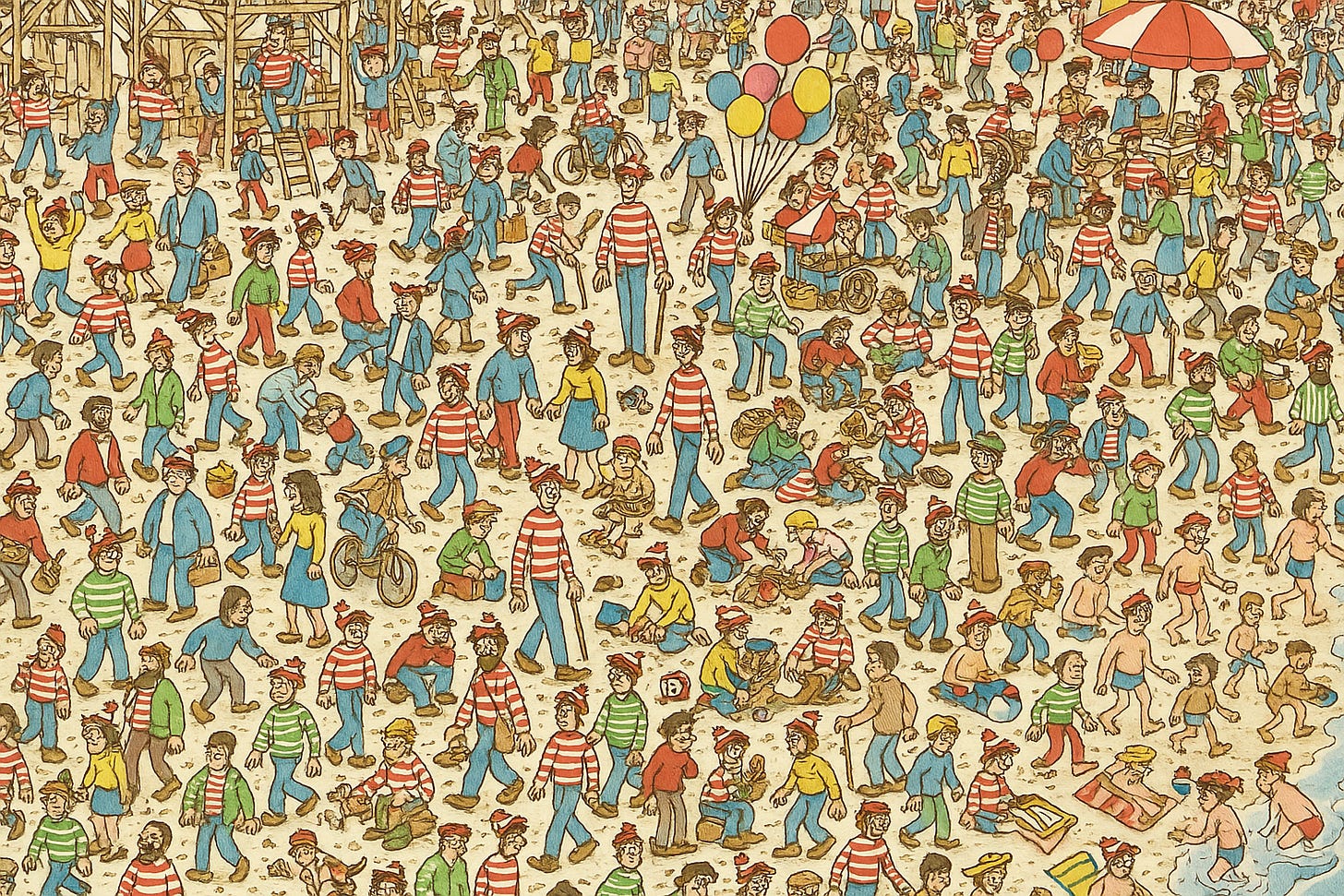

Waldo is somewhere in this GPT-4o image. See if you can find him.

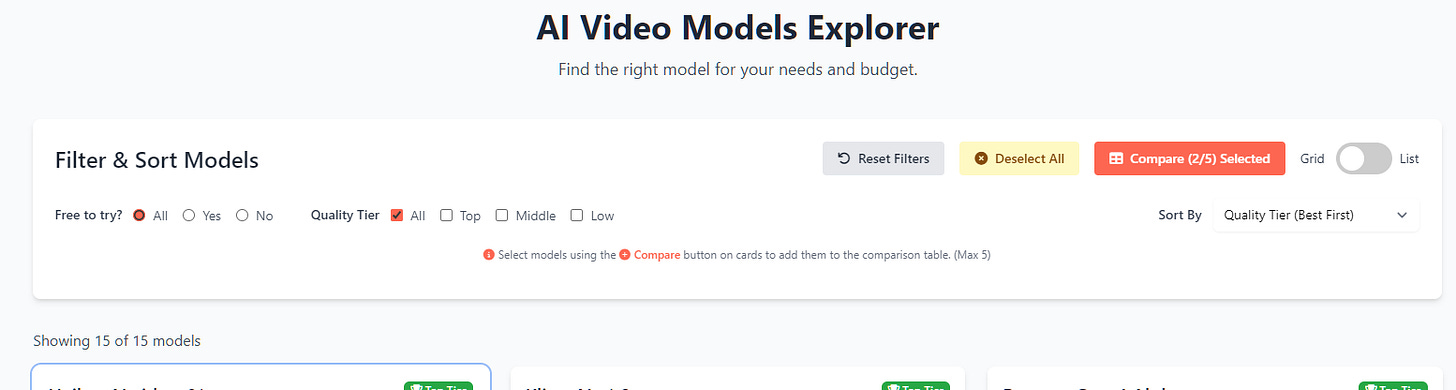

💰 Sunday Bonus #55: Interactive page to compare 15 AI video models

Wow, last week’s GPT-4o use cases swipe file proved quite popular!

So I once again went to my new friend Genspark Super Agent to help turn my research insights into a neat interactive page. This time, it’s about video models.

While working on my recent guest post about AI video, I put together a Google Sheet comparing different video models, pricing, and features.

This week, I spent a bit of time with Genspark and Gemini to turn my initial research into a nice page that lets you explore and compare these models.

If nothing else, it’ll give you a great overview of the current AI video landscape and available models.