Bye, Splatterprompting. We Hardly Knew You.

The era of text-to-image prompts filled with dozens of descriptors is over. These days, it's better to start with simple prompts and build them up gradually.

Eons ago, in the distant era of September 2022, Stable Diffusion was just entering the AI art scene.

In those ancient times, a group of wise elders emerged from the shadows, shrouded in mystery. They roamed the land crafting powerful, elaborate text prompts.

They were…the Splatterprompters.

These enigmatic visionaries recited secret incantations that only they were privy to.

“Hyperdetailed, extremely detailed, highly detailed, insane detail,” they’d whisper.

“4K, UHD, ultra-high resolution, 16K render,” they’d mutter.

“Sorry, I don’t share my prompts,” they’d reply to those foolish enough to ask.

But as AI models evolved and became capable of generating great images from really basic prompts, the influence of Splatterprompters began to shrink. They tried to hold on to their now-redundant practices but found themselves increasingly irrelevant.

Let’s see what we can learn from their sad fate.

What’s splatterprompting?

You won’t find “splatterprompting” in any dictionary. Yet.

The term was coined by Medium writer John Walter in his December 2022 post about getting high-quality images with simple prompts.

He used “splatterprompting” to describe the practice of stuffing your prompts full of largely synonymous descriptors in the hope of getting images that are sharper, higher quality, more photorealistic, and so on.

Here’s a 100% real splatterprompt found on this “best 100” list:

Or how about this one, featuring several repeated instances of “highly detailed” along with “high detail,” “intricate,” “intricate abstract,” “intricate artwork,” and so forth (I lost track after what felt like hours of trying to parse it):

Friends, if you recognize yourselves in the above, please stop doing this!

Here’s why:

Why splatterprompts are a bad idea

So what exactly do I have against splatterprompting? Well…

1. Whole chunks of long text get ignored without you knowing

Here’s what many don’t realize: Just because you can type a long prompt into a text-to-image generator, it doesn’t mean the entire prompt counts.

That’s because most AI art tools have a hidden limit on how much of your prompt they actually take into account when generating an image:

Midjourney: Approximately 60 words.

Stable Diffusion: 75 tokens (which also works out to around 60 words).

Token counts are a bit trickier, as they depend on stuff like how common the character sequences in your prompt are. I discuss this in more detail in my TLDR version of the “Stable Diffusion Prompt Book” (see Tip #6).

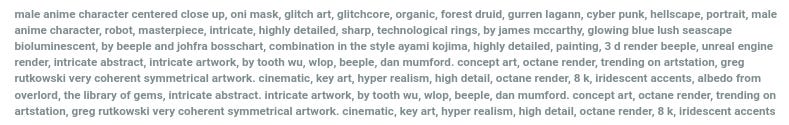

As a shortcut, you can use this free Tokenizer to see how many tokens your prompt takes up. For instance, that endless splatterprompt from above clocks in at a whopping 436 tokens:

A keen observer will notice that 436 is a slightly higher number than 75. This means that over 80% of that insane prompt is completely disregarded.
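If you want to double-check that math, here’s a minimal Python sketch. It assumes, as described above, that everything past the 75-token limit is simply cut off. `ignored_share` is a hypothetical helper name, not part of any tool.

```python
def ignored_share(prompt_tokens: int, token_limit: int = 75) -> float:
    """Fraction of a prompt's tokens that land past the limit (assumes plain truncation)."""
    if prompt_tokens <= token_limit:
        return 0.0  # the whole prompt fits, nothing is dropped
    return (prompt_tokens - token_limit) / prompt_tokens


# 436 tokens for the splatterprompt above, 75 for Stable Diffusion
print(f"{ignored_share(436):.1%} of the prompt is ignored")  # 82.8% of the prompt is ignored
```

That’s 361 of 436 tokens doing nothing at all.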

2. Splatterprompts make it hard to evaluate what works

Quiz time: Can you guess which of the adjectives and descriptors in that 436-token prompt made the biggest difference?

Don’t look at me, I have no fucking clue. And I guarantee you that neither does the original prompter.

That’s because filling your prompt with every descriptor under the sun makes it near-impossible to know which of them actually matter and to what extent.

Trying to test that empirically would take days of removing and replacing each of the dozens of descriptors one by one, then comparing all of the resulting images…and good luck with that!
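To get a sense of the scale, here’s a rough Python sketch of that “remove one descriptor at a time” test (a leave-one-out comparison). The helper name and the example descriptors are made up for illustration. Every descriptor produces its own prompt variant, and each variant then needs to be rendered (ideally with a few different seeds) and compared against the full prompt.

```python
def leave_one_out(descriptors: list[str]) -> list[tuple[str, str]]:
    """Return (dropped_descriptor, prompt_without_it) pairs, one per descriptor."""
    variants = []
    for i, dropped in enumerate(descriptors):
        kept = descriptors[:i] + descriptors[i + 1:]  # everything except position i
        variants.append((dropped, ", ".join(kept)))
    return variants


# Hypothetical descriptors, just to show the shape of the test
descriptors = ["silver frog", "highly detailed", "intricate", "8K", "sharp focus"]
for dropped, prompt in leave_one_out(descriptors):
    print(f"without {dropped!r}: {prompt}")
```

And even that only isolates each descriptor on its own. It tells you nothing about how descriptors interact, which would mean testing combinations on top.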

3. Duplicate modifiers don’t do anything. At all.

Here are two shiny silver frogs:

One of them is “highly detailed,” the other is “highly detailed, highly detailed, highly detailed, highly detailed, highly detailed.”

Can you spot the frog that’s 5x more awesome? Me neither!

There’s little evidence that repeating the same exact modifier does anything except waste your already limited space (see above).

Now, there’s some indication that repeating variations of a concept helps the AI get the scene itself right (e.g., using “garden mouse” and “a mouse in a garden” to increase the chance of getting both of these elements in the final image).

But no, you won’t make your image 10x more intricate by chanting “highly detailed” ten times like a voodoo spell.
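If you’ve inherited a prompt full of repeats, stripping the exact duplicates is easy to automate. Here’s a minimal Python sketch, assuming the prompt is a comma-separated list of modifiers (`dedupe_modifiers` is a hypothetical helper). It deliberately removes only exact, case-insensitive repeats and leaves different phrasings alone, since varied descriptions of the scene can still help.

```python
def dedupe_modifiers(prompt: str) -> str:
    """Drop exact (case-insensitive) repeats of comma-separated modifiers, keeping first occurrences."""
    seen = set()
    kept = []
    for part in prompt.split(","):
        term = part.strip()
        key = term.lower()
        if term and key not in seen:  # skip empty pieces and anything already kept
            seen.add(key)
            kept.append(term)
    return ", ".join(kept)


print(dedupe_modifiers("shiny silver frog, highly detailed, highly detailed, Highly Detailed"))
# shiny silver frog, highly detailed
```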

4. Splatterprompts draw your focus away from the actual subject matter

The time you spend sprinkling identical adjectives all over the place like magic fairy dust distracts you from thinking of the actual scene or subject.

Prompt modifiers can be copied, but the vision you apply them to is far more likely to be truly unique.

So don’t let splatterprompting pull you in the wrong direction!

5. AI models will only get better at language comprehension

Early text-to-image algorithms needed more hand-holding to get the scene right. That’s where the above practice of repeating similar scene descriptions comes from.

But Midjourney V4 was a huge leap in the direction of AI art models that understand prompts better and generate quality images without the need for descriptor-spam.

During recent office hours, Midjourney founder David Holz indicated that the soon-to-be-released V5 will be even better at understanding language and working with text input.

Splatterprompting is a dying concept. It’s time to shake the habit and start using natural language when talking to AI models.

What to do instead of splatterprompting

Here’s a better way to approach text-to-image creation.

1. Start simple

Begin with your basic subject or scene in mind.

Are you going for a photo of a cat? Just use “cat photo” as your starting point.

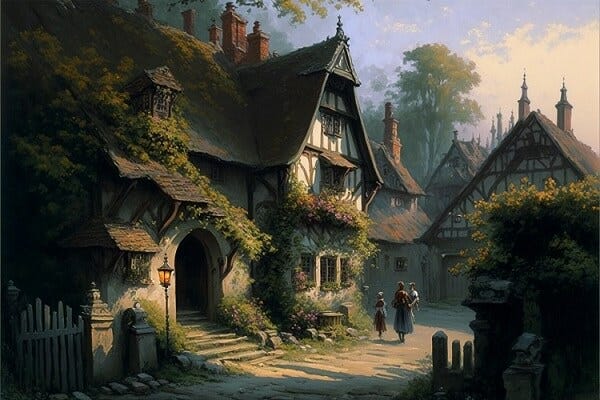

Want an oil painting of a medieval village? Start with “medieval village, oil painting.”

And so on.

There’s no need to overengineer your prompt before you even get going.

2. Add modifiers one by one

Once your first images come back, you’ll see just how close they are to your original idea. Then you can identify the missing elements and add them in.

If your “cat photo” prompt returns full-body shots of cats but you want a portrait, just add “portrait” to your prompt and see where that takes you.
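Here’s that workflow as a tiny Python sketch: start from the base prompt and layer on one modifier per round, so each batch of images differs from the last by exactly one change. `build_up` is a hypothetical helper, and passing in a fixed list of modifiers is a simplification. In practice you’d pick the next modifier only after looking at the previous results.

```python
from collections.abc import Iterator


def build_up(base: str, modifiers: list[str]) -> Iterator[str]:
    """Yield the base prompt, then the prompt with each modifier appended in turn."""
    prompt = base
    yield prompt
    for modifier in modifiers:
        prompt = f"{prompt}, {modifier}"  # add exactly one new element per step
        yield prompt


# "portrait" comes from the example above; "black and white" is just an illustration
for step, prompt in enumerate(build_up("cat photo", ["portrait", "black and white"])):
    print(step, prompt)
# 0 cat photo
# 1 cat photo, portrait
# 2 cat photo, portrait, black and white
```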

I described this deliberate process of gradually tweaking prompts as far back as September last year. Much of the advice in that article still stands!

3. Use fewer but more impactful descriptors

Some modifiers have a strong effect on the resulting image all by themselves. Learn how and when to use them.

For Stable Diffusion, you can refer to the “magic” words from the OpenArt prompt book (see Tip #4 in my post).

But resist the temptation to splatterprompt with them. Just because HDR, UHD, 64K, 8K, and 4K are all listed as “magic” modifiers doesn’t mean you should throw them all in. If you’ve added “64K” to your prompt, “8K” and “4K” are redundant. Unnecessary. Unneeded.

For Midjourney, you can follow my monthly “Best Midjourney prompts” series. I specifically focus on short, high-impact modifiers.

4. Focus on scene and subject instead of “quality” tags

“So then, I can’t write long prompts anymore?” you may wonder.

Quite the opposite!

Removing unnecessary splatterprompt elements leaves more space in the prompt to describe your actual vision in greater detail.

If we take the “isometric” modifier from my most recent article and simply ask for a “village,” we get something like this:

Cool isometric look but a rather generic village. If you have a more nuanced vision in mind, describe it.

Watch what happens when I ask for an “isometric medieval village with wooden huts lit up by torches around a blue lake near a forest at twilight”:

A very different feel, right? Note that “isometric” is the only image modifier; the rest is pure scene description.

5. Get inspiration from ChatGPT (or other LLMs)

If you’re stuck trying to describe a scene, ChatGPT can help! I find this especially fun because it combines the powers of two separate AI algorithms.

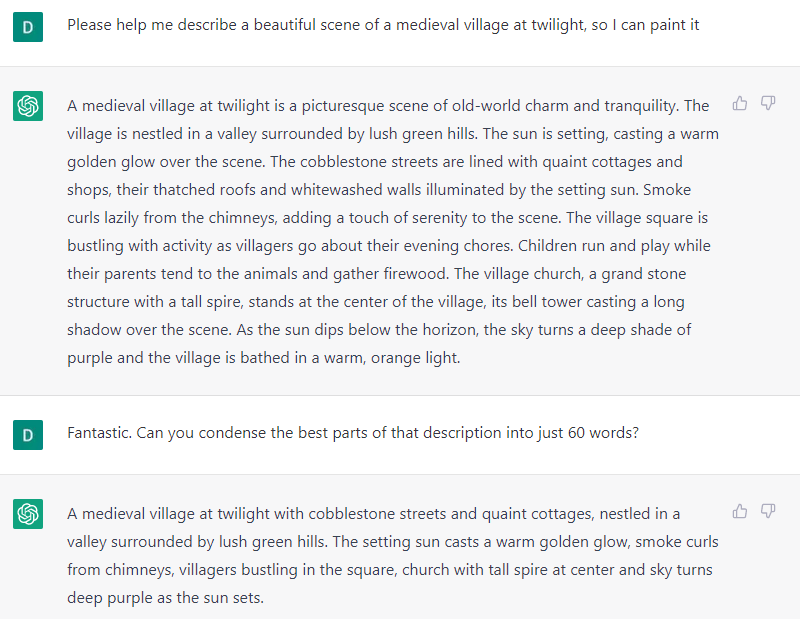

ChatGPT is very good at coming up with descriptions based on your basic idea. You can then plug those straight into Stable Diffusion, DALL·E 2, or Midjourney (just remember the 60-word limit):
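Before pasting a long ChatGPT description into your image generator, it can help to check it against that limit. Here’s a rough Python sketch. `fit_word_limit` is a hypothetical helper, and counting whitespace-separated words is only an approximation of the real limit (a tokenizer gives the exact figure). It also assumes that, as with truncation, the words past the limit are the ones dropped, which is why the key modifier goes first.

```python
def fit_word_limit(description: str, max_words: int = 60) -> tuple[str, int]:
    """Trim text to at most max_words whitespace-separated words.

    Returns the trimmed text and the number of words that were cut off.
    """
    words = description.split()
    kept = words[:max_words]
    return " ".join(kept), len(words) - len(kept)


# Stand-in for a ChatGPT description; the modifier goes first so it can't be trimmed away.
description = "a medieval village with wooden huts lit up by torches around a blue lake"
prompt, cut = fit_word_limit("isometric " + description)
if cut:
    print(f"Trimmed {cut} words; consider shortening the description")
print(prompt)
```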

Using ChatGPT’s prompt in Midjourney along with “isometric” gives us this:

Not too shabby for two AI models working in tandem with minimal human input, eh?

Over to you…

Have you been guilty of splatterprompting? (Don’t worry, I’m sure we’ve all been there at some point.)

What’s your approach to crafting a good prompt and where do you draw inspiration from?

I’m always happy to hear new ideas, so send me an email or leave a comment below.
