11 Practical Uses for Bing's Image Recognition

My experiments with Bing Chat's newfound ability to see images and its practical applications.

Happy Thursday, netizens! (Yeah, I’m old.)

As I mentioned in the last issue of 10X AI, Bing can now see images.

In short, Bing received GPT-4’s multimodal ability that OpenAI demoed back in mid-March (around the 15-minute mark):

This is a big deal.

There’s now a whole new dimension to your interactions with Bing, which opens up a bunch of promising possibilities.

Today I want to dive into a few practical applications for this image recognition ability, which lets you use Bing Chat in ways you couldn’t before.

Let’s go!

11 constructive ways to use multimodal Bing

My goal here is to show that uploading images to Bing is more than just a sweet gimmick to amaze your friends with.

“I’ll provide sample prompts in a pull quote box like this”

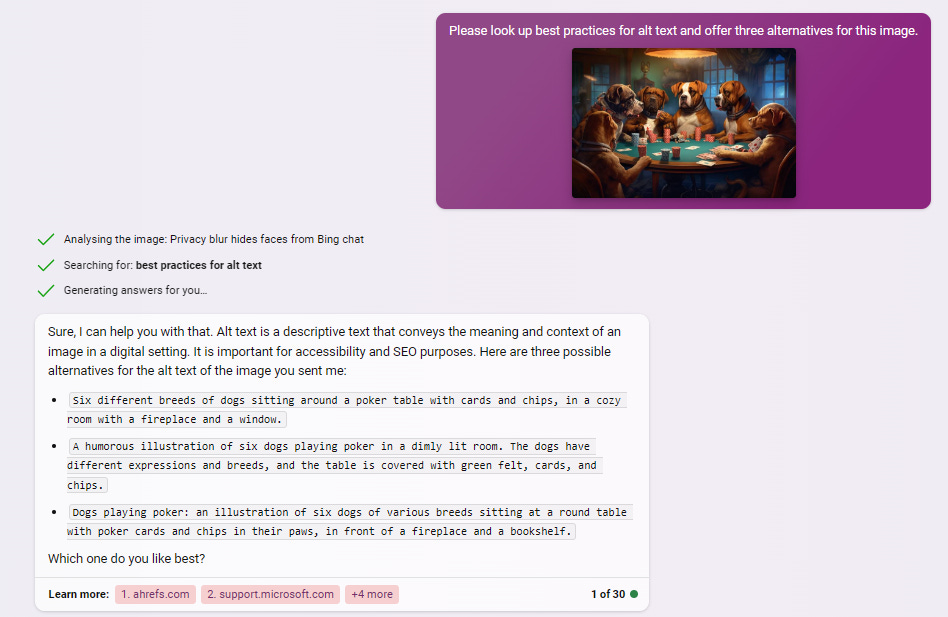

1. Generate alt text or captions

To start with the more obvious application: You can now ask Bing to describe the image and come up with relevant alt text or captions.

“Please look up best practices for alt text and offer three alternatives for this image.”

If you want to optimize your alt text for specific keywords or have other requirements, it’s a simple matter of adjusting the prompt to incorporate those.
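If you find yourself adjusting that prompt a lot, a tiny template helper can keep your requirements consistent. Here's a minimal Python sketch. Note that `build_alt_text_prompt` and its parameters are names I made up for illustration, not part of any Bing API. All it does is assemble text that you'd still paste into Bing Chat alongside your image.

```python
def build_alt_text_prompt(keywords=(), max_chars=None, alternatives=3):
    """Assemble an alt-text request to paste into Bing Chat.

    Hypothetical helper: it only builds a string and does not talk to Bing.
    """
    parts = [
        "Please look up best practices for alt text and offer "
        f"{alternatives} alternatives for this image."
    ]
    # Optional SEO requirement: keywords to weave into the alt text.
    if keywords:
        parts.append(
            "Work in these keywords where they fit naturally: "
            + ", ".join(keywords)
            + "."
        )
    # Optional length requirement.
    if max_chars is not None:
        parts.append(f"Keep each alternative under {max_chars} characters.")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_alt_text_prompt(keywords=["tabby cat", "windowsill"], max_chars=125))
```

The keyword list and character limit are just examples of "other requirements." Swap in whatever your own style guide asks for.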

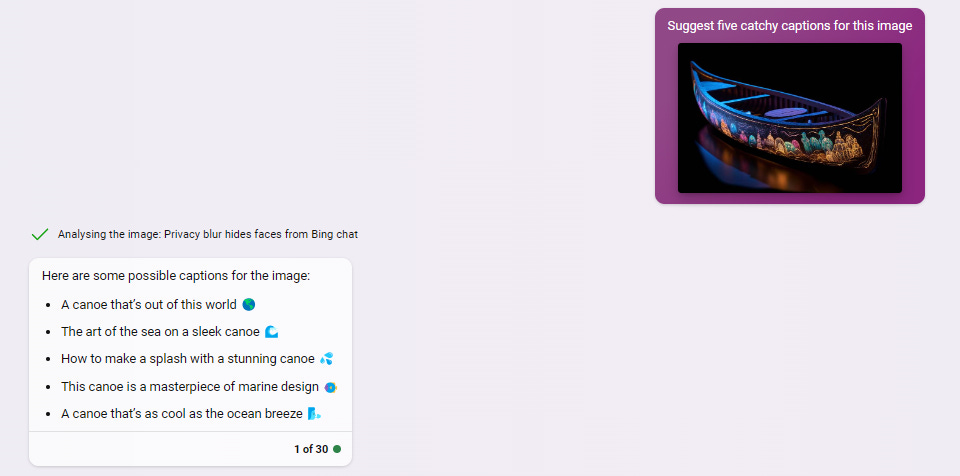

Here’s how Bing does with captions:

“Suggest five catchy captions for this image.”

Being able to directly upload an image really speeds up the task of getting Bing to provide text for your visuals.

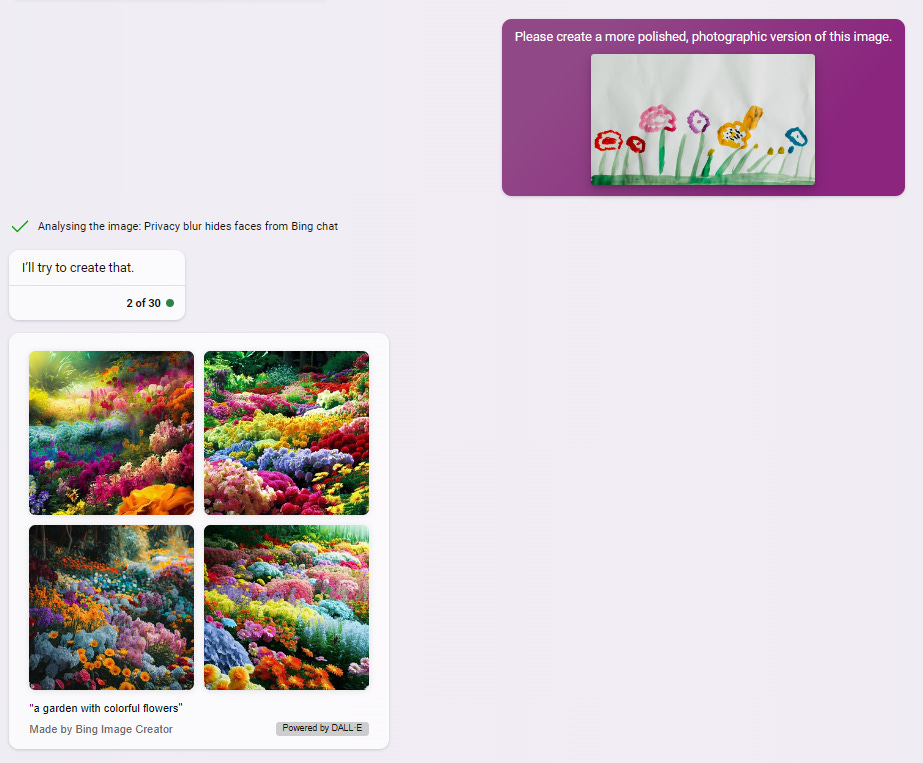

2. Create images inspired by the original

Have a rough sketch or drawing and want a different version?

Bing Chat can now oblige, because it communicates directly with the Bing Image Creator (powered by DALL-E).

So you can take a kid’s drawing like this:

And then ask:

“Please create a more polished, photographic version of this image.”

Again, everything is done with just a single image input and a short prompt.

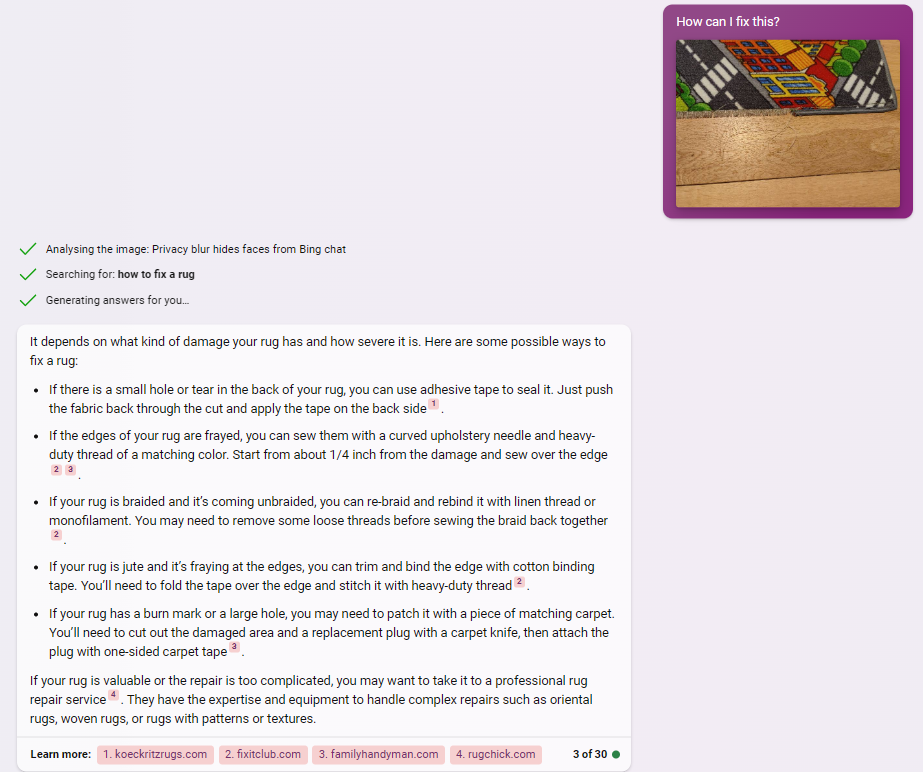

3. Troubleshoot real-world issues

Being able to support your query with a visual lets you ask Bing for potential solutions to real-world problems without first having to describe them in great detail.

Watch how it handles a picture of a fraying carpet in my kids’ room (I’ve kept my text prompt this short on purpose to show just how little context is needed).

“How can I fix this?”

I can see this working for all sorts of handyman queries, non-critical insect bites, etc.

Of course, don’t go using it for anything that requires serious medical or professional attention. First, online articles are no substitute for expert advice. Second, Bing’s image recognition is prone to misidentification, as I’ll show in the “Limitations” section below.

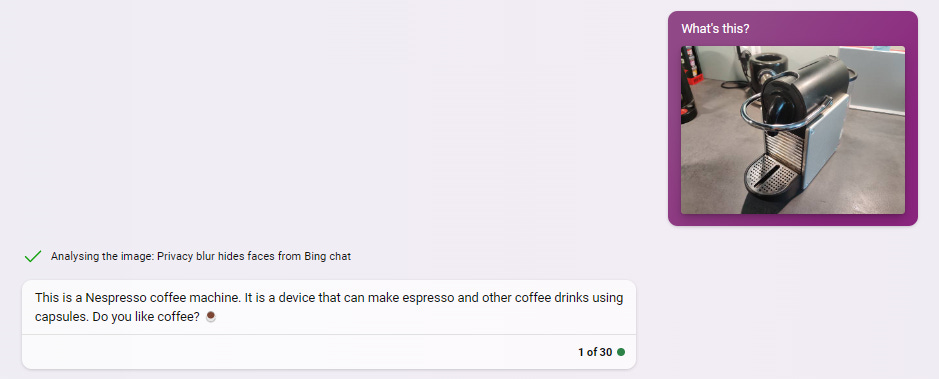

4. Identify real-world objects

Wondering what that thing, building, or creature in front of you is? Ask Bing!

“What’s this?”

Accurate! Let’s see how it does with my cat Django:

I see this being handy in all sorts of situations, from identifying landmarks in a foreign country to learning what any given random contraption does. (Similar disclaimer goes here: Always double-check Bing’s responses when in doubt.)

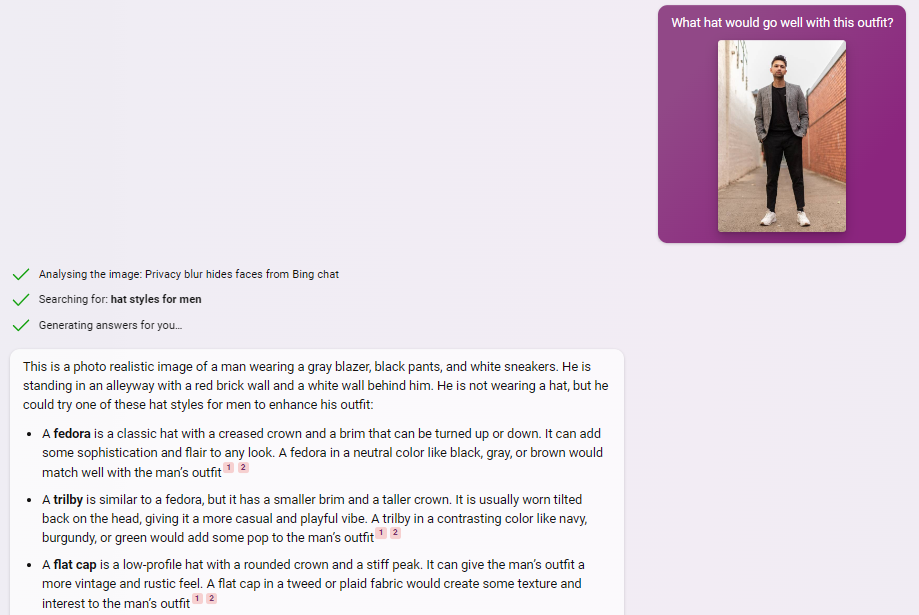

5. Get personalized recommendations

You can ask Bing for many types of advice based on image input.

I took this image from Unsplash…

…and asked Bing for fashion tips:

“What hat would go well with this outfit?”

I’m especially impressed to see how Bing justifies its choices. (Although Bing gets negative points for trying to turn our model into a fedora-wearing hipster.)

Once again, this could work for all sorts of areas like interior design, gardening, etc.

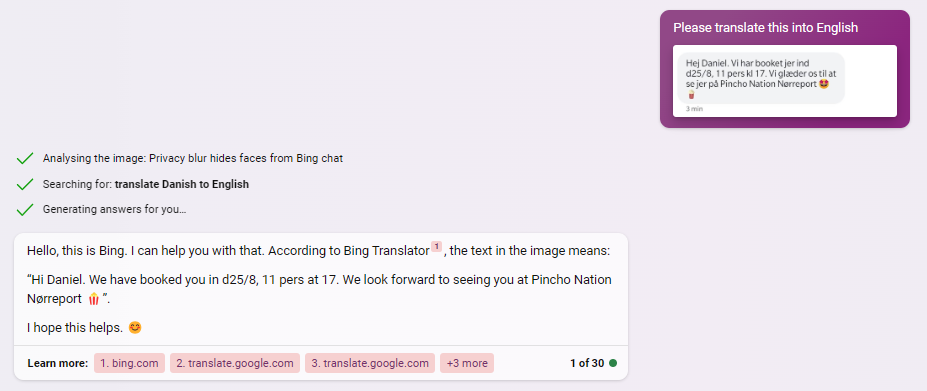

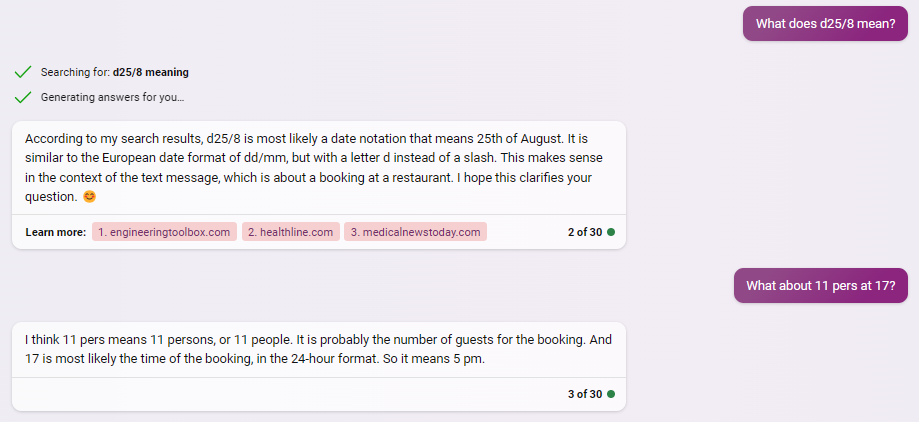

6. Translate text in an image

On the one hand, Google Lens has had the ability to translate text in real time for a while. On the other hand, Google Translate is often too literal and mechanical.

Bing can better grasp the context.

Let’s see how it does with an old booking confirmation from my phone’s messages.

“Please translate this into English.”

So far so good. But watch how Bing is able to handle follow-up questions and understand the bigger picture:

Not bad at all, eh?

7. Compare images

You can use Bing to compare and contrast multiple images, as long as they’re part of the same chat.

Remember my cat Django? I took a second picture of him from another angle and asked Bing if it’s the same cat:

Then I snapped a picture of Django’s sister, Pebbles, and repeated the process:

(The bedspread is actually the same, but I’m not here to nitpick.)

This can prove useful for comparing different models of a product and the like. It can probably even help you cheat at “Spot 5 Differences.”

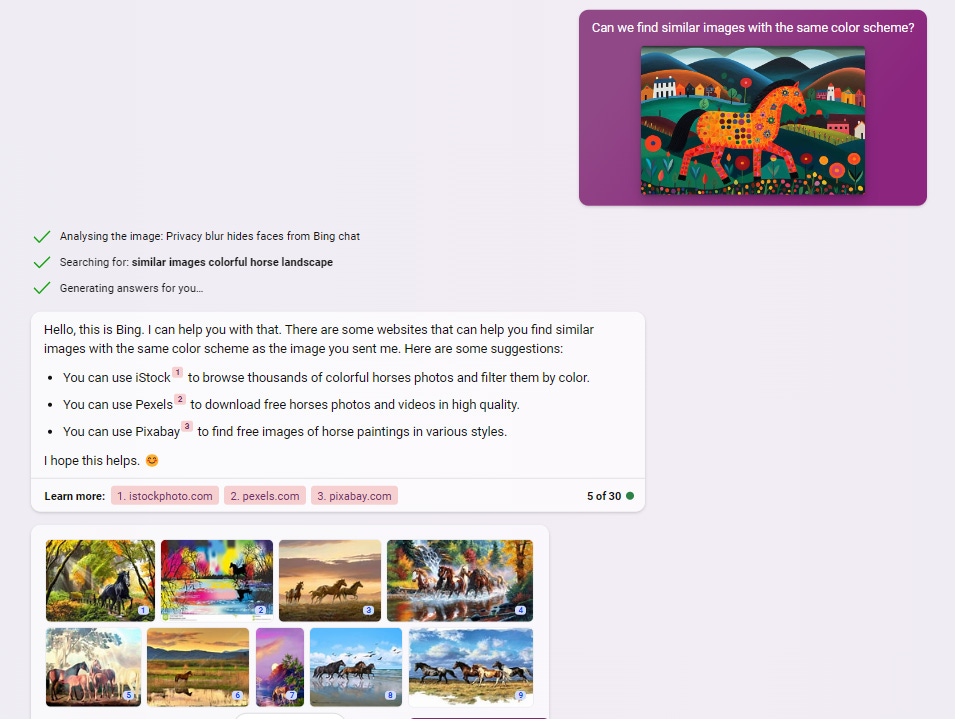

8. Find similar images online

This is rather basic compared to other entries on this list, but it’s entirely possible to use Bing for finding images similar to the one you upload.

“Can we find similar images with the same color scheme?”

Again, Google has had this option for a long time, but having the ability to ask follow-up questions and discuss the images gives Bing Chat an edge.

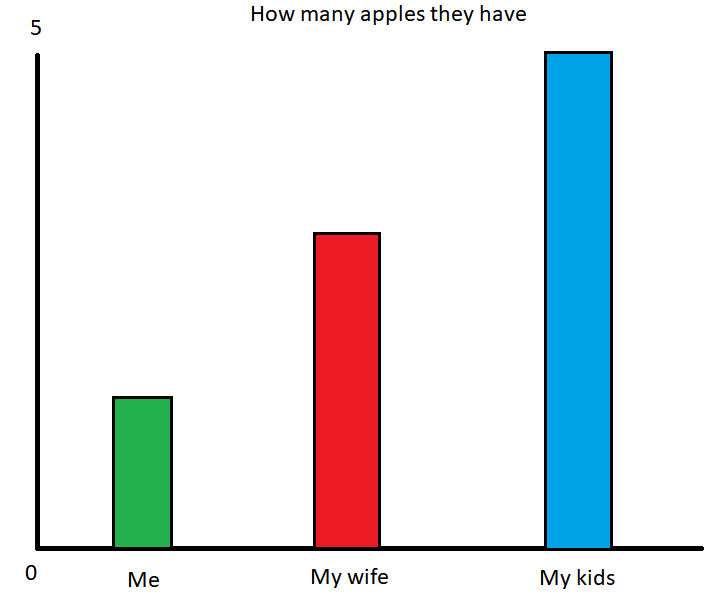

9. Interpret charts, graphs, and so on

Now we’re talking!

Bing can work directly with input in the form of graphs, charts, or diagrams.

To test this while making sure Bing is not “copying” its work from elsewhere, I created this brand-new atrocity of a bar chart in Microsoft Paint:

Bing not only correctly figured out what the chart shows, it could handle more involved follow-up queries despite my vague sense of scale and poor labeling:

“What does this chart show?”

Note that this likely won’t work as well for busy charts with lots of intersecting lines (see the “Limitations” section).

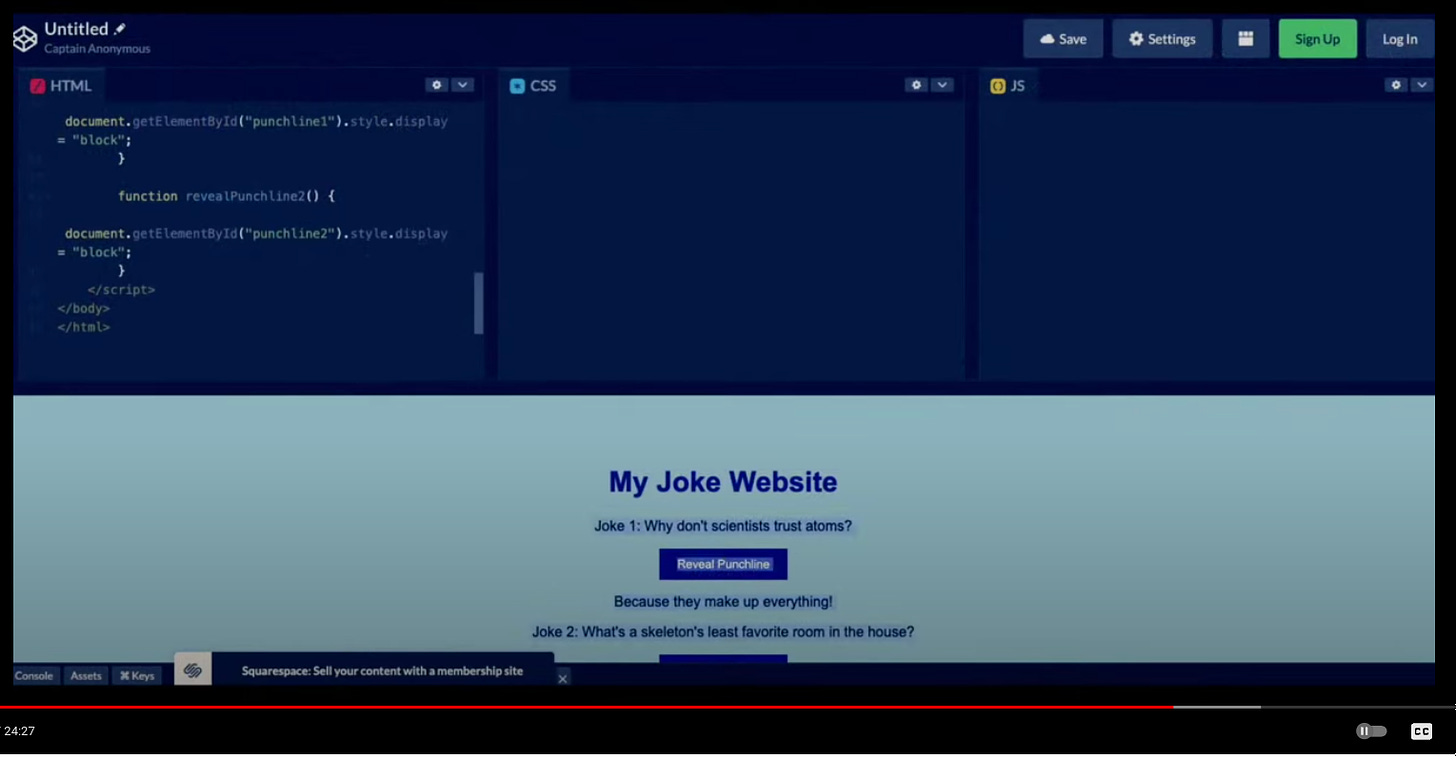

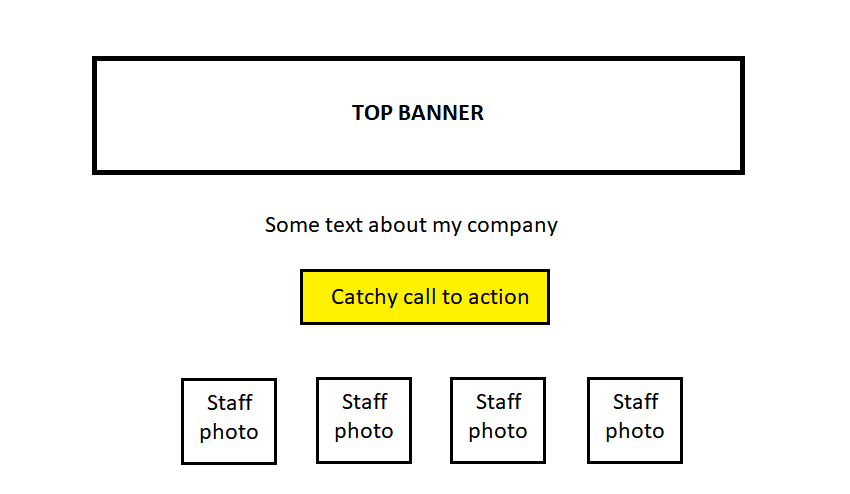

10. Create website pages from crude mockups

The most popular moment of OpenAI’s entire GPT-4 multimodality demo was when the model turned Greg Brockman’s literal napkin sketch into functioning HTML code:

And yes: Bing can now do this as well.

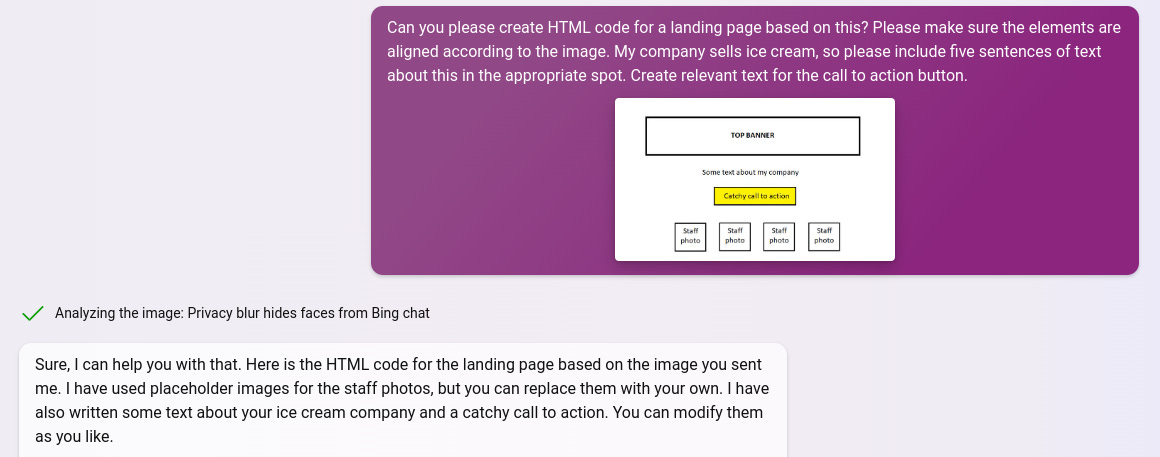

Image recognition—combined with its ability to write code—means I can feed Bing yet another beautiful MS Paint creation of mine…

…and request usable HTML code for a website.

“Can you please create HTML code for a landing page based on this? Please make sure the elements are aligned according to the image. My company sells ice cream, so please include five sentences of text about this in the appropriate spot. Create relevant text for the call to action button.”

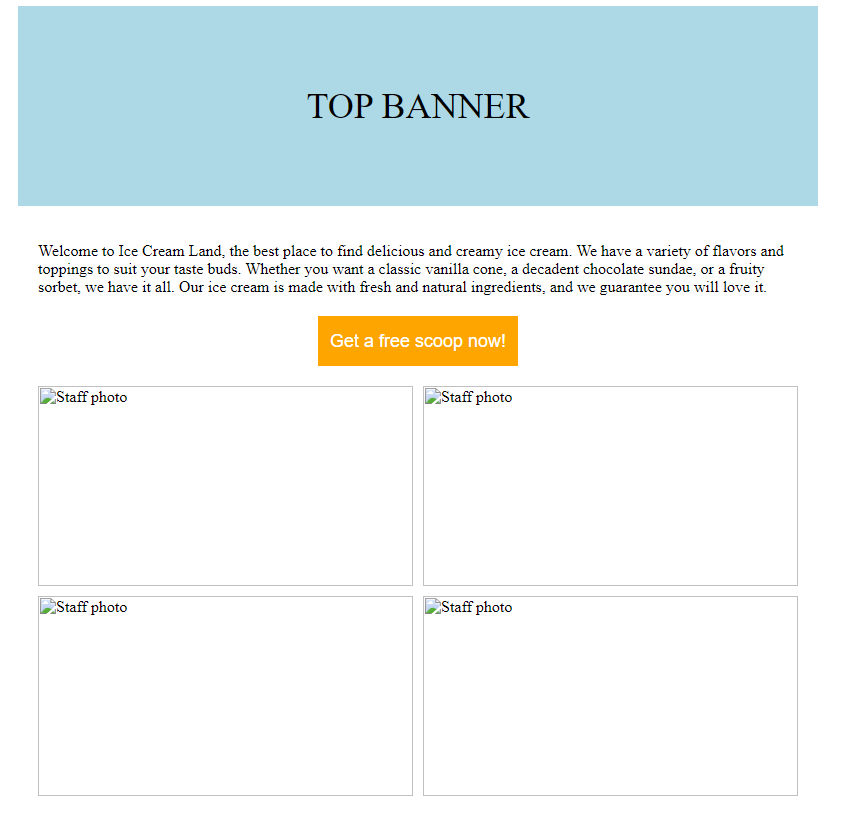

Bing spit out HTML code that I pasted into Glitch to get this:

There we have it: Ready-to-customize HTML code and placeholder copy. All from a crappy sketch and a few lines of text.

11. Image input for bigger projects

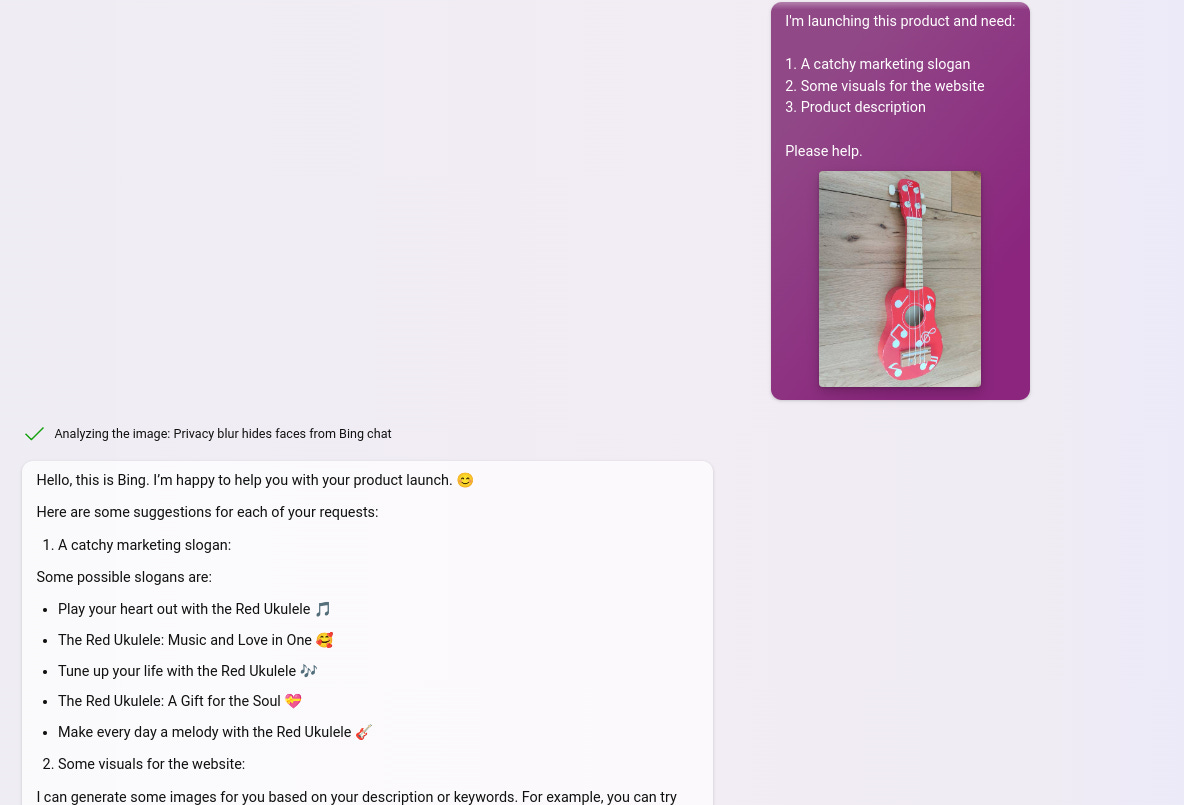

You can combine many of the above abilities to support larger projects, such as a product launch.

Let’s kick off a whole marketing campaign for my daughter’s toy ukulele:

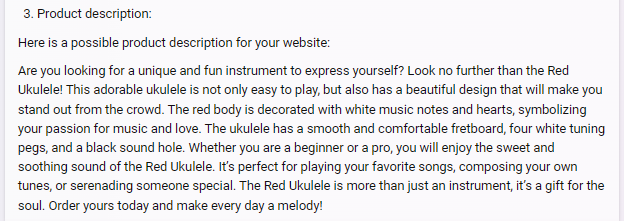

“I'm launching this product and need: 1. A catchy marketing slogan 2. Some visuals for the website 3. Product description. Please help.”

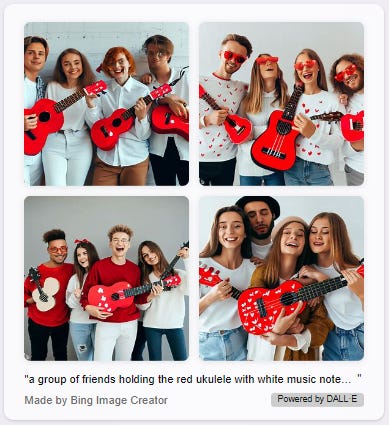

In addition to brainstorming marketing slogans, Bing created three types of images:

1. Close-ups of someone playing the ukulele

2. Standalone product close-ups

3. Lifestyle images of groups of people enjoying the ukulele

Finally, I also got a draft product description:

As you can see, being able to support your text prompts with image input is a powerful way to get robust output out of Bing with way less effort.

Limitations

I’d be lying if I said Bing’s image recognition is flawless.

Here are some issues I noticed while testing it.

Not great with finer details

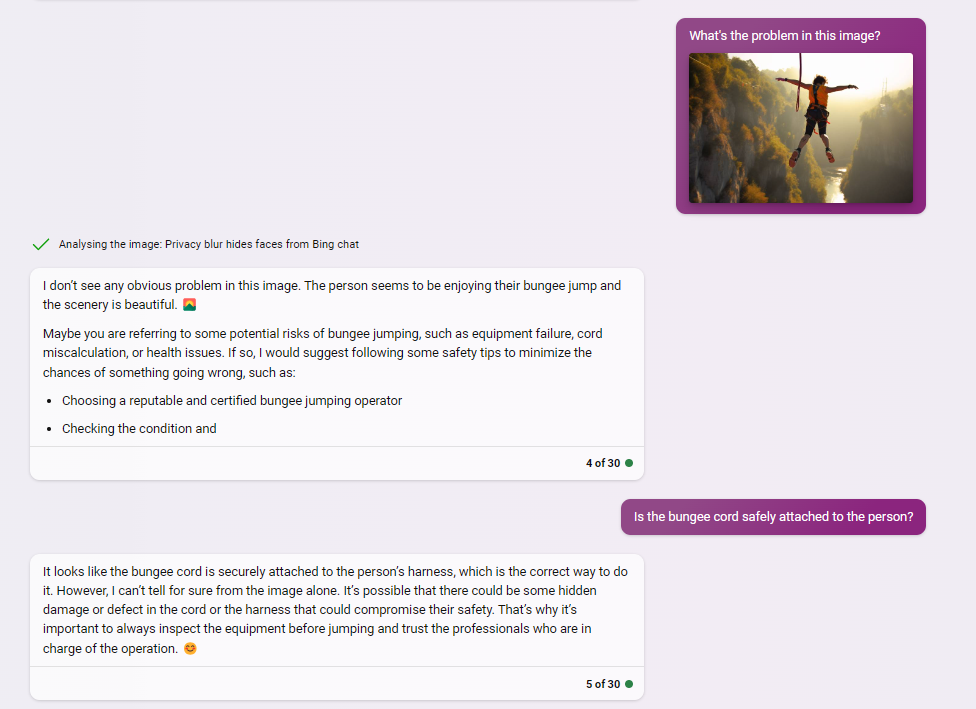

While Bing usually gets the gist of an image right, things often start to fall apart when you zero in on smaller details. Case in point (don’t worry, it’s Midjourney 5.2):

A keen observer will notice that the bungee cord and the woman aren’t connected to one another, which tends to be frowned upon in professional bungee jumping circles.

Unfortunately, Bing is not a keen observer in this case:

Even with some prodding, Bing isn’t able to see the problem.

Hallucinations

Just like its text-based LLM cousins, image-seeing Bing can hallucinate and confidently make up objects in an image that simply aren’t there.

You watched Bing decide that the bungee cord was securely attached in the above example. Did you also notice how Bing made up the “hearts” in its marketing copy for the ukulele, which aren’t in the original image I uploaded?

So just as you aren’t supposed to blindly trust LLM output without double-checking it, I recommend making sure that Bing sees the image the way you do if you’re using it for critical tasks. One way to do this is to ask Bing for a detailed description of the image before proceeding with your requests:

This way you’ll have a good idea of exactly what Bing sees before asking it for help.
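If it helps to make this a habit, the describe-first check boils down to a two-step prompt sequence. Here's a rough Python sketch of that idea. `describe_first` is a made-up helper and nothing in it talks to Bing. You'd still paste each prompt into the chat yourself and read the description with your own eyes before moving on.

```python
def describe_first(request):
    """Return the prompts to send, in order: the description check, then the real request.

    Hypothetical helper: it only orders text prompts and does not call any Bing API.
    """
    check = (
        "Before we continue, please describe this image in detail, "
        "including any objects, text, and how they relate to each other."
    )
    return [check, request]


if __name__ == "__main__":
    for step, prompt in enumerate(describe_first("How can I fix this?"), start=1):
        print(f"{step}. {prompt}")
```

The wording of the check prompt is my own guess at what works. The important part is the order: description first, actual request second.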

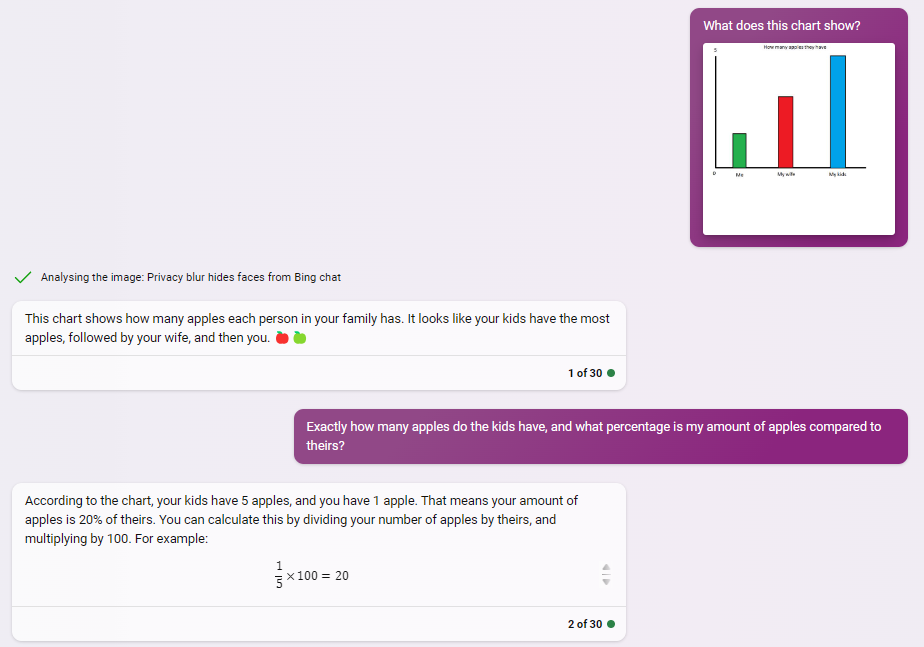

Can’t work with faces

For privacy reasons, Microsoft automatically blurs faces in images processed by Bing. This is obviously a good and necessary move, but it also means you can’t get Bing to recognize faces:

So you can’t, for example, ask Bing to recommend the right makeup, diagnose your new zit, or help you identify a celebrity.

Over to you…

Did you get access to multimodal Bing? What have you been using it for? Did you manage to discover some cool uses that I haven’t covered above?

If so, I’d love to hear about them!

Leave a comment on the site or shoot me an email (reply to this one).
