DeepSeek-R1: The Free o1 Alternative

How to use the new DeepSeek-R1 model and how it compares to o1.

Hot Takes are occasional, timely posts that focus on fast-moving news and releases. They come in addition to my regular Thursday and Sunday columns.

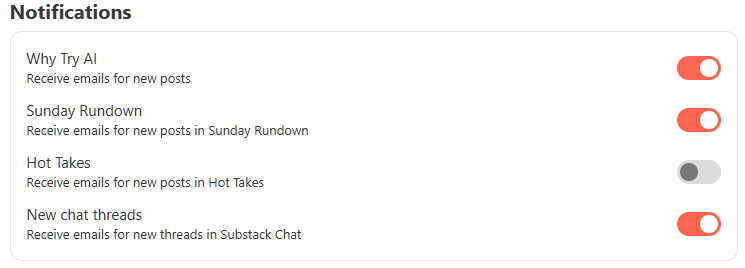

If Hot Takes aren’t your cup of tea, simply go to your account at www.whytryai.com/account and toggle the “Notifications” settings accordingly: