No, DeepSeek’s Janus-Pro-7B Doesn’t Make Better Images Than DALL-E 3.

DeepSeek is an incredible AI lab, but let's not elevate it to godlike status just yet.

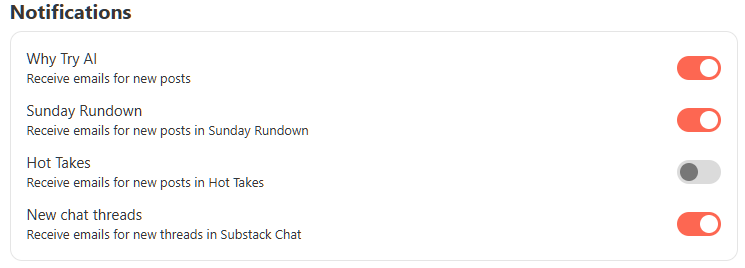

Hot Takes are occasional timely posts that focus on fast-moving news and releases, in addition to my regular Thursday and Sunday columns.

If Hot Takes aren’t your cup of tea, simply go to your account at www.whytryai.com/account and toggle the “Notifications” settings accordingly:

TL;DR

DeepSeek released an image model called Janus-Pro-7B, which some claim makes better images than DALL-E 3 and Stable Diffusion, but in my tests it comes nowhere near either.

What is it?

DeepSeek describes Janus-Pro-7B as “a novel autoregressive framework that unifies multimodal understanding and generation.”

In short, this means you can use the same model to process image inputs and generate new images. This makes Janus-Pro-7B quite flexible, combining the capabilities of several task-specific models into one.

That—and the fact that it’s yet another open-source model—is worthy of praise.

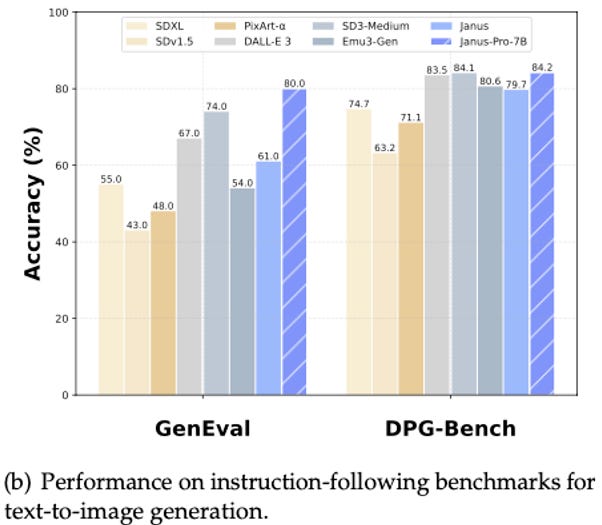

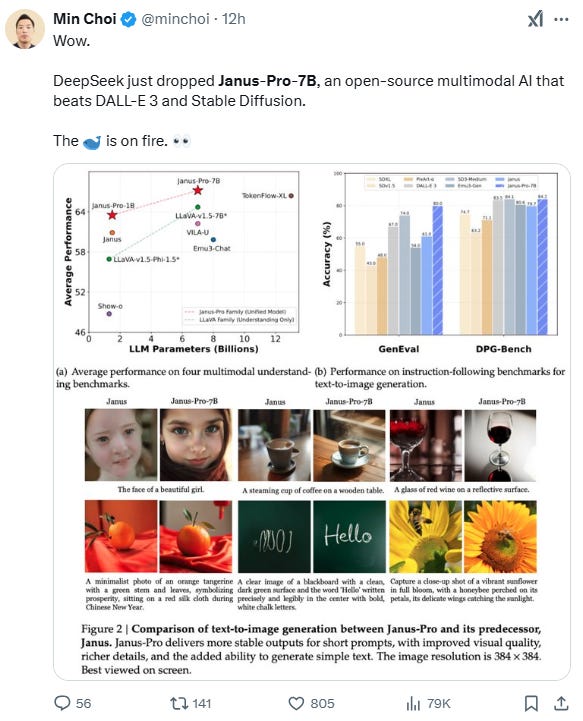

But DeepSeek also shared a few benchmarks that show Janus-Pro-7B outperforming OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion 3 Medium.

Here’s the thing: GenEval and DPG-Bench are benchmarks that measure prompt adherence—how well a model follows directions in the text prompt.

They say absolutely nothing about the aesthetic quality of the model.

Still, this didn’t stop the Internet from instantly proclaiming Janus-Pro-7B the new king of images.

And while better prompt adherence is nothing to sneeze at, you also want your image model to create something that looks good.

Unfortunately for Janus, most of what it generates is hot garbage.

Let me show you.

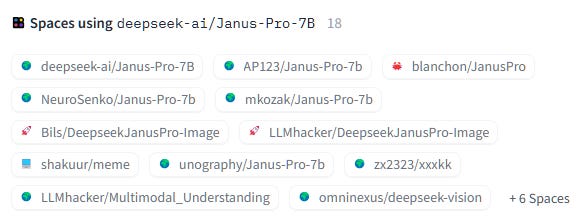

How do you use it?

To test Janus-Pro-7B for yourself, head on over to its Hugging Face page and select any of the “Spaces” using the model, listed on the right-hand side.

I’ll go with the official deepseek-ai/Janus-Pro-7B. Here’s my 2-minute walkthrough:

If you saw the video, you’ll know that Janus-Pro-7B:

- Hallucinates nonexistent details in uploaded images.
- Creates new images that look like Lovecraftian horrors.

Here are three sample prompts and side-by-side comparisons against DALL-E 3 and Stable Diffusion 3, the models DeepSeek chose to benchmark against:

Prompt: “photo of a samurai in a traditional outfit holding a sci-fi blaster, futuristic skyscrapers with neon signs in the background”

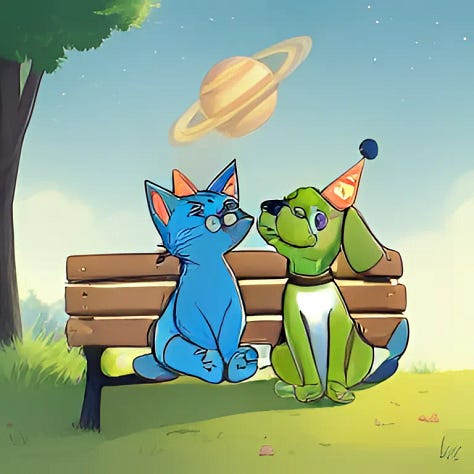

Prompt: “cartoon illustration of a blue cat and a green dog wearing party hats, sitting on a park bench and looking up at Saturn”

Prompt: “a cute purple robot holding up a cardboard sign that reads ‘I can spell better than you!’”

Janus, how do I put this mildly, sucks ass.[1]

If I’m being generous, I’ll put Janus on par with Midjourney V2. (Reminder: We currently have Midjourney V6.1, with V7 set to come out soon.)

Curiously, DeepSeek chose to benchmark against the outdated DALL-E 3 and Stable Diffusion 3 Medium instead of newer, better image models, many of which also beat DALL-E 3 at prompt adherence and spelling (see my recent test).

Why should you care?

DeepSeek released the truly impressive DeepSeek-R1 reasoning model just a week ago. Since then, this Chinese AI lab has been the talk of the town, getting much-deserved praise for successfully competing with large, high-profile US labs on a “shoestring budget.”

Not only that, but DeepSeek is also seen as more open and transparent:

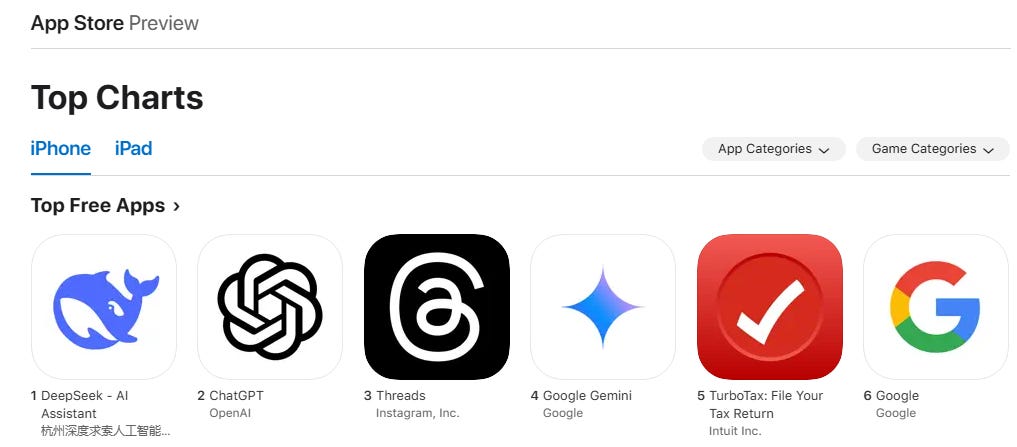

DeepSeek got so much attention that it skyrocketed to the #1 free app spot on the App Store.

Everybody loves DeepSeek.

So much so that we’re now doing that classic thing where we put a company on a pedestal and automatically give it a free pass.

Let’s not do that.

With DeepSeek feeding so much discourse, we’ll likely see even more hype around it. Some well-earned, some less so.

As always, take it all with a grain of salt and test things yourself where possible.

I certainly can’t rule out that DeepSeek will eventually release competitive image and even video models. If anything, last week has shown it to be an astoundingly capable AI lab.

But let’s not rush to find a new AI darling we can blindly idolize.

🫵 Over to you…

What’s your take? Am I being unreasonably nitpicky? Have you tested Janus and found its multimodality especially helpful for certain use cases?

Leave a comment or drop me a line at whytryai@substack.com.

[1] This Reddit thread agrees with me.

I had the same thought about R1, although I know there's at least some fire to this smoke.