Claude 3.7 Sonnet: Fantastic Model Held Back by Lack of Native Internet Access

The new SOTA model from Anthropic could really benefit from web browsing.

TL;DR

Anthropic just launched the impressive Claude 3.7 Sonnet, but it’s still locked inside the old interface without built-in access to the web.

What is it?

Claude 3.7 Sonnet is a new state-of-the-art model that incorporates a traditional LLM and a reasoning model in one.

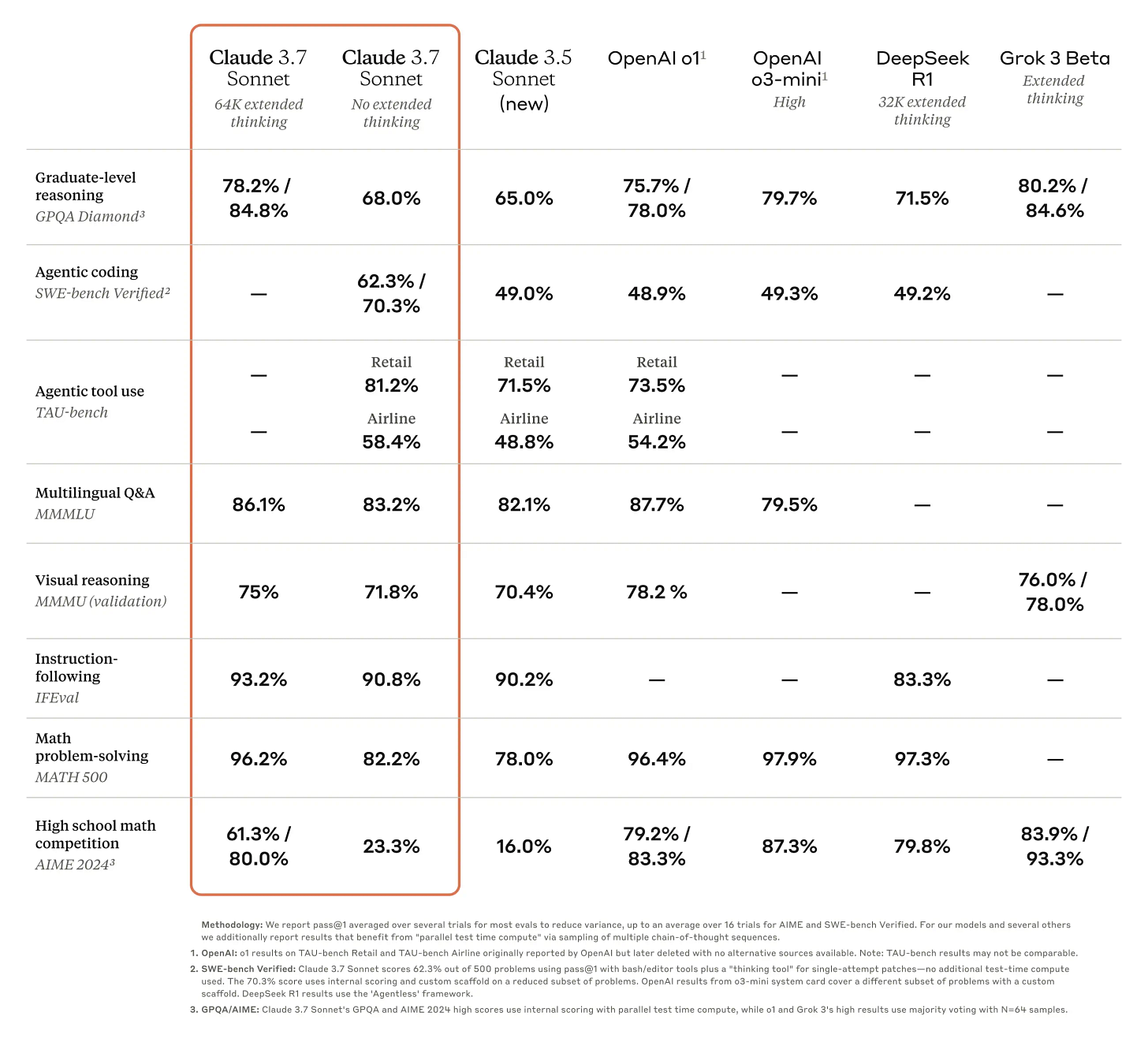

It goes toe-to-toe with or outperforms frontier models like Grok 3, DeepSeek-R1, and OpenAI’s o-family on most benchmarks.

How do you use it?

Simple: Just go to the usual claude.ai website.

If you’ve never used Claude before, you’ll need to create an account.

If you have, Claude 3.7 Sonnet will now be the default model.

From there, you know what to do: you’ve seen plenty of these chat interfaces before.

Anthropic calls Claude 3.7 Sonnet “the first hybrid reasoning model on the market.”

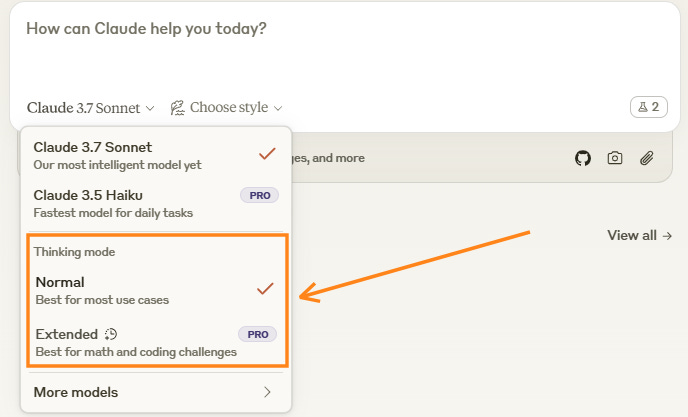

If I’m honest, I’m somewhat puzzled by the way this is currently handled.

To me, the word “hybrid” entails a single model that independently switches between standard and reasoning modes without the user’s involvement.

But Anthropic still presents us with a manual thinking mode selection in its interface.

Grok has a “Think” option that does the same.

And here’s OpenAI’s version, which currently lets free users tap into o3-mini.

The way I interpret this is that Claude 3.7 Sonnet has a “basic” thinking mode for free users and a “super” thinking mode reserved for Pro accounts.

So this dropdown decision is driven by marketing considerations.

Nevertheless, I find it unnecessarily confusing to have dropdown selections for an ostensibly hybrid model.

Anthropic did kind of beat OpenAI to the punch here, as Sam Altman recently announced that OpenAI is moving in a similar direction: a unified system without model pickers.

By early accounts, Claude 3.7 Sonnet is fantastic, especially when it comes to coding.

Yet I can’t help but feel that Anthropic is shooting itself in the foot by not giving it Internet access.

Why should you care?

Having a cutting-edge model that can’t browse the web is a bit like taking a genius scientist to a world-class research lab and then shackling them to a corner table next to a bunch of dusty encyclopedias.

Claude can’t look up information about new coding tools and features or visit troubleshooting forums. It can’t apply its reasoning skills to real-time developments or read newly released scientific papers.

All of this might make Claude 3.7 Sonnet less competitive against otherwise weaker models that have direct Internet access.

Of course, this is mostly an issue for regular users, since developers can always use the API to incorporate Claude into existing, web-enabled applications. There are also ways to give Claude indirect web access via tool use or third-party solutions.
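For developers, the tool-use route works roughly like this: you describe a search tool to Claude, Claude replies with a `tool_use` request, and your own code runs the search and sends the results back as a `tool_result`. Here’s a minimal Python sketch of that round trip. It only builds the JSON payloads, so it runs without an API key or network access. The `web_search` tool, the `fake_search` backend, and the helper function names are hypothetical; the request and block shapes follow Anthropic’s Messages API as I understand it, so check the official docs before relying on them.

```python
import json

# Hypothetical "web_search" tool. The Messages API expects each tool to have a
# name, a description, and a JSON Schema under "input_schema"; the specific
# name and fields here are illustrative assumptions.
WEB_SEARCH_TOOL = {
    "name": "web_search",
    "description": "Search the web and return short text snippets for a query.",
    "input_schema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "What to search for"}
        },
        "required": ["query"],
    },
}


def build_request(question: str) -> dict:
    """Assemble a Messages API request body that offers Claude the search tool."""
    return {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": 1024,
        "tools": [WEB_SEARCH_TOOL],
        "messages": [{"role": "user", "content": question}],
    }


def answer_tool_calls(content_blocks: list, search_fn) -> list:
    """For each web_search tool_use block in Claude's reply, run the search
    yourself and wrap the result as a tool_result block for the next user turn."""
    results = []
    for block in content_blocks:
        if block.get("type") == "tool_use" and block.get("name") == "web_search":
            snippets = search_fn(block["input"]["query"])
            results.append({
                "type": "tool_result",
                "tool_use_id": block["id"],
                "content": json.dumps(snippets),
            })
    return results


if __name__ == "__main__":
    # Stand-in for a real search backend (hypothetical).
    def fake_search(query):
        return [f"Result for: {query}"]

    # A made-up reply in the shape Claude uses when it asks to call a tool.
    reply = [
        {"type": "text", "text": "Let me look that up."},
        {"type": "tool_use", "id": "toolu_01", "name": "web_search",
         "input": {"query": "Claude 3.7 Sonnet release notes"}},
    ]
    print(json.dumps(build_request("What's new in Claude 3.7 Sonnet?"), indent=2))
    print(answer_tool_calls(reply, fake_search))
```

In a real app, you’d send `build_request(...)` through the API, pass the returned content blocks to `answer_tool_calls`, and append the results as a new user message so Claude can finish its answer. The search itself is entirely up to you, which is exactly why this counts as “indirect” web access.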

Still, Anthropic could turn Claude 3.7 Sonnet into an even more appealing package by granting it out-of-the-box Internet access. Hell, I might even be persuaded to switch from my ChatGPT Plus account if that happens.

To be sure, I’ve been quite skeptical about AI search before.

But we’re starting to see impressive products like OpenAI’s “Deep Research,” which make up for the inherent issues of AI web browsing with their ability to reason through problems and identify quality sources.

Now, I’m not saying that we need yet another Deep Research product on the market.

But I’m also not not saying that.

🫵 Over to you…

Have you had the chance to try Claude 3.7 Sonnet for yourself? What have been your early impressions? I’d love to hear if there are specific use cases in your life that Claude 3.7 Sonnet has been especially well-suited for.

Also: Do you consider the lack of web access as much of a drawback as I do? Or is it pretty negligible, as far as you’re concerned?

Leave a comment or drop me a line at whytryai@substack.com.

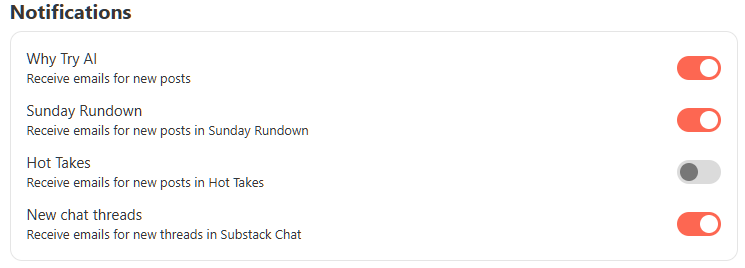

Hot Takes are occasional timely posts that focus on fast-moving news and releases, in addition to my regular Thursday and Sunday columns.

If Hot Takes aren’t your cup of tea, simply go to your account at www.whytryai.com/account and toggle the “Notifications” settings accordingly.

Another point: I think the extra friction of not having internet access is actually helpful for users. It reduces how much information gathering and synthesis we offload, or at least makes offloading a bit more challenging, and I think that might actually be better for human users in the long term.

I don't want a future where I'm just validating AI outputs after it's done all the cognitive work for me. That's pretty dystopian, in my opinion.

https://www.microsoft.com/en-us/research/uploads/prod/2025/01/lee_2025_ai_critical_thinking_survey.pdf

My early testing has me thinking it might win me back. Lately I've been setting Claude aside in favor of other new models and features, like ChatGPT's Deep Research and Grok.

I would like to see internet access at some point; it seems pretty standard by now. In the meantime, regular users can work around this by using something like ChatGPT or Perplexity and NotebookLM to build briefs from online sources, then feeding those into Claude's project knowledge. That gives you full control over the sources it draws on, which is often better than just letting it browse the web anyway.

Oh, and the visuals and tables it creates within articles are pretty damn good.