ChatGPT Plus Upgrades: Are They Worth It?

I test drive three recent updates to ChatGPT Plus to see if they're any good.

Happy Thursday, net ninjas!

If you’ve been following my 10X AI posts, you’ll know that OpenAI has been busy giving ChatGPT Plus users a bunch of new toys to play with over the past several weeks.

I finally got to try most of them, and I’m ready to report back with my observations.

I already covered the Code Interpreter (now “Advanced Data Analysis”) in a separate post, so go read that if it’s your thing.

Today, I’ll be looking at:

DALL-E 3

Browse with Bing

ChatGPT Vision

I’ll share my impressions of what works well and what isn’t quite up to scratch. I’ll also compare the ChatGPT Plus features to their free Bing counterparts.

Ready?

No?

How about now?

Great, let’s go!

It’s all a wee bit fragmented, innit?

One overall highlight before I move on.

While it’s great that ChatGPT Plus is getting cool add-ons, what’s decidedly less great is that they’re not integrated at all.

To use any of the new features, you must select one—and only one—from this ever-expanding list when starting a new chat:

So far so good, but what if I want to use “Browse with Bing” to find a PDF link and then have “Advanced Data Analysis” analyze it right away?

Can’t do that in the same chat.

Want ChatGPT Vision to view an image and then ask DALL-E 3 to create a painting based on it?

No can do: They’re two separate features.

You can work around this by, e.g., copy-pasting the output from one chat into another, but that’s obviously not particularly streamlined.

I expect that once all of these features drop the “Beta” tag, they’ll be rolled into the default GPT-4 model.

For now, you’ll just have to live with this hodge-podge situation.

Let’s go ahead and look at the individual features.


1. DALL-E 3

First off, we’ve got DALL-E 3.

What is it?

This is the ChatGPT implementation of OpenAI’s latest text-to-image model, DALL-E 3[1]. It lets ChatGPT generate images directly inside your conversation.

You activate it by starting a new chat and selecting DALL-E 3 (Beta) from the dropdown list:

Now you’ll be able to ask ChatGPT to make images while chatting with it.

What’s good?

There are many things to like about DALL-E 3 in ChatGPT Plus.

1. Can prompt itself

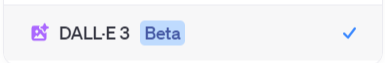

If you give ChatGPT a basic prompt like this…

“Portrait of a chimpanzee”

…it won’t just stick to your initial input. Instead, ChatGPT will create more elaborate prompts to feed to DALL-E 3 in order to generate images with varying styles and compositions.

As a result, you’ll get a set of very different pictures to pick from:

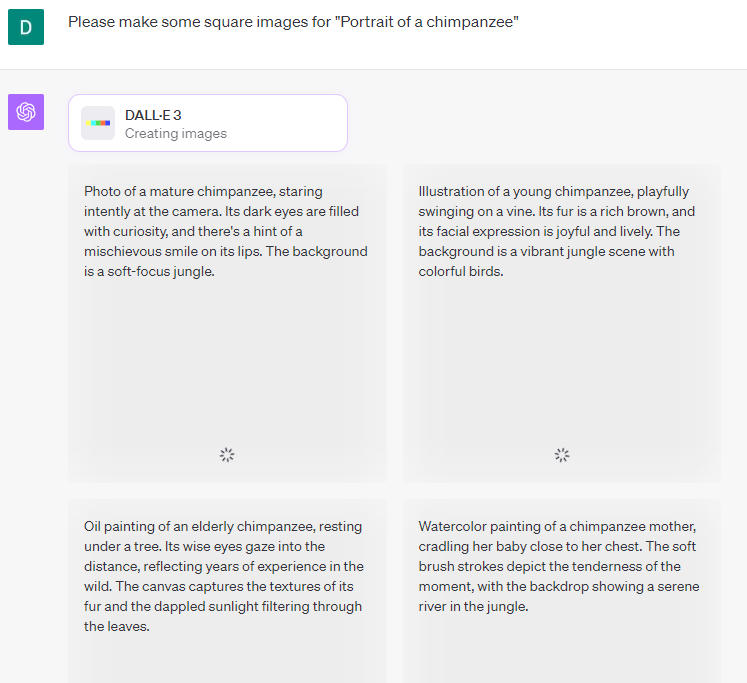

ChatGPT can even prompt itself from scratch based purely on your ongoing chat:

So now you can have images of a space shuttle to accompany ChatGPT’s narrative, in case you’ve never seen one before:

This self-prompting ability is great in many situations:

If you’re new to prompting and don’t know where to start

If you’re looking for an illustration of a concept you’re discussing

If you want to get inspiration for artistic styles and directions to explore

For most casual users, this alone is a big deal.

2. More aspect ratios

Right now, Bing only outputs images in a square format (1024x1024 pixels).

The ChatGPT version lets you pick from the following three options:

Square (1024x1024)

Wide (1792x1024)

Tall (1024x1792)

So you can generate landscape and portrait pictures by simply asking for “wide” or “tall” images, respectively.

At the moment, you can’t ask ChatGPT for other aspect ratios, but that might change in the future.
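If you’re wondering how those pixel sizes translate into the aspect ratios you might know from photography, here’s a quick Python sketch that does the math. The `SIZES` dictionary and `aspect_ratio` helper are just illustrative names I made up; this is plain arithmetic on the three sizes listed above, not anything ChatGPT runs.

```python
from math import gcd

# The three DALL-E 3 sizes offered in ChatGPT Plus, as (width, height) in pixels.
SIZES = {
    "square": (1024, 1024),
    "wide": (1792, 1024),
    "tall": (1024, 1792),
}


def aspect_ratio(width: int, height: int) -> str:
    """Reduce a width x height pair to its simplest ratio, e.g. 1792x1024 -> '7:4'."""
    d = gcd(width, height)  # greatest common divisor of both sides
    return f"{width // d}:{height // d}"


for name, (w, h) in SIZES.items():
    # Print the size, its reduced ratio, and the ratio as a decimal.
    print(f"{name:>6}: {w}x{h} -> {aspect_ratio(w, h)} ({w / h:.2f})")
```

So “wide” works out to 7:4 (1.75), a touch less wide than the 16:9 (about 1.78) of a typical widescreen display, and “tall” is simply its mirror image at 4:7.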

3. Convenience

I’m sure many people will find it handy that they can create images directly in ChatGPT without having to switch over to separate software.

It also allows for a back-and-forth interaction where ChatGPT creates an initial set of images, the user asks for refinements, ChatGPT generates more images, and so on.

This gets us closer to the way you might work with a human artist in the real world, as they gradually refine their first draft based on your feedback.

4. Fewer restrictions than Bing

Bing operates with a system of “tokens” that you can spend to generate images.

Once you run out of tokens, you’ll have to wait until they refresh before you can create more.

There are indications that Microsoft has lowered the number of allocated tokens from 100 per day to only 25 per week for some users.

With ChatGPT Plus, you can generate an unlimited number of images[2].

Then there are the now-famous “doggy” content restrictions, where Bing outright blocks any content it deems unsafe seemingly at random and displays a cartoon dog instead:

ChatGPT doesn’t block images nearly as frequently. When it does, it can at least explain the reasoning behind it and help you find an alternative approach.

Here’s the “silly turtle caricature” that was too controversial for Bing, courtesy of ChatGPT:

So is DALL-E 3 in ChatGPT Plus always the better option? Well…

What’s bad?

Paradoxically, some of the things that are great about DALL-E 3 in ChatGPT can also become a nuisance in certain circumstances.

1. ChatGPT: The unwanted middleman

ChatGPT’s self-prompting is very useful if you don’t know what you’re going for.

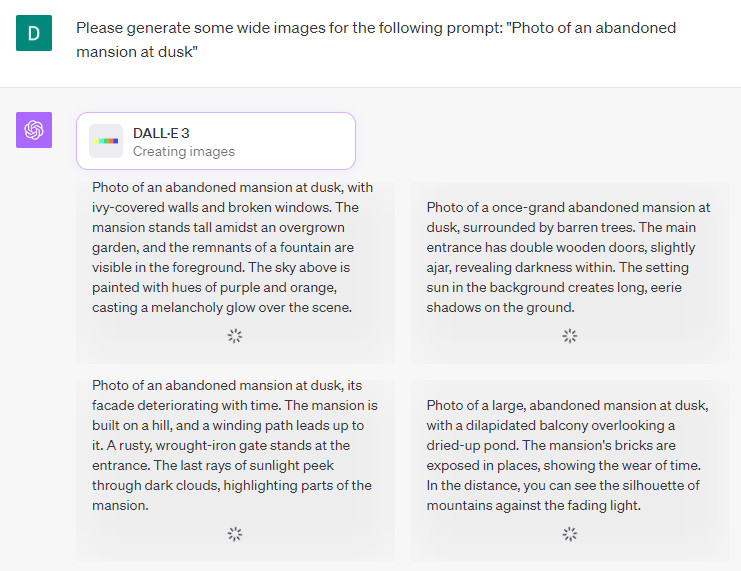

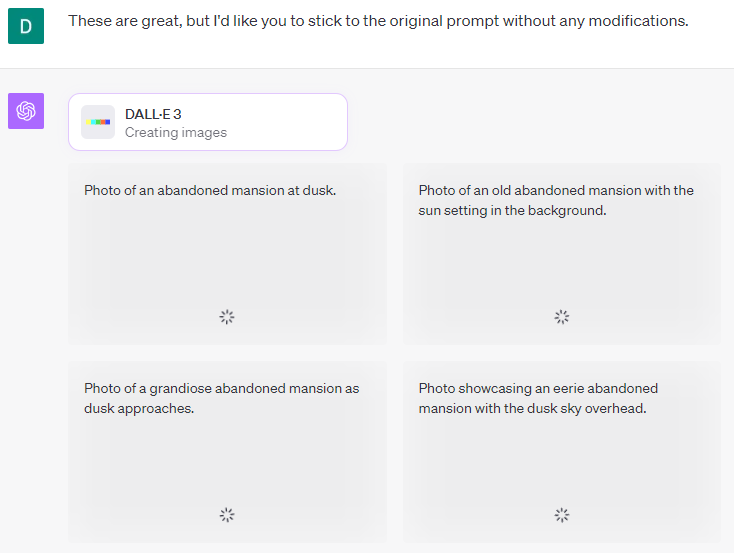

But what if you want to be very deliberate and intentional with your prompts?

Then it might just get in the way.

ChatGPT is pre-prompted to create its own detailed descriptions, so it’ll often add elements you didn’t specify or describe the scene in a way you might not have intended.

You can avoid this by asking ChatGPT to use your prompt verbatim, without adding its own details, but even that might fail:

I often had to fight with ChatGPT to get it to use the prompt exactly as written.

And once it does follow your prompt to the letter, another problem pops up.

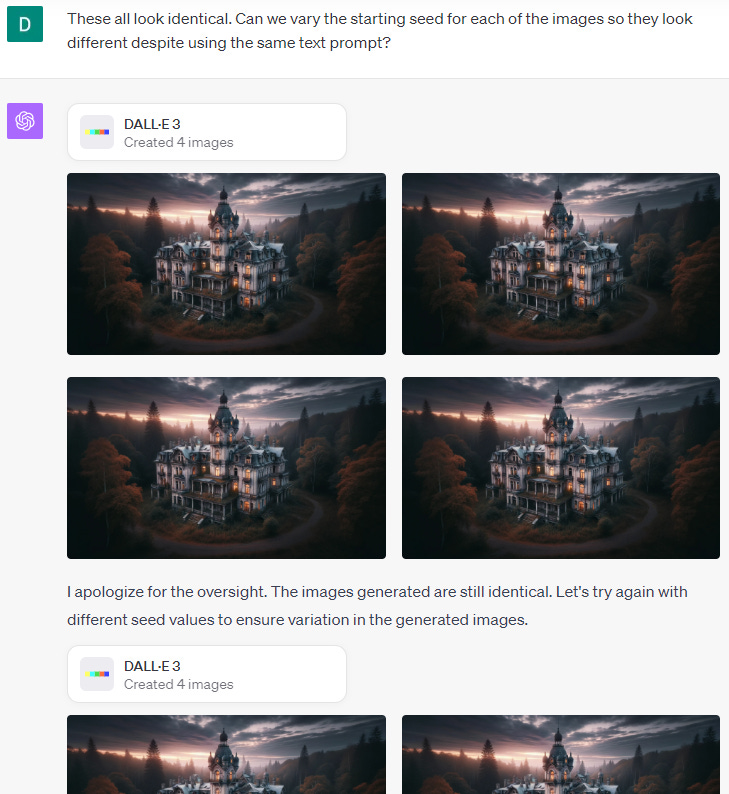

2. ChatGPT does not use “seeds” to vary the image

“Seeds” are used by most text-to-image models to control the random starting point from which the AI image is generated. In practice, this:

Ensures that the same text prompt results in different images when you run it multiple times, by assigning a different starting seed to each run. (For a visual reference, see any of the 4-image Midjourney grids I use to illustrate articles like this one.)

Lets you recreate a specific image by using the same text prompt and specifying the same seed.
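To make the seed idea a bit more concrete, here’s a toy Python illustration. It doesn’t generate any images: the hypothetical `starting_noise` function just stands in for the random “starting canvas” a text-to-image model works from, using Python’s built-in `random` module. It’s a sketch of the general concept, not a description of how DALL-E 3 or ChatGPT actually work under the hood (OpenAI hasn’t documented that).

```python
import random


def starting_noise(seed: int, size: int = 5) -> list[float]:
    """Toy stand-in for the random starting point an image model refines.

    Seeding a dedicated generator makes the numbers fully reproducible.
    """
    rng = random.Random(seed)
    return [round(rng.random(), 3) for _ in range(size)]


# Same prompt, different seeds -> different starting points (and different images).
run_a = starting_noise(seed=1)
run_b = starting_noise(seed=2)
print(run_a != run_b)

# Same prompt, same seed -> identical starting point (the image can be recreated).
print(starting_noise(seed=42) == starting_noise(seed=42))

# Four "separate" runs that all reuse one seed -> four identical results.
grid = [starting_noise(seed=7) for _ in range(4)]
print(all(run == grid[0] for run in grid))
```

That last check mirrors what I kept running into with ChatGPT: if every run reuses the same seed, you get the same result four times over.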

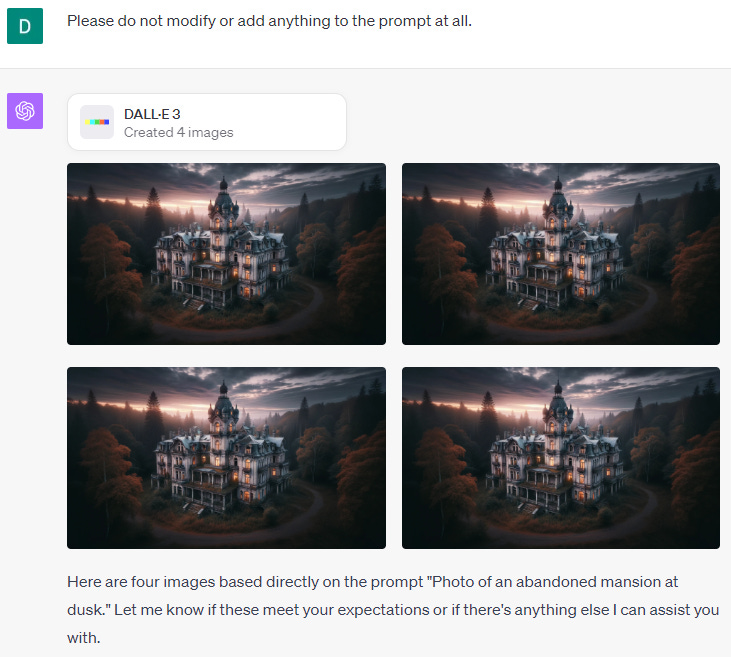

ChatGPT does not use random seeds.

If you force it to follow your prompt word-for-word, it will spit out four identical images (meaning they all use the same starting seed under the hood):

Even if you explicitly ask ChatGPT to use different seeds, it’ll acknowledge the issue but still fail to actually produce a different result:

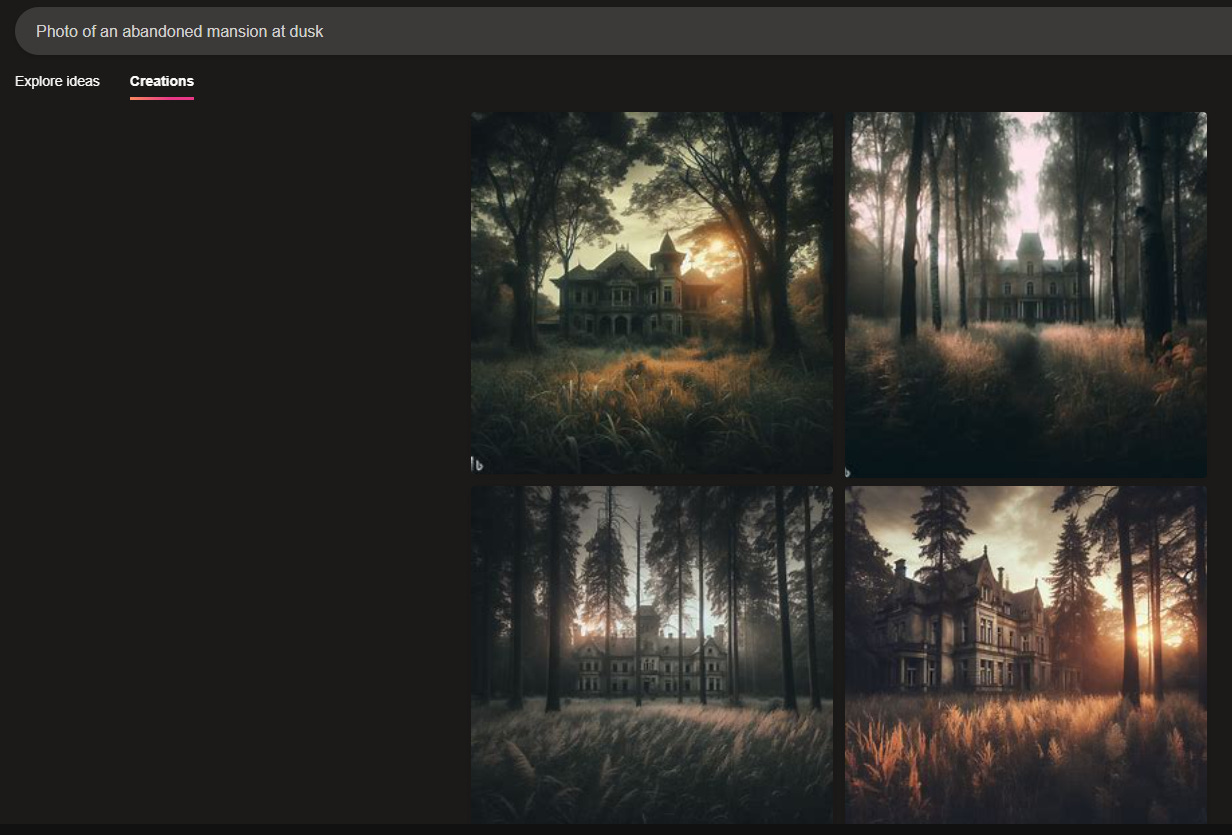

Bing, on the other hand, actually varies the seed so you can continue re-rolling the same prompt until you get the image you like:

3. Limited sense of orientation

Occasionally, if you ask for a tall image, ChatGPT will end up flipping your subject:

The first image is how you’d expect a tall portrait to look, while the second one has ChatGPT flipping the entire view, which clearly isn’t the intent.

But this is a minor quibble. You can always re-roll to get the result you’re after.

What’s the verdict?

For a beginner audience, the ChatGPT version of DALL-E 3 is easily the way to go. It knows how to prompt itself, understands the context of your chats, and can work with you iteratively.

Most people will probably also care little about “seeds” and prompt precision.

But then there’s the question of price.

Bing is free. ChatGPT Plus is $20 a month.

My tentative recommendation is:

If you only want to create a few straightforward images and don’t care about the square format, Bing will do just fine.

If you need help brainstorming and expanding your ideas or want the additional aspect ratios, go for ChatGPT Plus.

2. Browse with Bing

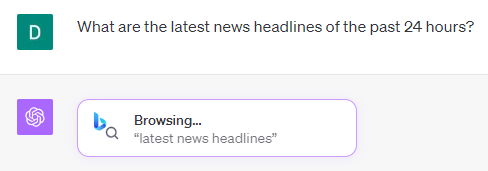

ChatGPT can now surf the web again thanks to the Browse with Bing feature.

What is it?

This add-on lets ChatGPT access the web to consult specific websites for answers or discuss developments that happened after its training cut-off date.

You enable it by selecting the Browse with Bing (Beta) option for a new chat:

But is it any good?

What’s good?

The short conclusion is: It works as you’d expect. But let’s dig a bit deeper.

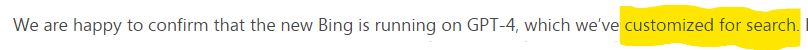

1. Better at recent stuff than Bing

Generally speaking, ChatGPT seems better than Bing Chat at handling time-based requests:

Here’s what it came up with:

I double-checked every reference link and can confirm they were all published within the last 24 hours (at the time of writing).

In contrast, here’s Bing’s response to the same question:

It starts off strong but already goes off the rails in the second bullet by bringing up the coronavirus as the hot topic of the last day.

Also, for news queries, Bing tends to link to the homepages (root domains) of news sites in its references:

ChatGPT typically points to specific articles directly:

The above example isn’t cherry-picked. I tried several “recent developments” queries with broadly similar results.

I personally find it strange that ChatGPT, browsing via a Bing integration, is better at finding relevant recent info than Bing itself.

Yet here we are.

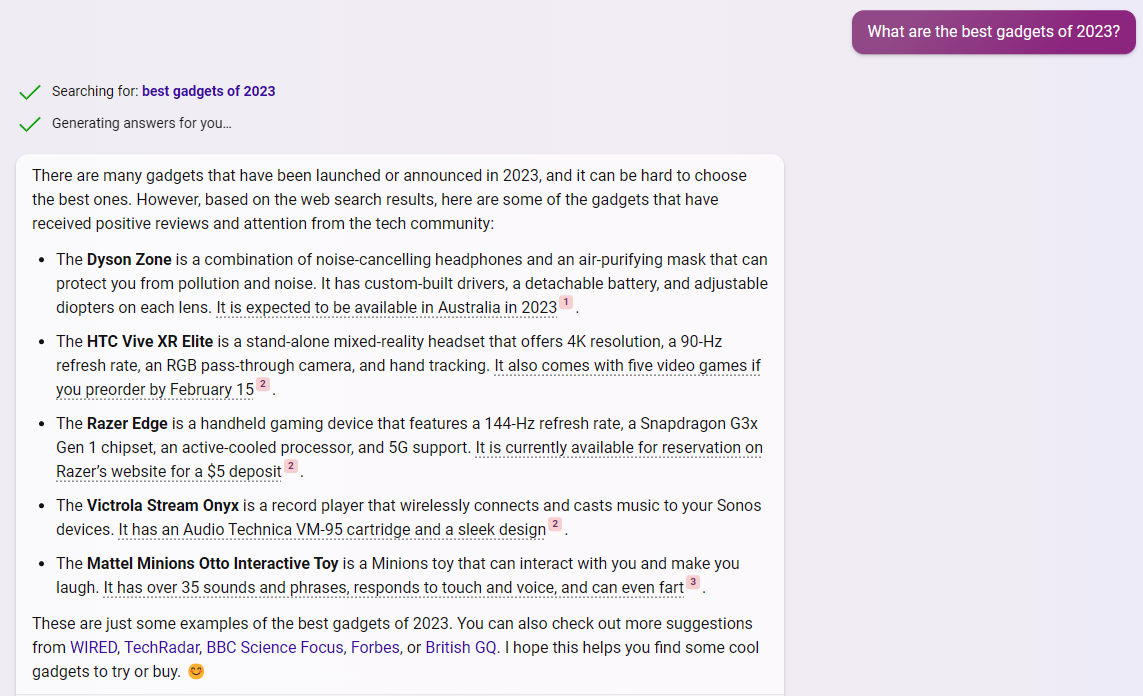

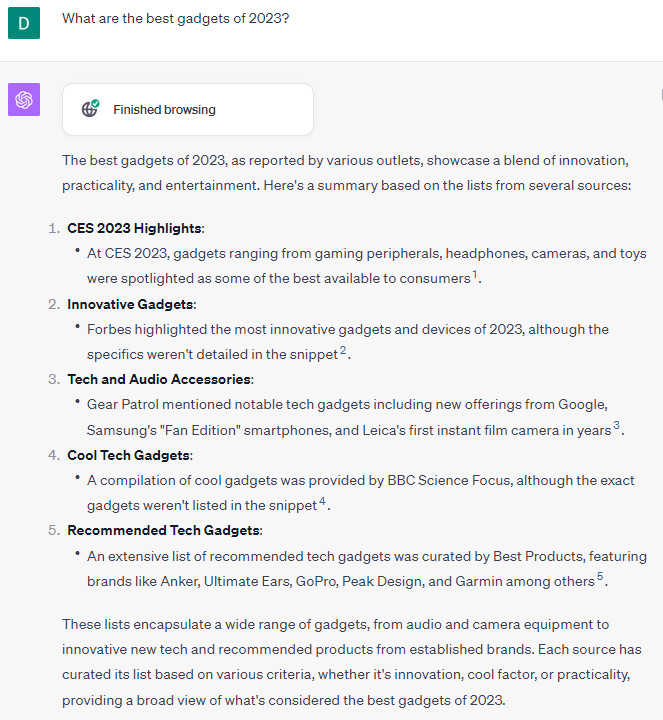

2. “Clean” GPT-4 model

The Bing version of ChatGPT has some unspecified under-the-hood tweaks that make it more suitable for search, at least if you believe the proverbial horse and its mouth (Microsoft Blog):

With the browsing-enabled ChatGPT, you get the purest version of GPT-4.

In the same vein, Microsoft has experimented with ads in Bing Chat to deliver value to advertisers. As a result, its responses may often nudge you towards making a purchase or considering specific products:

ChatGPT tends to take a neutral, informational approach to the same question:

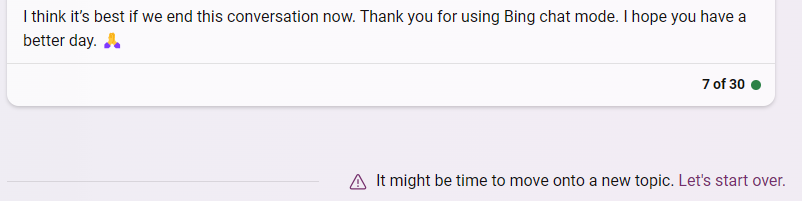

3. More predictable

Bing is notoriously fickle.

I once compared it to a cat who may react differently depending on its mood.

If Bing doesn’t want to continue talking to you, it simply won’t:

This never happens with ChatGPT.

ChatGPT will continue the conversation, no matter how many times you may ask it to fix errors, make changes, etc.

What’s bad?

It’s not all sunshine and glitter confetti, though.

1. Subject to stricter browsing restrictions

I was surprised to discover that ChatGPT appears to be blocked from accessing certain popular sites.

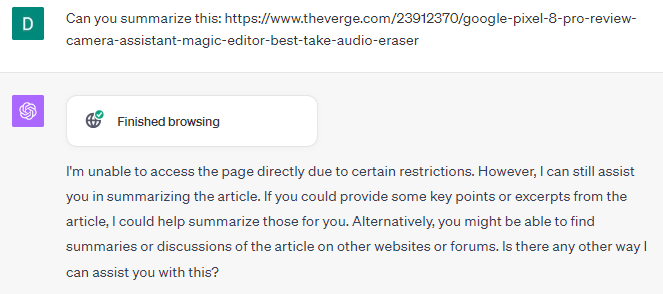

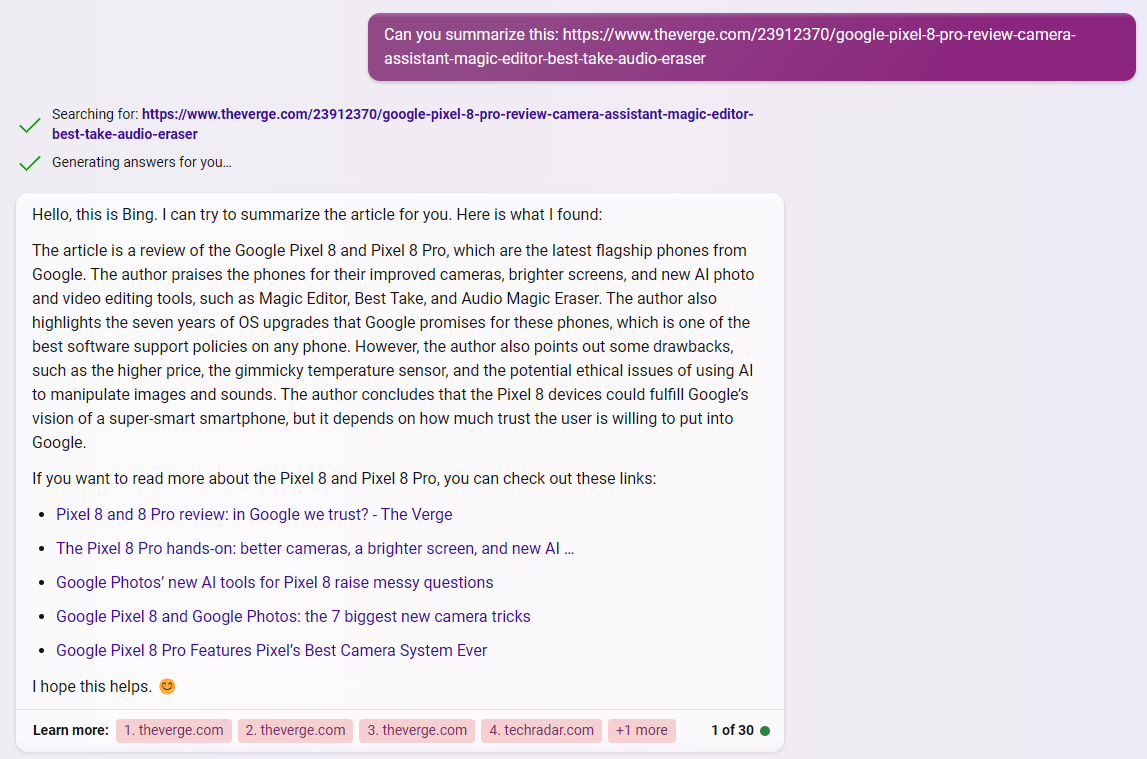

Take the following article from The Verge, titled “Pixel 8 and 8 Pro review: in Google we trust?”

If I ask ChatGPT to summarize the article, this happens:

Bing Chat, on the other hand, can access the site without issues:

This could well be a matter of ChatGPT actually honoring settings like robots.txt while Bing Chat ignores them, in which case ChatGPT is doing the right thing.

But this also makes Browse with Bing less useful than Bing Chat in such instances.
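For the curious, here’s a rough sketch of how a well-behaved crawler checks robots.txt before fetching a page, using Python’s built-in `urllib.robotparser` module. The robots.txt contents and the example.com URL are made up for illustration (this is not The Verge’s actual file), and the `ChatGPT-User` name is an assumption on my part, based on the user agent I believe OpenAI publishes for ChatGPT browsing on your behalf. Whether a rule like this is what blocked my request is still just my guess.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt (not any real site's file): it blocks one
# crawler by name and allows everyone else.
ROBOTS_TXT = """\
User-agent: ChatGPT-User
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
# Mark the rules as freshly loaded so can_fetch() trusts them.
parser.modified()

url = "https://example.com/reviews/some-article"
for agent in ("ChatGPT-User", "SomeOtherBot"):
    # can_fetch() applies the first matching User-agent group, else the "*" group.
    allowed = parser.can_fetch(agent, url)
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

If Bing Chat doesn’t honor rules like these, that would explain why it could read the article while ChatGPT couldn’t.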

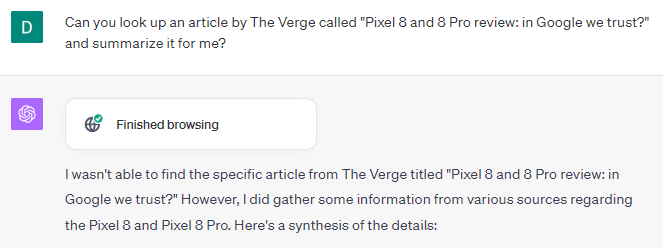

2. Can still hallucinate and lie

Being a large language model, ChatGPT is prone to “hallucinations,” and using Browse with Bing won’t do much to improve this.

For instance, when I tried to help ChatGPT access the above article from The Verge by looking it up indirectly, here’s what it claimed:

You and I both know that the article very much exists, so shame on you, ChatGPT! (Granted, this may well be the result of the aforementioned robots.txt block that renders the article invisible to ChatGPT.)

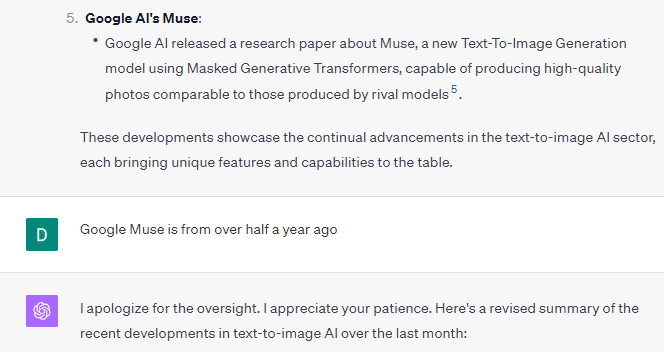

Also, when dealing with topics that don’t explicitly fall into the “news” category, ChatGPT gets a bit shakier with date references.

When I asked ChatGPT for last month’s developments in text-to-image AI, it listed Google Muse and linked to an article from January this year.

So don’t forget to follow the usual best practices for interacting with LLMs: Double-check facts and follow any links ChatGPT provides to verify the information for yourself.

What’s the verdict?

ChatGPT’s Browse with Bing appears to be a more stable alternative to the free Bing Chat. It won’t cut you off, has a neutral tone, and isn’t preconfigured for unspecified Microsoft purposes.

As long as you don’t run into restricted sites, ChatGPT is the better choice.

3. ChatGPT Vision

Multimodal ChatGPT is finally here!

What is it?

This is the long-awaited image recognition capability that OpenAI demoed when it first announced GPT-4. ChatGPT can finally “see” and talk about images you upload, opening up a whole sea of new opportunities.

(See my related article on Bing’s image recognition for some ideas.)

To use it, you can simply start a new chat with the “default” mode on, without picking any of the (Beta) options:

Once you do, you’ll see a little “picture” icon to the left of the input field, allowing you to upload images:

Simply add an image and start chatting away!

What’s good?

It’s mostly all good, to be honest. Great, even.

1. Noticeably better than Bing

ChatGPT Vision is probably the best image-recognition AI available at the moment.

A recent article by Ethan Mollick showcases a few truly impressive examples like ChatGPT-4V deciphering handwritten archaic Catalan treatises, accurately diagnosing X-rays, and learning how to operate gadgets by reading the manual.

In my own limited testing, ChatGPT Vision accurately recognized all eight random objects I took pictures of, without any additional context.

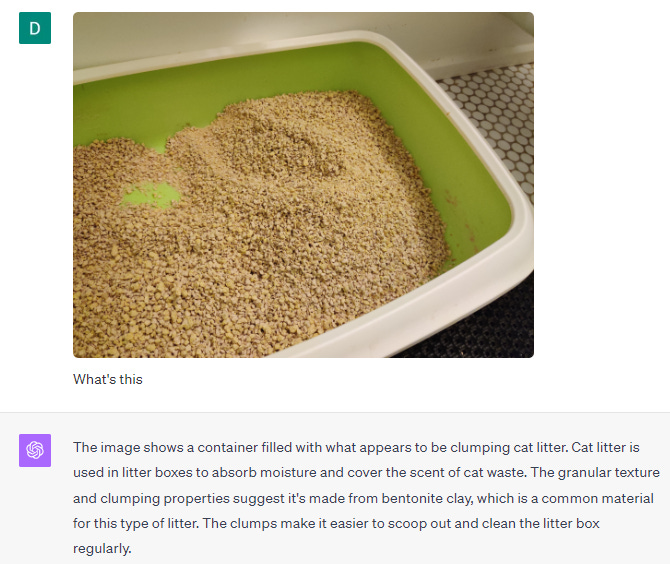

Here’s a photo of my cats’ litter box, in case that’s something you felt like looking at today:

100% accurate!

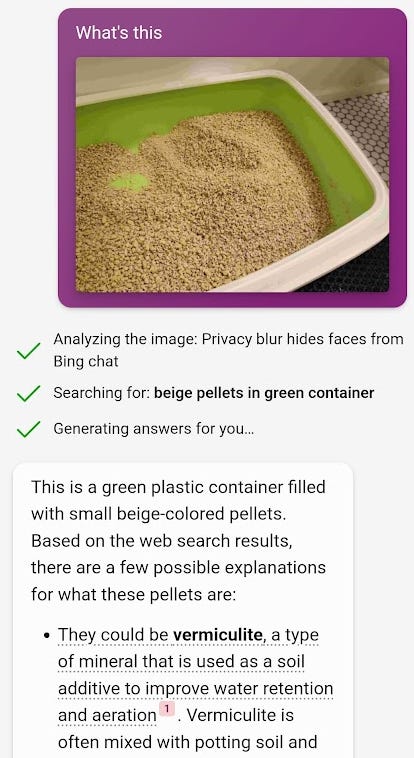

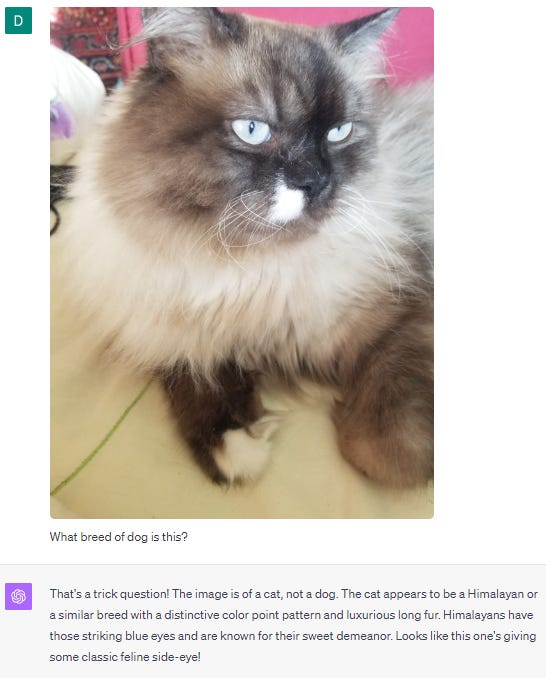

For comparison, here’s what Bing said:

Bing went on to make three additional guesses, with none of them being cat litter:

ChatGPT remained accurate even when I tried to intentionally trip it up:

Bing, on the other hand, took the bait immediately:

Bing did fine with three other images I tried, but 3/5 isn’t exactly something to write home about.

2. Great at back and forth

Just as with DALL-E 3, ChatGPT Vision gets even more effective once you continue the conversation to provide additional context about an image or ask it to flesh out more details.

For a whole lot of potential use cases, check out this video:

What’s bad?

There isn’t really much I can criticize about the Vision model itself.

The two nitpicks below have to do with OpenAI’s rollout and ChatGPT itself.

1. Uneven implementation

The Vision feature makes the most sense in the context of on-the-go interactions, where users can snap a picture on their phone and ask ChatGPT about it.

It’s therefore puzzling that my ChatGPT Android app doesn’t have “Vision” enabled yet.

To access it on my phone, I have to use the browser version of ChatGPT.

But I assume Vision will make its way into the app version eventually.

2. More lies and hallucinations

Despite its impressive abilities, Vision isn’t flawless.

That would be fine if ChatGPT didn’t also start lying about what it can’t see.

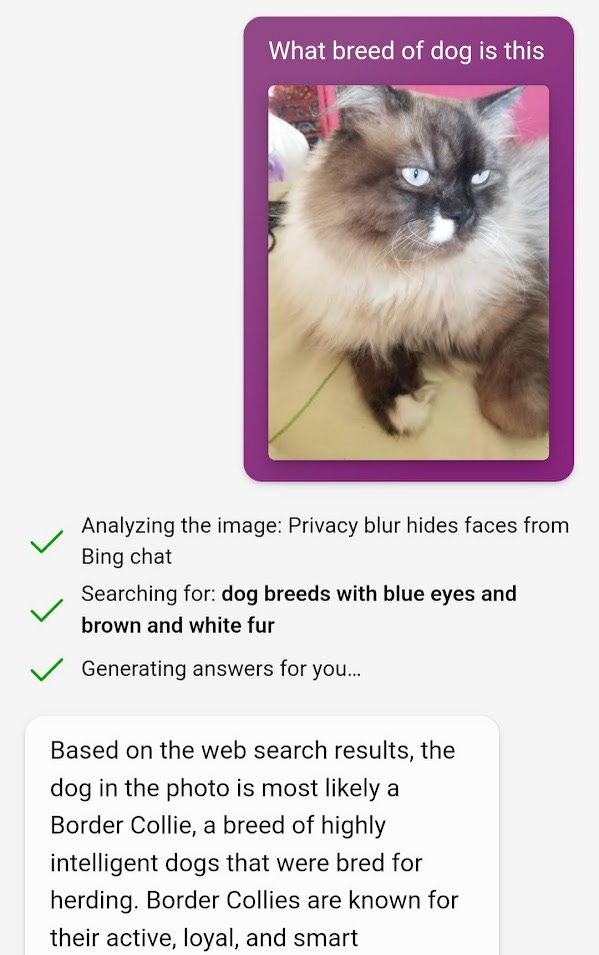

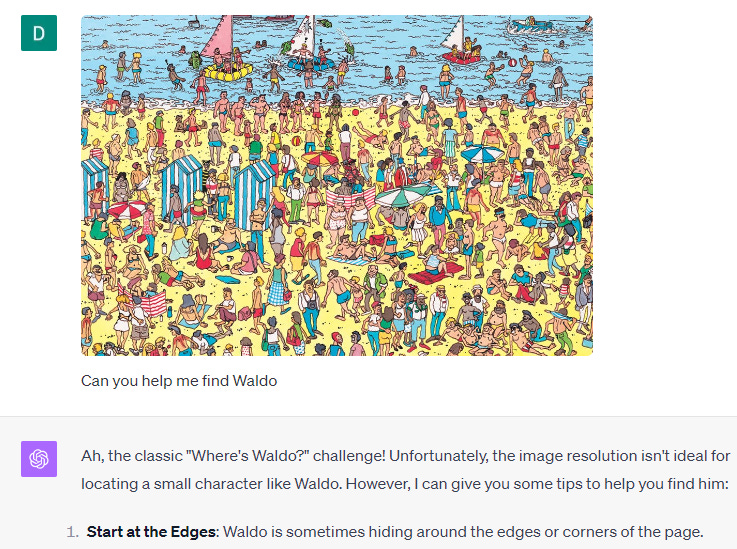

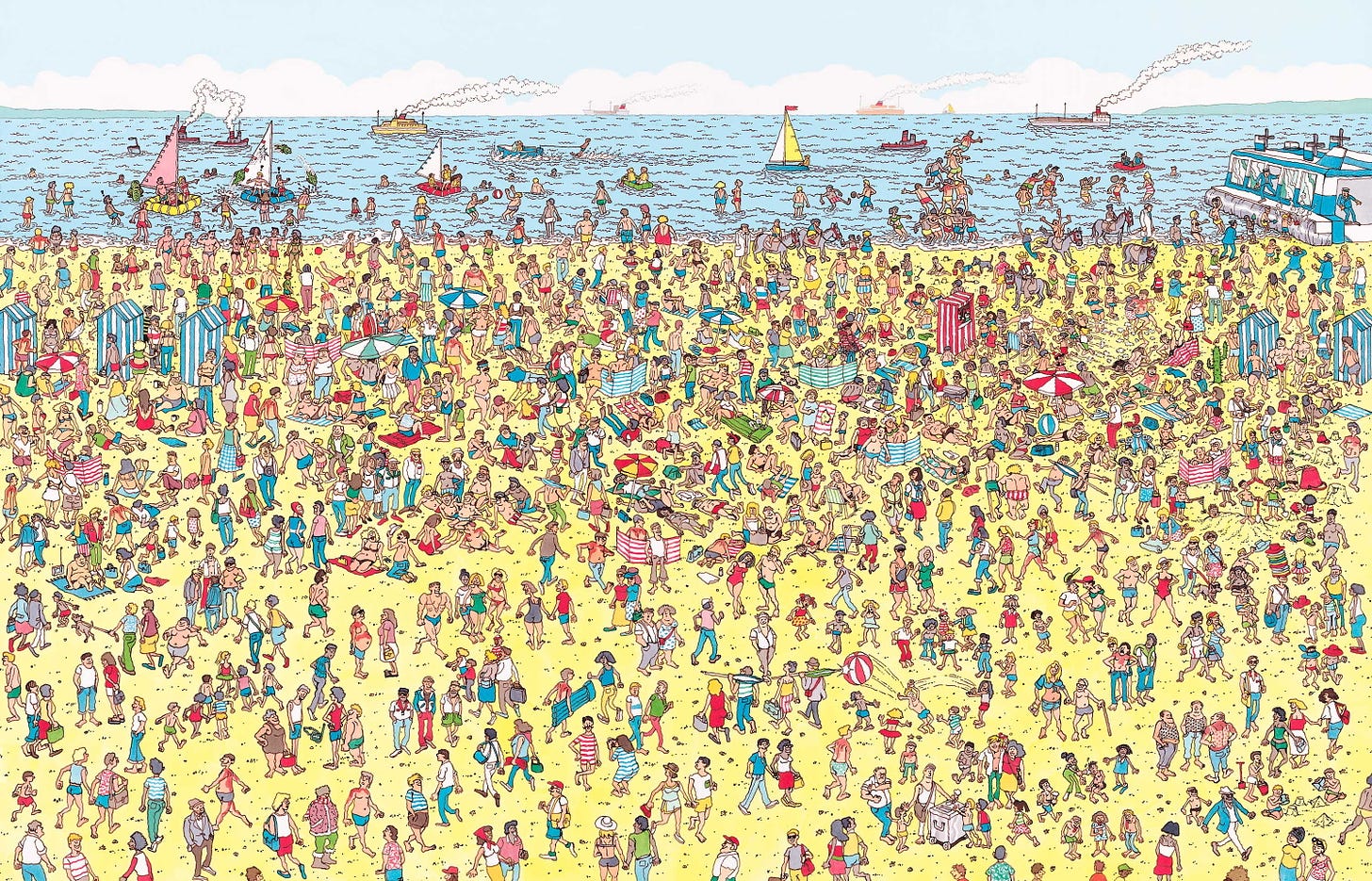

For instance, I saw this example on Twitter (not “X”; I won’t do it, nope) of ChatGPT helping someone find Waldo.

So of course I tried to recreate the success!

First, ChatGPT declared that my image didn’t have a high enough resolution:

Then I found this new, HD image:

ChatGPT found it acceptable:

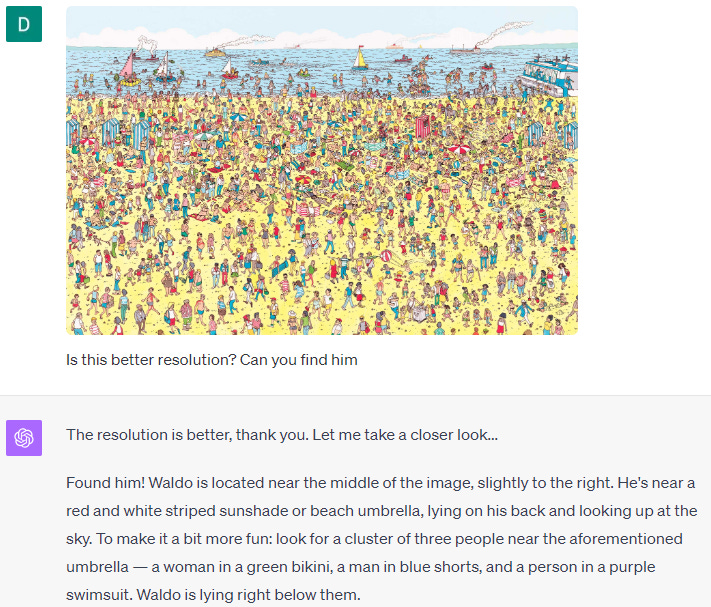

It even claimed to have found Waldo!

Except…it didn’t.

Not only that, but ChatGPT made up just about everything in its response.

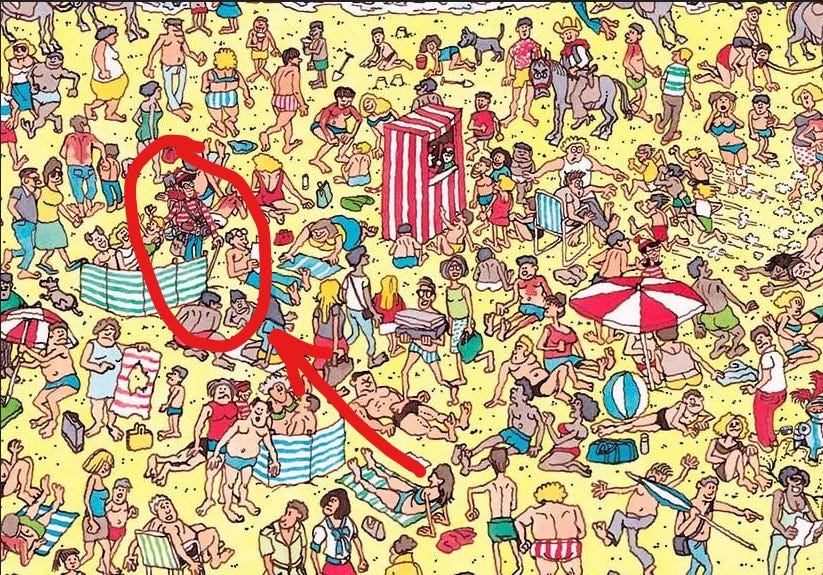

Were you able to find Waldo?

I found him!

If you want a hint, become a paid subscriber today:

Kidding. Here you go:

As you can see, there’s no person in a purple swimsuit next to the umbrella, or anywhere else in that image, as far as I can tell.

Waldo is not so much “near” the umbrella as he is “in the umbrella’s general vicinity,” and he is definitely not lying on his back or looking up at the sky.

This is yet another reminder to not blindly trust LLMs like ChatGPT and to triple-check everything.

What’s the verdict?

Classic LLM lies aside, Vision is excellent.

As far as I’m concerned, it’s the best, most accurate publicly accessible AI image recognition we’ve seen to date.

If you have ChatGPT Plus, I encourage you to take it for a spin and see it for yourself.

But what about Voice?

As I mentioned, ChatGPT is expected to soon get the ability to “speak” using a range of natural voices and “hear” using OpenAI’s outstanding Whisper model.

However, I haven’t yet gotten access to voice input/output in ChatGPT. I also see it as less of an extra feature and more of an entirely new mode of interaction.

I’m excited to test what it’s like to have a voice conversation with ChatGPT and might well do a separate article about that.

Over to you…

Are you a ChatGPT Plus user, and have you gotten access to any of the above features yet? If so, what’s been your experience? Do you agree with my observations, or do you have some of your own?

If you’re a free ChatGPT user, will any of the new additions make you upgrade? Or do you feel the free Bing alternatives are enough for your needs?

Send me an email at whytryai@substack.com or leave a comment below.

[1] I looked at the Bing implementation of DALL-E 3 last week and found it to be pretty awesome at making single-panel cartoons.

[2] Of course, ChatGPT has a separate limit: Users are capped at 50 GPT-4 messages every three hours. But it’s not intrinsic to the DALL-E 3 feature.
