The Pace of AI Progress [Revisited]

A look at AI progress within consumer tools and models. Updated with the biggest developments of 2023.

Happy 2024, crew!

I trust your holidays were full of family gatherings, joyful gift exchanges, and more sugar than is healthy or reasonable, as is tradition.

To ease us all into the new year, I want to dust off this ancient article from October 2022:

Already back then, I felt things were moving too fast, and that was a month before ChatGPT hit the scene.

So I want to ring in 2024 by taking a few extracts from the original post and following each one with the many shenanigans of 2023.

It’s quite a fun exercise to compare the 2022 and 2023 snapshots.

If you want just the “new” stuff, you can simply skip to the “Early 2024” Daniel part within each section.

Let’s go!

📝 1. Progress in text AI (LLMs)

“Late 2022” Daniel

AI copywriting software has existed in some form for years, but it wasn’t until the end of 2021 that things got truly impressive.

What’s available now…

In November last year, OpenAI unlocked full access to its latest text-generation model, GPT-3.

GPT-3 is short for “Generative Pre-trained Transformer 3,” and I’ll let this article explain it better than I ever could. The key takeaway is that texts produced by GPT-3 are extremely humanlike and coherent. Suddenly, AI could write competent marketing copy, draft articles, and understand requests written in natural language.

This opened the floodgates for a whole slew of apps, tools, and online services that give people access to automated copywriters.

If you haven’t already seen it, this interview with a GPT-3-powered Synthesia avatar demonstrates just how uncanny its ability to comprehend and respond to human language is:

While GPT-3-based services haven’t quite reached a stage where they can replace human copywriters, they’re quickly becoming essential tools for drafting articles, creating blog outlines, and composing social media posts.

What’s coming…

Very soon, OpenAI should be releasing the next version of the algorithm named—wait for it—GPT-4.

Judging by all the excited chatter surrounding it, GPT-4 is expected to be a huge leap from its already impressive predecessor.

Personally, I’m ready to be wowed by it.

“Early 2024” Daniel

Since I wrote the above…

ChatGPT (GPT-3.5) kicked off the current generative AI craze and became the fastest-growing consumer app in history. It’s currently estimated to have 180 million monthly active users.

GPT-4 launched in March 2023 and remains the best-performing large language model to this day. (That might change when Gemini Ultra gets released.)

Microsoft brought GPT-4 capabilities to its Bing Chat / Copilot assistant.

Google launched its AI-powered assistant Bard (powered first by LaMDA, then by PaLM 2, and finally by Gemini Pro).

Meta took the lead on open-source LLMs with its Llama family.

Anthropic launched Claude (now powered by Claude 2).

Inflection AI launched Pi.

Existing players and newcomers alike released dozens of other LLMs.

Hundreds of specialized tools were built around LLMs (primarily GPT-4) to help you with all aspects of life, from learning to writing marketing copy to journaling to creative writing to so much more.

State-space LLMs (like Mamba) recently emerged as a viable alternative to existing transformer-based ones, possibly heralding a paradigm shift in LLM architecture.

Perhaps the greatest change of all has been the massively improved accessibility of these tools, both in terms of user interface and price.

While early 2022 still had niche players charging up to $100 per month for their GPT-3 wrappers, by now anyone can access far more powerful LLMs directly inside products they’re already familiar with…for the low, low price of free.

🖼️ 2. Progress in image-making AI

“Late 2022” Daniel

This is the one that fully got me on board the AI train.

Pictures are so much more immediate and, well, visual.

What’s available now…

Just. So. Fucking. Much.

I’ll have to restrain myself from turning this post into a novel.

Text-to-Image

Text-to-image art only entered the scene in July this year with the closed beta of DALL-E 2 by OpenAI. Almost simultaneously, a competing AI art model from Midjourney joined the fray.

In late August, Stable Diffusion from Stability AI completed the (for now) trinity.

With these three algorithms, anyone can type a prompt into a text box and watch as AI magically conjures up an image that reflects the text.

Here’s a fun video that demonstrates how each model works with prompts and the types of images it generates:

In addition to “The Big Three,” there are sites and apps that use their own AI models. Craiyon, for instance, is especially popular for generating funny and absurd scenarios.

Image-to-Image

I covered this in my very first post.

The general idea is that in addition to a text prompt, you give the algorithm an initial image as a reference point. That image can be anything from a photograph to a painting to an embarrassingly crude sketch.

It still blows my mind.

Outpainting & Inpainting

These are sort of two sides of the same coin.

With outpainting, AI helps you expand the scene beyond your original image while maintaining its style, color palette, and overall composition.

Watch:

Inpainting—on the other hand—lets you replace any space within an image with an AI-generated alternative.

This short video showcases how the inpainting technique can be used to “fill in” an image in Photoshop with visually consistent AI creations:

2D-to-3D

In late September, NVIDIA unveiled the GET3D algorithm that can create 3D objects from a single 2D image:

GET3D is already freely available as open-source code on GitHub, and it’s only a matter of time before we see a consumer-level implementation that lets non-code-savvy folks like myself play with it.

Your-Face-to-AI-Art

For all their impressive feats of art-wizardry, AI algorithms were initially lacking in one important area: Consistency.

While you could reliably recreate images of famous landmarks or celebrities that are well-represented in the models’ training data, you couldn’t do stuff like:

Take an AI-generated object and reuse it for subsequent AI images

Insert specific real-world objects or faces into AI models

Enter Google’s DreamBooth.

With DreamBooth, you can train the AI on any face or object using just a few reference photos. To appreciate just how powerful that is, watch this:

Yeah. I know.

What’s coming soon…

Honestly?

It’s just about impossible to predict.

By the time I click “Publish” on this post, there will already be something like 187 new tools using refined and more sophisticated text-to-image models.

This space is exploding way too fast for a regular human to keep track of without going absolutely mental.

“Early 2024” Daniel

Since I wrote the above…

Text-to-image features were widely incorporated into major existing products like Microsoft Designer, Canva, and Adobe’s suite of tools.

We got even more control over images with things like DragGAN, ControlNet, Midjourney’s Style Tuner, live canvas tools, face-swapping, and much more.

There was an explosion of firms developing AI models to make 3D assets, including Common Sense Machines, Luma Labs, Meshy, etc.

Many AI image models can now reliably spell (e.g. Ideogram, DALL-E 3, and Midjourney V6).

Some are dramatically better at natural language comprehension and prompt adherence (e.g. DALL-E 3 and Midjourney V6).

In general, we went from three to at least seven main text-to-image models:

DALL-E 3 (OpenAI)

Emu (Meta)

Firefly Image 2 (Adobe)

Ideogram (Ideogram)

Imagen (Google)

Midjourney V6 (Midjourney)

SDXL (Stability AI)

Just like LLMs, text-to-image tools have become way more accessible. With the notable exception of Midjourney, all models can be used for free.[1]

📹 3. Progress in video AI

“Late 2022” Daniel

“Sure, Daniel, images are kind of neat,” you scoff, “but can AI create entire videos?”

Well…

What’s available now…

Already today, some tools let you put together videos with the help of AI. Some of the things you can do are:

Interpolation

In short, this involves generating minor variations of an image using AI and then stringing them together into an evolving video. Like so:

One of the easier ways to do this on your own is by using a Google Colab called Deforum.

It does require a bit of effort to figure out how Colabs work and how to run the steps within them. (Fortunately, this guy can help!)

Instead of selecting your own frames for an interpolation video, you can provide a string of related prompts and ask AI to attempt morphing between them through a specified number of frames. Watch this for more on how that works:

Video input

Deforum also allows you to use a base video as an input. This is quite similar to the “starting image” concept for AI-generated art.

Below you can see how one guy turned his starting video into a trippy Stable Diffusion music clip:

What’s coming soon…

Hold on to your monocles, because what comes next is a literal text-to-video bonanza.

At the end of September, Meta AI announced a model that’s capable of generating videos exclusively from text input:

It’s hard to overstate just how much of a breakthrough that is.

Getting AI to produce a coherent video is orders of magnitude more difficult than creating a single static image.

And yes, as some have pointed out, many of Meta AI’s current videos have a certain “creepy” vibe to them.

But guys…this is an algorithm. Making videos. On the fly. From a short text prompt.

Can you imagine the possibilities after these models are polished, fine-tuned, and hit the mainstream?

What’s more, Meta AI isn’t even the only player in the game. A recent model called Phenaki does pretty much the same thing.

Neither of the models is open to the public (and probably won’t be any time soon). But you can play with a limited demo of the Meta AI model over at Make-A-Video.

“Early 2024” Daniel

Since I wrote the above…

Text-to-video rapidly evolved from crude nightmare fuel like ModelScope to something much more polished.

Runway released Gen-1, followed by Gen-2, and gradually expanded its toolkit to become the most full-featured text-to-video tool on the market.

Pika Labs came out of nowhere to become a formidable free competitor.

A vast array of features now lets users control camera movement, expand the canvas, change objects inside a video, and select specific areas of the canvas to animate.

Deforum became available on Discord, making access much easier.

Google teased an impressive model called VideoPoet, capable of controllable video editing, stylization, inpainting, and more.

Upcoming tools will make it possible to reliably animate consistent characters from a single input image.

In general, we went from zero public text-to-video AI tools to at least six:

Again, many of the above are free to use, with Pika leading the pack. It’s fair to say that this field of generative AI saw the most dramatic progress of all.

🎹 4. Progress in music AI

“Late 2022” Daniel

I can’t speak with much authority here, since I haven’t paid nearly as close attention to the music side of AI. But…

What’s available now…

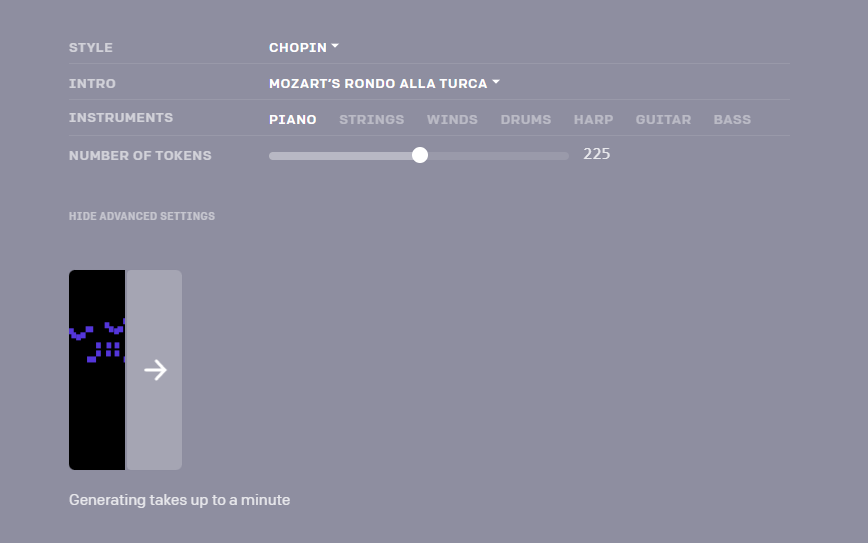

OpenAI has had a neural network capable of composing music in the works since as far back as early 2019. It’s called MuseNet and you can even check out a limited demo on their site.

There’s not much info on whether the MuseNet model will be developed further or when it might be released.

At the same time, there’s already a whole range of AI-powered music tools with varying capabilities, built for different purposes.

What’s coming soon…

Stability AI—the company behind art-making Stable Diffusion—is throwing its weight behind Harmonai, which describes itself as a “community-driven organization releasing open-source generative audio tools to make music production more accessible and fun for everyone.”

In late September, they released Dance Diffusion, which loosely follows the diffusion process used for image generation…but for music.

By all accounts, this is just the very first step in a project that may yet surprise us with AI’s musical prowess.

We’ll just have to stay tuned!

“Early 2024” Daniel

Since I wrote the above…

Riffusion launched as a curious spectrogram-to-music experiment and eventually evolved into a site that can create songs with lyrics, in whatever genre and style you ask for.

Discord-based Suno Chirp largely does the same thing but can even write the lyrics for you using ChatGPT.

Google released MusicLM and later rolled it into the new MusicFX experience in December 2023.

Meta released its MusicGen model and then incorporated it into the open-source AudioCraft project. In December 2023, Meta launched Audiobox, which lets you manipulate sound and voice in various ways.

Stability AI also entered the scene with its Stable Audio offering.

In addition to these standalone models, dozens of music generation sites now let you create AI music for all kinds of projects (e.g. Mubert).

Back in June 2023, I did a Battle Of The Bands showdown between MusicLM, MusicGen, and the “old” Riffusion…and that post is already hopelessly outdated.

You probably already know what I’m about to say, but yes: Many of these can also be used for free.

🗣️ Over to you…

As you can see, we’re truly spoilt for choice and have free access to a growing number of exciting AI tools.

To me, it’s clear that there are no signs of AI slowing down. If anything, we’re still seeing exponential progress. This makes it hard to even speculate on what 2024 might bring, but I’m excited to see it.

Did I overlook any obvious 2023 developments in any of the above fields? What are your general thoughts on the speed of progress within generative AI?

Do you read these questions or am I talking to myself?

As always, you can leave a comment or shoot me an email at whytryai@substack.com.

[1] A few of them impose daily or monthly limits on the number of generated images.

One category that seems to be missing is speech - the quality and speed of voice cloning have come a long way in the past year. Also, I agree that 2024 is probably going to be even faster - I'm not sure when things will slow down, but we've probably got at least a couple of years of low-hanging technological fruit to zoom through first.

Whew, fire hose for sure.

Is it fair to say that we saw twice as much change in 2023 as we saw in 2022, which was a revolutionary year already?