The Current Pace of AI Progress Is...Nuts?

A look back at a year of insane advances within text, image, video, and other types of consumer-level AI tools.

I’ll admit, what initially sucked me into the whole AI scene was all the cool images that pre-trained algorithms could draw.

Daniel see pretty picture. Daniel happy. Daniel enjoy shiny art.

But once I started paying closer attention, the sheer speed of developments in the AI space caught me off guard.

It’s been staggering, even by modern standards of technological progress. We’ve jumped from text-writing AI to image-drawing AI to (soon) video- and music-producing AI in the span of a single year.

That’s half the time it takes an African elephant to birth one baby.

Now, I’m not talking about all of the invisible, behind-the-scenes stuff that made this apparent AI explosion possible. Lots of people far smarter than I have been developing and training these AI models for years.

I’m talking only about the ready-to-use tools released to us mere mortals.

So I wanted to help all of us (myself included) wrap our heads around what’s out there and what’s coming.

Let’s run through the major highlights of the past year and take a peek at what’s coming next.

Text-writing AI

AI copywriting software has existed in some form for a number of years, but it wasn’t until the end of 2021 that things got truly impressive.

What’s available now…

In November last year, OpenAI dropped the waitlist for its latest text-generation model, GPT-3, opening it up to everyone.

GPT-3 is short for “Generative Pre-trained Transformer 3,” and I’ll let this article explain it better than I ever could. The key takeaway is that text produced by GPT-3 is extremely humanlike and coherent. Suddenly, AI could write competent marketing copy, draft articles, and understand requests written in natural language.

This opened the floodgates for a whole slew of apps, tools, and online services that give people access to their own automated copywriters.

If you haven’t already seen it, this interview with a GPT-3-powered Synthesia avatar demonstrates just how uncanny its ability to comprehend and respond to human language is:

While GPT-3-based services haven’t quite reached a stage where they can replace human copywriters, they’re quickly becoming an essential tool for drafting articles, creating blog outlines, and composing social media posts.

What’s coming…

In the very near future, OpenAI should be releasing the next version of the algorithm named—wait for it—GPT-4.

Judging by all the excited chatter surrounding it, GPT-4 is expected to be a huge leap from its already impressive predecessor.

Personally, I’m ready to be wowed by it.

Image-drawing AI

This is the one that fully got me on board the AI train.

Pictures are so much more immediate and, well, visual.

What’s available now…

Just. So. Fucking. Much.

I’ll have to restrain myself from turning this post into a novel.

Text-to-Image

Text-to-image art burst into the mainstream in July this year, when OpenAI opened its DALL-E 2 beta to a much wider group of users. Almost simultaneously, a competing AI art model from Midjourney joined the fray.

In late August, Stable Diffusion from Stability AI completed the (for now) trinity.

With these three algorithms, anyone can type a prompt into a text box and watch as AI magically conjures up an image that reflects the text.

Here’s a fun video that demonstrates how each model works with prompts and the types of images it generates:

In addition to “The Big Three,” there are dozens of sites and apps that use their own AI models. Craiyon, for instance, is especially popular for generating funny and absurd scenarios.

Image-to-Image

I covered this in the very first post.

The general idea is that in addition to a text prompt, you give the algorithm an initial image as a reference point. That image can be anything from a photograph to a painting to an embarrassingly crude sketch.

Here’s how that works in practice:

I know. It still blows my mind.

Outpainting & Inpainting

These are sort of two sides of the same coin.

With outpainting, AI helps you expand the scene beyond your original image while maintaining its style, color palette, and overall composition.

Watch:

Inpainting, on the other hand, lets you replace any area within an image with an AI-generated alternative.

This short video showcases how the inpainting technique can be used to “fill in” an image in Photoshop with visually consistent AI creations:

2D-to-3D

In late September, NVIDIA unveiled GET3D, a model that generates textured 3D objects after being trained on nothing but 2D images:

GET3D’s code is already freely available on GitHub, and it’s only a matter of time before we see a consumer-level implementation that lets non-code-savvy folks like myself play with it.

YourFace-to-AIArt

For all their impressive feats of art-wizardry, AI algorithms were initially lacking in one important area: Consistency.

While you could reliably recreate images of famous landmarks or celebrities that are well-represented in the models’ training data, you couldn’t do stuff like:

Take an AI-generated object and reuse it for subsequent AI images

Insert specific real-world objects or faces into AI-generated images

Enter Google’s DreamBooth.

With DreamBooth, you can train the AI on any face or object using just a few reference photos. To appreciate just how powerful that is, watch this:

Yeah. I know.

What’s coming soon…

Honestly?

It’s just about impossible to predict.

By the time I click “Publish” on this post, there will already be something like 187 new tools using refined and more sophisticated text-to-image models.

This space is exploding way too fast for a regular human to keep track of without going absolutely mental.

Video-producing AI

“Sure, Daniel, images are kind of neat,” you scoff. “But can AI create entire videos?”

Well…

What’s available now…

Already today, there are tools that let you put together videos with the help of AI. Some of the things you can do are:

Interpolation

In short, this involves generating minor variations of an image using AI and then stringing them together into an evolving video. Like so:

One of the easier ways to do this on your own is by using a Google Colab notebook called Deforum.

It does require a bit of effort to figure out how Colabs work and how to run the steps within them. (Fortunately, this guy can help!)

Instead of selecting your own frames for an interpolation video, you can provide a string of related prompts and ask AI to attempt morphing between them through a specified number of frames. Watch this for more on how that works:
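For the technically curious, here’s a toy sketch of what “morphing through a specified number of frames” can boil down to. I haven’t dug into Deforum’s code, so treat this as an assumption rather than its actual implementation. The idea: the model turns each prompt into a list of numbers (an “embedding”), and each in-between frame is generated from a blend of two neighboring embeddings. The tiny 2-number vectors and the function names `lerp` and `morph_schedule` are made up for illustration; real embeddings are far larger.

```python
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Blend vector a toward vector b: t=0 returns a, t=1 returns b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def morph_schedule(keyframes: List[Sequence[float]], frames_between: int) -> List[List[float]]:
    """Hypothetical helper: one blended vector per frame, walking through each
    consecutive pair of keyframes (e.g. prompt embeddings) in order."""
    if len(keyframes) < 2:
        return [list(k) for k in keyframes]
    frames = []
    for a, b in zip(keyframes, keyframes[1:]):
        # Step from a toward b; b itself becomes the first frame of the next pair.
        for i in range(frames_between):
            frames.append(lerp(a, b, i / frames_between))
    frames.append(list(keyframes[-1]))  # finish exactly on the last "prompt"
    return frames


# Three toy "prompts", four frames of transition between each pair.
frames = morph_schedule([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]], frames_between=4)
for f in frames:
    print(f)
```

Each printed vector would then be handed to the image model to render one frame. Some tools reportedly prefer spherical interpolation (“slerp”) over the straight-line blend used here, but the frame-by-frame stepping idea is the same.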

Video input

Deforum also allows you to use a base video as an input. This is quite similar to the “starting image” concept for AI-generated art.

Below you can see how one guy turned his starting video into a trippy Stable Diffusion music clip:

What’s coming soon…

Hold on to your monocles, because what comes next is a literal text-to-video bonanza.

At the end of September, Meta AI announced a model that’s capable of generating videos exclusively from a text input:

It’s hard to overstate just how much of a breakthrough that is.

Getting AI to produce a coherent video is orders of magnitude more difficult than creating a single static image.

And yes, as some have pointed out, many of Meta AI’s current videos have a certain “creepy” vibe to them.

But guys…this is an algorithm. Making videos. On the fly. From a short text prompt.

Can you imagine the possibilities once these models are polished, fine-tuned, and hit the mainstream?

What’s more, Meta AI isn’t even the only player in the game. Google’s recently announced Phenaki model does pretty much the same thing.

Neither model is open to the public (and probably won’t be any time soon). But you can play with a limited demo of the Meta AI model over at Make-A-Video.

Music-composing AI

I can’t speak with much authority here, since I haven’t paid nearly as close attention to the music side of AI. But…

What’s available now…

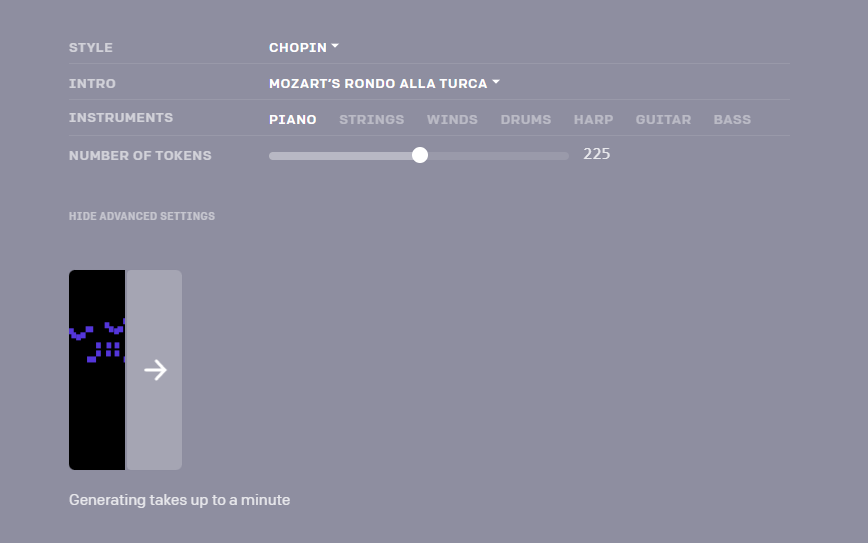

OpenAI has had a music-composing neural network in the works since as far back as early 2019. It’s called MuseNet, and you can even check out a limited demo on their site.

There’s not much info on whether the MuseNet model will be developed further or when it might be released.

At the same time, there’s already a whole range of AI-powered music tools with varying capabilities, built for different purposes.

What’s coming soon…

Stability AI, the company behind the art-making Stable Diffusion, is throwing its weight behind Harmonai, which describes itself as a “community-driven organization releasing open-source generative audio tools to make music production more accessible and fun for everyone.”

In late September, they released Dance Diffusion, which loosely follows the diffusion process used for image generation…but for music.

By all accounts, this is just the very first step in a project that may yet surprise us with AI’s musical prowess.

We’ll just have to stay tuned!

Bonus mention: OpenAI’s Whisper

If I bring up speech-recognizing voice assistants, few of you will be particularly amazed.

After all, Alexa, Google Assistant, Siri, and Cortana have been around for years.

But OpenAI’s Whisper is a next-generation speech recognition model that promises to put them all to shame. It’s trained on a larger and more diverse data set (680,000 hours of audio, according to OpenAI), which allegedly allows it to:

Maintain accuracy while processing accents, dialects, mumbling, etc.

Better cope with background noise and other audio interference

Accurately detect and transcribe technical language

Recognize and eliminate filler words, backtracking, etc.

In short, it should be able to effortlessly deal with the way we actually speak in our everyday lives.

You can already test Whisper for yourself by recording a 30-second audio clip with your microphone. (Try to see if you can throw it off!)

Over to you…

Have you tried any of these fast-emerging tools? What has been your experience? Are you impressed or underwhelmed?

Also, is there something obvious I’ve missed that had you screaming at your screen while you read this? If so, I’d love to hear about it so I can include it. Drop me an email or leave a comment below!