10X AI (Issue #46): Udio Can Sing, Gemini Can Hear, and Rastafari Palm Trees

Sunday Showdown #6: Suno V3 vs. Udio: Who makes the best songs?

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at generative AI that covers the following:

AI News + AI Fail (free): I highlight nine news stories of the week and share an AI photo or video fail for your entertainment.

Sunday Showdown + AI Tip (paid): I pit AI tools against each other in solving a specific real-world task and share a hands-on tip for working with AI.

So far, I’ve had image models create logos, AI song makers produce short jingles, AI tools generate sound effects, LLMs tell jokes, and Pika and Runway go head-to-head at lip-syncing.

Upgrading to paid grants you instant access to every Sunday Showdown (+AI Tip) and other bonus perks.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments. Lots of new LLM releases and upgrades.

1. Udio challenges Suno’s supremacy

What’s up?

Udio is the new kid on the AI music block, generating realistic-sounding songs from text prompts.

Why should I care?

Until now, Suno was the undisputed leader in the text-to-song space. Now a team of former Google DeepMind researchers has developed a promising challenger: Udio. Udio’s output quality appears to be at least on par with Suno’s, if not better. It is currently in beta and lets anyone generate up to 1,200 songs per month for free. So if you ever wanted to try making entire songs from prompts, now’s your chance.

Where can I learn more?

Follow the official announcement thread on Twitter / X.

Try making your own tracks at udio.com.

2. Gemini 1.5 Pro gets ears and goes global

What’s up?

Google Gemini 1.5 Pro can now “hear” audio files and has rolled out to 180+ countries.

Why should I care?

If you live in a newly supported country, you can now access Gemini 1.5 Pro without a VPN. Gemini’s newfound ability to hear means you can upload audio files for analysis and interact with the model using your voice. I tested this by recording the phrase “Mary had a little lamb, its fleece was…what?” and Gemini 1.5 Pro correctly responded with “White as snow ❄️.” So yeah, it’s practically AGI now.

Where can I learn more?

Read the official announcement post.

Try Gemini 1.5 Pro for free in Google AI Studio.

3. Upgraded GPT-4 Turbo tops the charts again

What’s up?

OpenAI released an updated GPT-4 Turbo, which is already available to ChatGPT Plus users.

Why should I care?

The new version climbed straight back to the top of the LMSYS Chatbot Arena leaderboard, leapfrogging Claude 3 Opus. GPT-4 Turbo is now better at writing, math, logic, and coding. If you use ChatGPT Plus, you should already be benefiting from this smarter model.

Where can I learn more?

Read the official announcement post on Twitter / X.

See the coding benchmarks post on Twitter / X.

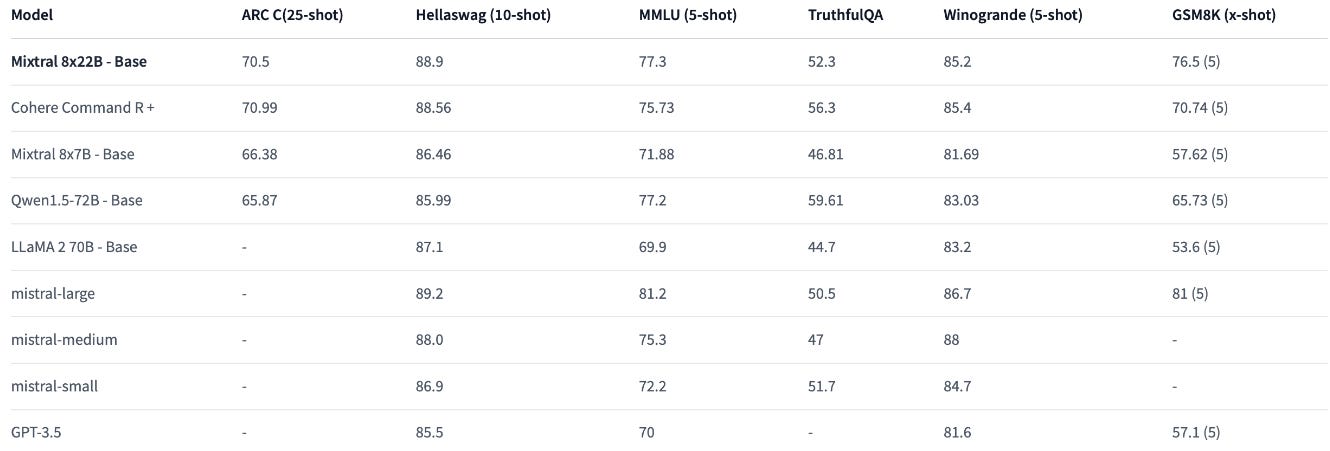

4. Mixtral 8x22B is an open-weight beast

What’s up?

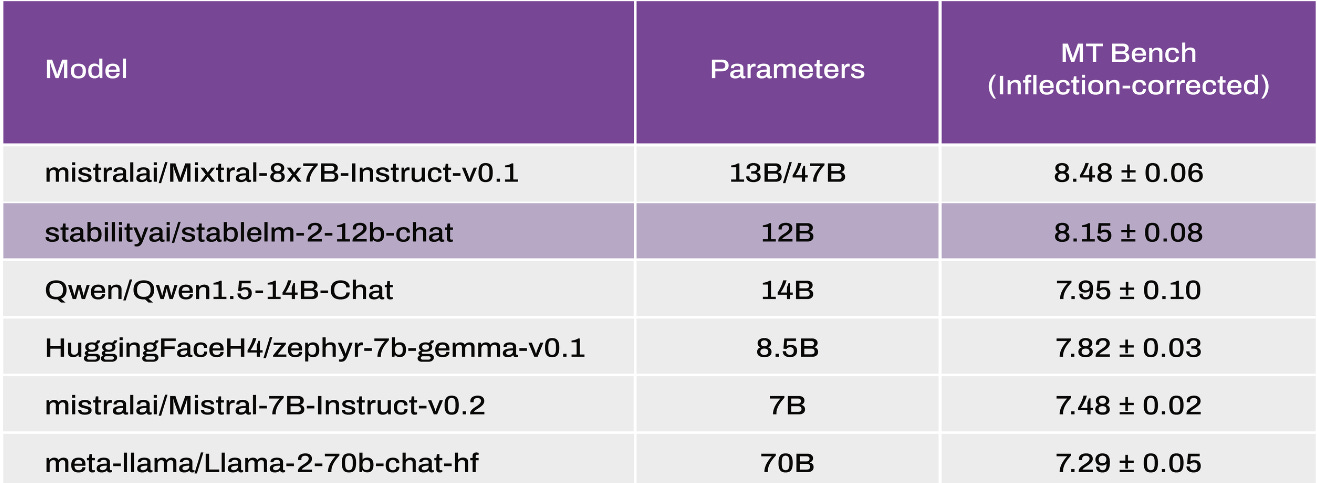

Mistral’s newest mixture-of-experts (MoE) model, Mixtral 8x22B, is leading the open-weight model charts.

Why should I care?

Open-source and open-weight models are constantly pushing the envelope, and Mixtral 8x22B is the next step on that journey. (Soon, we’ll see Llama 3.) It uses the same MoE architecture as Mixtral 8x7B, but each “expert” has roughly three times the parameters. As a result, Mixtral 8x22B outperforms other open models on practically every measured LLM benchmark.
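Curious what “mixture of experts” actually means? Here’s a toy sketch of the general idea, not Mistral’s implementation. It assumes top-2 routing (a small router scores all eight experts and each token is sent only to the two highest-scoring ones, as described for Mixtral 8x7B), uses plain Python lists instead of tensors, and the function names and “experts” are hypothetical stand-ins.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router_logits: List[float],
                experts: List[Expert], top_k: int = 2) -> Vector:
    """Toy sparse MoE layer: run only the top_k experts and blend their outputs."""
    # Rank experts by router score and keep the top_k (ties keep index order).
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i],
                    reverse=True)[:top_k]
    # Turn the chosen experts' scores into mixing weights that sum to 1.
    weights = softmax([router_logits[i] for i in chosen])
    output = [0.0] * len(token)
    for weight, idx in zip(weights, chosen):
        expert_out = experts[idx](token)  # only the chosen experts are evaluated
        output = [o + weight * y for o, y in zip(output, expert_out)]
    return output


if __name__ == "__main__":
    # Eight hypothetical "experts"; expert i simply scales its input by i + 1.
    toy_experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(8)]
    # The router strongly prefers experts 2 and 6 (equal scores -> 50/50 blend).
    logits = [0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 5.0, 0.0]
    print(moe_forward([1.0, 2.0], logits, toy_experts))  # [5.0, 10.0]
```

The key point: only two of the eight experts do any work for a given token, so an MoE model needs far less compute per token than its total parameter count would suggest.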

Where can I learn more?

Read this article by VentureBeat.

Grab the model weights at Hugging Face.

Try chatting with Mixtral 8x22B at Perplexity Labs.

Watch this walkthrough by Prompt Engineering.

5. Stable LM 2 also gets an upgrade

What’s up?

Stability AI upgraded Stable LM 2 1.6B and released a bigger model called Stable LM 2 12B. Catchy!

Why should I care?

The small Stable LM 2 1.6B maintains its low system requirements while being better at “conversation abilities.” The medium-sized Stable LM 2 12B speaks 7 languages and “can handle a variety of tasks that are typically feasible only for significantly larger models, which often necessitate substantial computational and memory resources.”

Where can I learn more?

Read the official announcement post.

Try the Stable LM 2 12B Hugging Face demo.

6. Google expands its Gemma family

What’s up?

Google released CodeGemma and RecurrentGemma, two new additions to its Gemma family of smaller models.

Why should I care?

CodeGemma, which comes in 2B and 7B variants, is a solid lightweight assistant for tasks in popular programming languages like Python, JavaScript, and Java.

RecurrentGemma has a unique non-transformer architecture that is tailor-made to get better performance out of devices with limited memory.

Where can I learn more?

Read the official announcement post.

7. Spotify introduces AI playlists

What’s up?

Spotify Premium users can now create tailored playlists from a descriptive text prompt.

Why should I care?

You’re no longer limited to vague, pre-curated playlists. Now you can describe exactly what you’re after (e.g. “an indie folk playlist to give my brain a big warm hug” or “relaxing music to tide me over during allergy season”) and watch Spotify put together a fitting playlist with the help of AI. It’s available now to Premium users in the UK and Australia, with more countries to come.

Where can I learn more?

Read the official announcement post.

8. Create cool time-lapse videos with MagicTime

What’s up?

A global team of researchers showcased a new MagicTime model that can generate realistic time-lapse videos from text prompts.

Why should I care?

This “metamorphic time-lapse video generation model” is trained on time-lapse videos. This allows MagicTime to “learn” real-world physics and then reliably generate similar time-lapse videos from text prompts. As a result, the model is well suited to this niche type of video clip.

Where can I learn more?

Visit the MagicTime project page.

Read the research paper (PDF).

Check out the GitHub page.

Try the (slow and buggy) Hugging Face demo.

9. Google’s AI photo-editing is now for everyone

What’s up?

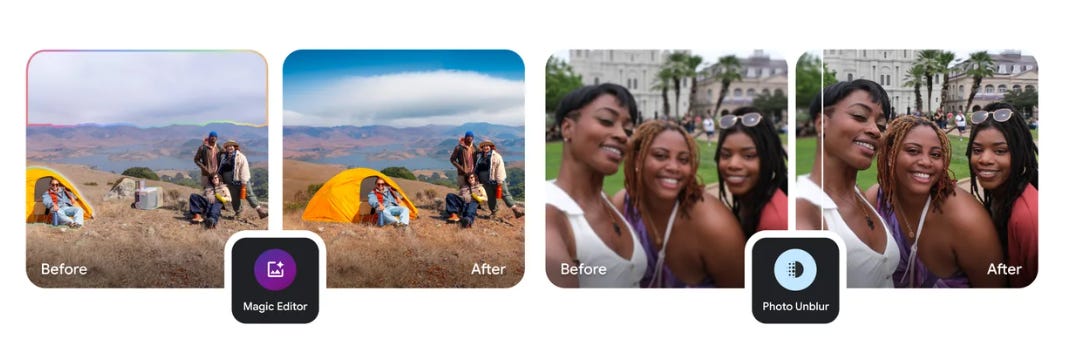

Google is making some AI photo editing features available to all Google Photos users.

Why should I care?

Previously, these AI photo features were only available to those with a Google Pixel product. Starting May 15, anyone using Google Photos will be able to use Magic Eraser, Photo Unblur, and Portrait Light, regardless of device. In addition, Magic Editor will expand from Pixel 8 / 8 Pro users to all Pixel devices.

Where can I learn more?

Read the official announcement post.

🤦‍♂️ 10. AI fail of the week

I can’t decide if this is inventive or offensive, DALL-E 3 (final cartoon is here)

Anything to share?

Sadly, Substack doesn’t allow free subscribers to comment on posts with paid sections, but I am always open to your feedback. Feel free to send me a message.

⚔️ Sunday Showdown (Issue #6) - Suno vs. Udio: Which one produces the best songs?

Today’s Sunday Showdown was a given.

I just had to see how the newcomer (Udio) fares against the incumbent (Suno).

The last time I pitted Suno against Riffusion, I used Suno’s older V2 model. Now it looks like Suno’s current best model, V3, is available to everyone.

That’s what I’ll be using.

Here’s a teaser for free subscribers:

Game on!