10X AI (Issue #39): OpenAI's Sora, Google's Gemini 1.5, Stable Cascade, and a "Hand Ox?"

PLUS: ChatGPT memory, Reka Flash, NVIDIA's Chat with RTX, Slack's AI assistant, Cohere's Aya, and Amazon's BASE TTS model

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at generative AI news, tools, and tips for the average user.

Today’s yet another all-news edition.

Let’s get to it.

This post might get cut off in some email clients. Click here to read it online.

🗞️ AI news

Here are this week’s AI developments.

1. Gemini 1.5 can handle up to 10 million tokens

After finally releasing Gemini Ultra to the public last week, Google just followed up with a knockout punch by announcing Gemini 1.5.

Gemini 1.5 uses a mixture-of-experts architecture to achieve higher efficiency.
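If "mixture-of-experts" sounds abstract, here's a toy sketch of the general idea: a router scores several specialist sub-networks and only the top few actually run for a given input, which is where the efficiency comes from. To be clear, this is not Gemini's actual design. The four "experts," the hard-coded `gate_scores`, and the `moe_forward` helper are all made up for illustration (in a real model, the router's scores are learned).

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Four toy "experts": each is just a simple function of the input number.
# (Hypothetical stand-ins for the sub-networks inside a real model.)
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x ** 2,
    lambda x: -x,
]

def moe_forward(x, gate_scores, top_k=2):
    """Run only the top_k highest-scoring experts and blend their outputs."""
    weights = softmax(gate_scores)
    chosen = sorted(range(len(experts)), key=lambda i: weights[i], reverse=True)[:top_k]
    norm = sum(weights[i] for i in chosen)
    output = sum(weights[i] / norm * experts[i](x) for i in chosen)
    return output, chosen

# Assumption: these gate scores are hard-coded; a real router would compute them.
output, used = moe_forward(3.0, gate_scores=[0.1, 2.0, 1.5, -1.0])
print(f"Experts used: {used} out of {len(experts)}")
```

Only two of the four experts do any work here, so the "model" spends roughly half the compute it would if every expert ran on every input.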

But the most noteworthy thing about Gemini 1.5 is the 10-million-token context window (in internal testing). For reference, the current undisputed Context Window Champion is Claude 2.1 with 200K tokens.

More impressively, Gemini 1.5 can successfully pass the “Needle In A Haystack” test, finding a specific short text string within 1 million tokens 99% of the time.
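Curious how a "Needle In A Haystack" test works? Here's a minimal sketch of the general recipe: bury one short fact at different depths inside a long pile of filler text, ask for it back, and count how often the answer is right. Everything in it is an assumption for illustration, including the filler sentences, the passphrase, and `toy_model`, which is a simple text search standing in for a real LLM call. It is not Google's actual test harness.

```python
import random

def build_haystack(filler_sentences, needle, depth, total_sentences):
    """Return a long text with `needle` inserted at a relative depth (0.0 to 1.0)."""
    rng = random.Random(0)  # fixed seed so the run is repeatable
    sentences = [rng.choice(filler_sentences) for _ in range(total_sentences)]
    position = int(depth * total_sentences)
    sentences.insert(position, needle)
    return " ".join(sentences)

def toy_model(context, question):
    """Hypothetical stand-in for an LLM: scans the context for the passphrase."""
    marker = "The secret passphrase is "
    start = context.find(marker)
    if start == -1:
        return ""
    end = context.find(".", start)
    return context[start + len(marker):end]

def run_needle_test(model, depths, total_sentences=10_000):
    """Hide the needle at each depth and return the share of correct answers."""
    filler = [
        "The sky was grey that morning.",
        "Nobody noticed the old clock.",
        "Rain fell on the quiet town.",
    ]
    needle = "The secret passphrase is tuxedo-chicken."
    hits = 0
    for depth in depths:
        haystack = build_haystack(filler, needle, depth, total_sentences)
        answer = model(haystack, "What is the secret passphrase?")
        hits += answer == "tuxedo-chicken"
    return hits / len(depths)

accuracy = run_needle_test(toy_model, [0.0, 0.25, 0.5, 0.75, 1.0])
print(f"Retrieval accuracy: {accuracy:.0%}")
```

To test a real model, you would swap `toy_model` for a function that sends the haystack and the question to that model and returns its reply.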

To see what the increased context window combined with Gemini’s native multimodality might mean in practice, take a look:

To begin with, Google will release a Gemini 1.5 Pro version with a more modest context window of 128K tokens. However, some early testers will get to experience up to 1M tokens before Google makes this option available as a paid upgrade.
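To put those context window numbers side by side, here's a quick back-of-the-envelope sketch. The token counts come from this issue; the `WORDS_PER_TOKEN` value of 0.75 is my assumption, based on a common rule of thumb for English text, so treat the word counts as rough estimates.

```python
# Context window sizes (in tokens) mentioned in this issue.
context_windows = {
    "Claude 2.1": 200_000,
    "Gemini 1.5 Pro (standard)": 128_000,
    "Gemini 1.5 Pro (early testers)": 1_000_000,
    "Gemini 1.5 (internal testing)": 10_000_000,
}

WORDS_PER_TOKEN = 0.75  # assumption: rough rule of thumb for English text

def approx_words(tokens):
    """Estimate how many English words fit in a given number of tokens."""
    return int(tokens * WORDS_PER_TOKEN)

baseline = context_windows["Claude 2.1"]
for name, tokens in context_windows.items():
    ratio = tokens / baseline
    print(f"{name}: {tokens:,} tokens, ~{approx_words(tokens):,} words, {ratio:.2f}x Claude 2.1")
```

By this math, the 10-million-token window is 50 times larger than Claude 2.1's, while the initial 128K release is actually a bit smaller.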

2. OpenAI’s Sora ushers in a new era of text-to-video

Sadly, Google got to bask in its PR glory for just a couple of hours before OpenAI completely stole the show with this:

What you’re looking at is a compilation of clips generated by OpenAI’s new text-to-video model: Sora.

To say that Sora sets a new standard for AI video is an understatement. For comparison, here’s the current crop of text-to-video models.

It’s not even close.

Sora blows the competition out of the water on every imaginable metric, creating 1-minute-long videos in one go. (Today’s models generate roughly 4 seconds at a time.)

Sora is also capable of understanding and following long, detailed prompts, thanks to the “better captions” approach from DALL-E 3 that I explained in great detail.

In addition to text-to-video generation, Sora can:

Create high-quality photographic images

Animate any input image

Combine text and image input into a video scene

Extend any generated video backward or forward

Make changes to existing videos via text commands

Connect two unrelated videos by “imagining” entirely new, natural transition segments (this one made me channel my inner Keanu and go “Whoa!”)

I encourage you to check out the research report with many impressive examples.

For now, Sora is just at the research stage, and there’s no indication as to when any of us will be able to try it out.

But it’s certainly enough to kick off a new “arms race” in text-to-video.

In the words of Cristóbal Valenzuela, CEO of Runway (the current AI video leader):

3. Stable Cascade is fast and pretty

Finally, something we get to play with right away!

Stability AI launched a new text-to-image model called Stable Cascade, built on a new three-stage, “cascading” architecture inspired by Würstchen.

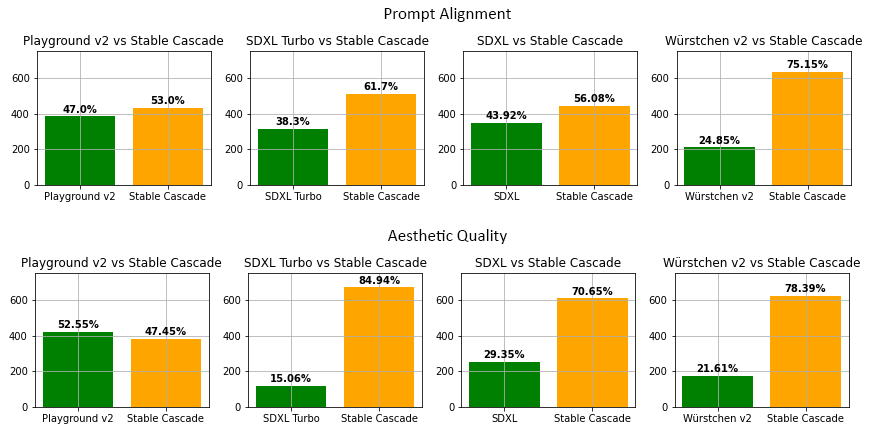

The model outperforms almost every challenger on both prompt alignment and output quality:

Stable Cascade is also faster at generating images than both Stable Diffusion XL and Playground 2 (but not quite as instant as SDXL Turbo).

Want to try it for free?

Just head to Stable Cascade on Hugging Face.

On the right-hand side, pick any available space that uses the model:

I used this space to generate this image of a chicken wearing a tuxedo, because I have the mind of a toddler:

What will you make?

4. ChatGPT gets long-term memory

The leak from January is confirmed: ChatGPT will soon be able to remember facts about you and your preferences across all chats.

This will enable ChatGPT to become more helpful as a truly personal assistant. You can let ChatGPT pick up key facts on its own or explicitly ask it to remember specific things during your chats.

Don’t worry: If this creeps you out, you can turn the memory feature off outright. There’ll also be a “Temporary chat” option, which is the equivalent of “Incognito” mode in Google Chrome where your current session isn’t saved.

Memory is being rolled out to a subset of users this week, with a wider release to follow soon.

5. Reka Flash gives contenders a run for their tokens

Reka AI released a new multimodal and multilingual model called Reka Flash.

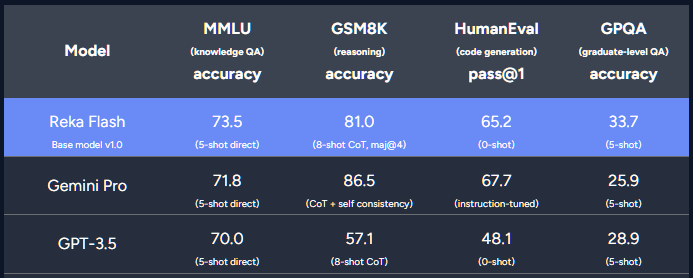

Despite its modest size (21B), Reka Flash goes neck and neck with mid-tier models like Gemini Pro and GPT-3.5 on several LLM benchmarks:

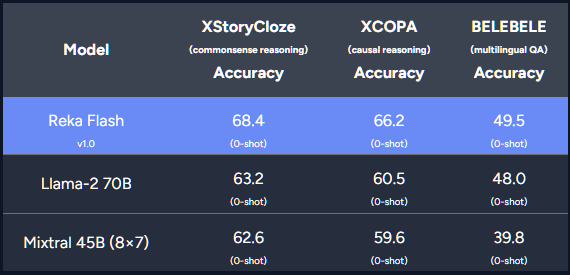

When it comes to multilingual performance, Reka Flash also outperforms Mixtral and Llama 2:

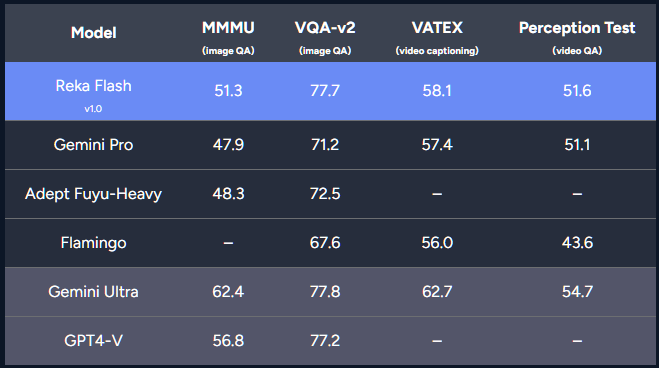

Finally, Reka Flash beats Gemini Pro on multimodal benchmarks, approaching Gemini Ultra levels on some of them.

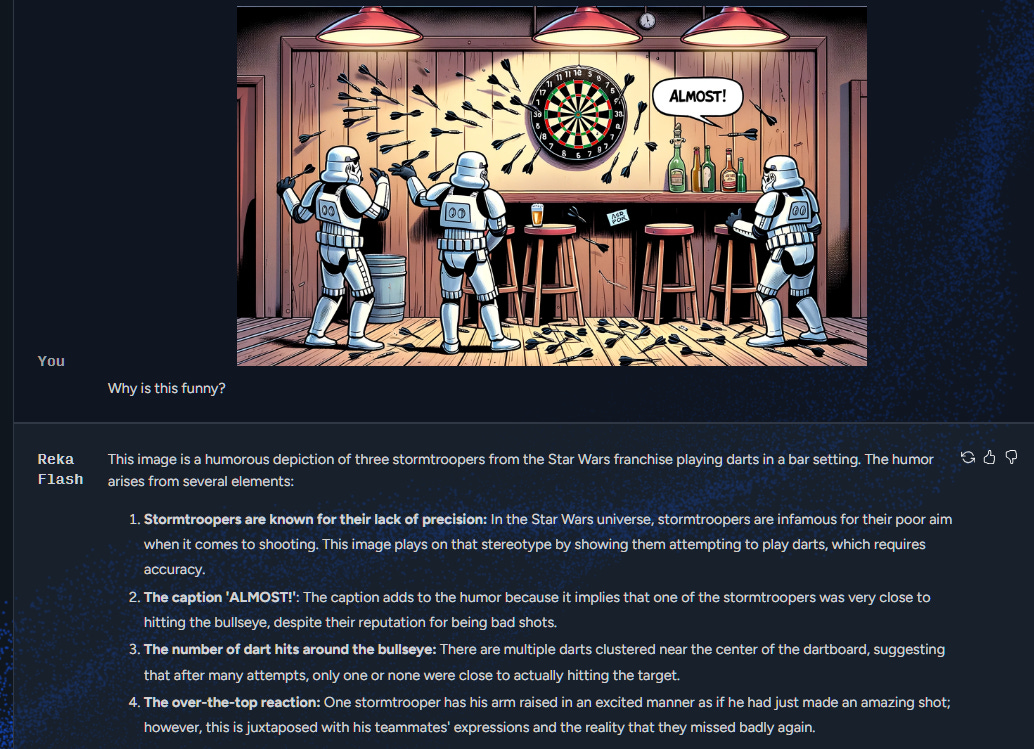

But don’t take Reka AI’s word for it: They have a playground where you can test the models for free. (You will need to sign up, though.)

I fed Reka Flash this AI Jest Daily cartoon, and it did reasonably well:

6. NVIDIA lets you securely chat with your data

Great, yet another “Chat with your documents” app, right?

Yes, but…

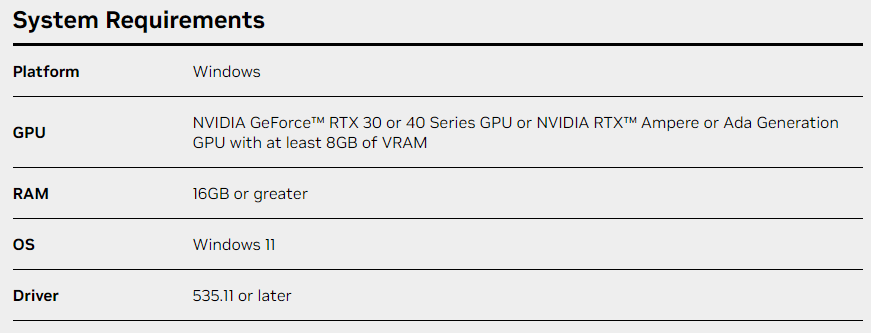

NVIDIA’s Chat with RTX does this quickly and securely because it runs locally on your Windows RTX PC or workstation. Here:

Chat with RTX lets you pick an LLM (currently Llama and Mistral), then upload any docs, notes, videos, or other data to analyze and chat about.

The demo app is available to download for free, but note the minimum requirements:

7. Slack’s AI assistant can catch you up

Slack is great for workplace communication, but sometimes you end up drowning in the sea of messages, threads, and channels.

Enter Slack’s new AI assistant, the primary function of which is to keep you up to speed by:

Summarizing long threads in case you’ve missed the conversation

Generating recaps with key highlights across all channels

Answering questions related to your Slack content

You can learn more here.

8. Cohere’s Aya speaks over 100 languages

What do you get when you combine the work of 3,000 independent researchers across 119 countries?

A massively multilingual open-source model called Aya.

The already impressive Reka Flash above speaks 32 languages.

Aya can handle 101 languages while achieving state-of-the-art performance on multilingual benchmarks.

Much like Reka AI, Cohere has a playground where you can test Aya for free.

9. Amazon’s best-in-class text-to-speech model

Amazon has a TTS model called BASE TTS, which stands for Big Adaptive Streamable TTS with Emergent Abilities. (Yeah, it’s a mouthful.)

BASE TTS is currently the largest text-to-speech model in existence. It’s been trained on 100K hours of public domain speech data, which Amazon claims gave BASE TTS the emergent ability to sound more natural.

It doesn’t look like we can test the model just yet, but you can check out over a dozen audio samples shared by Amazon.

🤦‍♂️ 10. AI fail of the week

Enjoy this visual history lesson from Gemini:

Have an AI fail of your own? Send it to me for upcoming issues!


Previous issue of 10X AI:

10X AI (Issue #38): Gemini Ultra, Redesigned Copilot, Apple's MGIE, and a Headless Sloth


Thanks for the great breakdown. I've been playing around with Gemini but hadn't seen that incredible video. Let's hope it's not a scam like the last demo

Sora is certainly impressive. I read something about how it sort of figures out the rules of physics as it goes along... I'm not sure what all that's about, but have you read anything to that extent/in that direction?