10X AI (Issue #38): Gemini Ultra, Redesigned Copilot, Apple's MGIE, and a Headless Sloth

PLUS: Stable Video Diffusion 1.1, Hugging Face assistants, MetaVoice-1B, Smaug-72B, BUD-E voice assistant, and background removal by BRIA AI.

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at generative AI news, tools, and tips for the average user.

I’m traveling with my wife and kids, so this will be a condensed, all-news edition.

Let’s get to it.

🗞️ AI news

Here are this week’s AI developments.

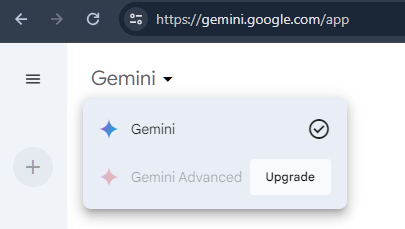

1. Gemini Ultra has arrived

After teasing Gemini Ultra—Google’s answer to GPT-4—in early December, Google finally made it available to the broader public.

In parallel news: Bard is no more. It’s now called Gemini and lives at gemini.google.com.

By default, Gemini is powered by what used to be called the “Gemini Pro” model.

But for $19.99 per month, you can upgrade to Gemini Advanced, which uses the newly released Gemini Ultra 1.0 model. (Yeah, it’s a bit of a naming mess.)

Google is clearly positioning Gemini Advanced as an alternative to ChatGPT Plus. The monthly price also includes 2TB of storage and several upcoming integrations.

Google is currently offering a two-month free trial of Gemini Advanced to let everyone get a taste.

So does Gemini Ultra live up to the hype?

In short: Opinions are currently a mixed bag.

One early tester referred to it as “clearly a GPT-4 class model” after weeks of testing (he had early access). Others have been far less generous, and at least one commentator has a few interesting takes on why that might be.

I intend to do some testing of my own, but this overview by AI Explained is worth a look.

No matter what, Gemini is here to stay.

Bard is dead. Long live Gemini.

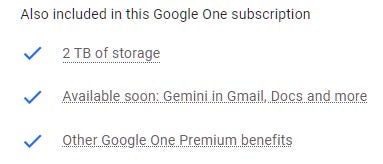

2. The new(ish) Copilot

Microsoft isn’t sleeping, either.

Its Copilot (formerly Bing Chat) just underwent an overhaul. Here’s everything that’s new:

- A redesigned look and feel to make the first experience more intuitive
- The ability to make minor changes to generated images directly in Copilot
- A new fine-tuned Deucalion model that should make Copilot better and faster in “Balanced” mode

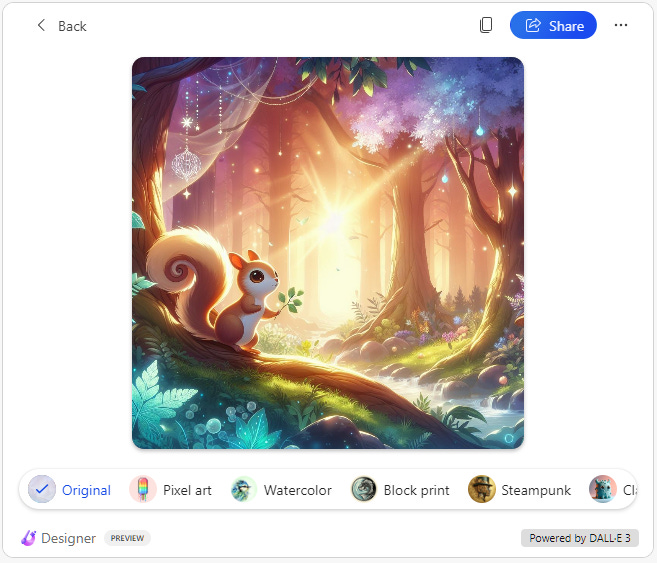

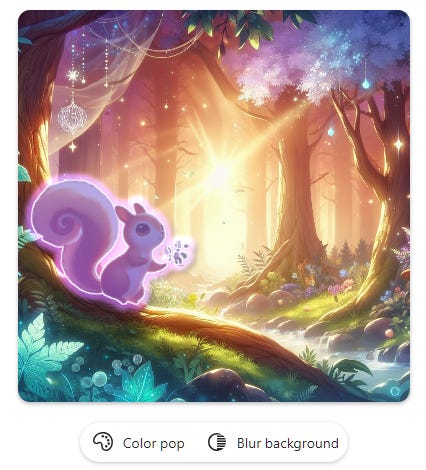

I tested the image-editing options. After making an image of a squirrel in a magic forest, I can re-imagine it in different styles by simply picking them from the ribbon:

Here’s “Pixel art,” “Block print,” and “Art deco”:

I can also select specific objects in the image…

…to blur the background…

…or use “color pop” and make everything else black and white:

The editing features are so far only available in the US, UK, Australia, India, and New Zealand. (All hail VPN!)

Finally, Microsoft’s Super Bowl ad showcases the company’s vision for its Copilot:

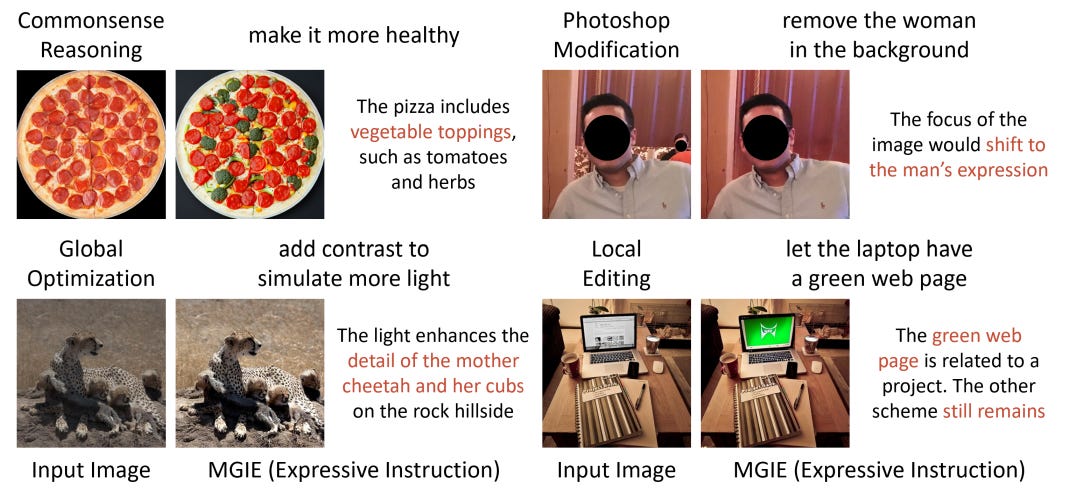

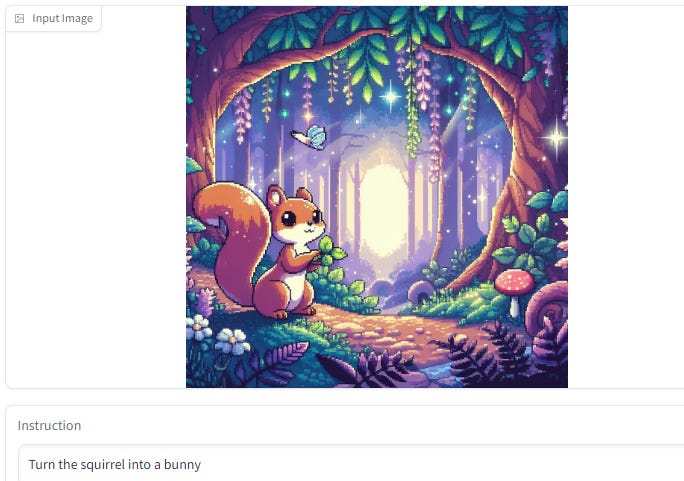

3. Apple’s MGIE lets you edit images using words

Apple released a paper on MLLM-Guided Image Editing (MGIE), which is Apple’s version of InstructPix2Pix that lets you manipulate images by talking to a multimodal language model.

Here are some examples of what it can do:

You can access the project on GitHub.

For the less tech-savvy of us, there’s a free Hugging Face demo.

I tested it by uploading one of our squirrel images and asking for a bunny instead:

It worked! (Except the bunny decided to keep the squirrel’s tail.)

This was with the default settings and no attempts to optimize the output further.

I’m impressed by how it kept many of the details intact, even if the colors appear somewhat washed out.

Give it a try and see if MGIE works for you!

4. Stable Video Diffusion 1.1

Stable Video Diffusion first came out in November last year.

Now, Stability AI CTO Tom Mason announced the new and improved SVD 1.1, “optimizing the quality of outputs from the original model to achieve better motion and more consistent generation.”

Here’s a side-by-side comparison:

As far as I can tell, SVD 1.1 is clearly better in some cases but not as convincing in others.

You can download the model on Hugging Face.

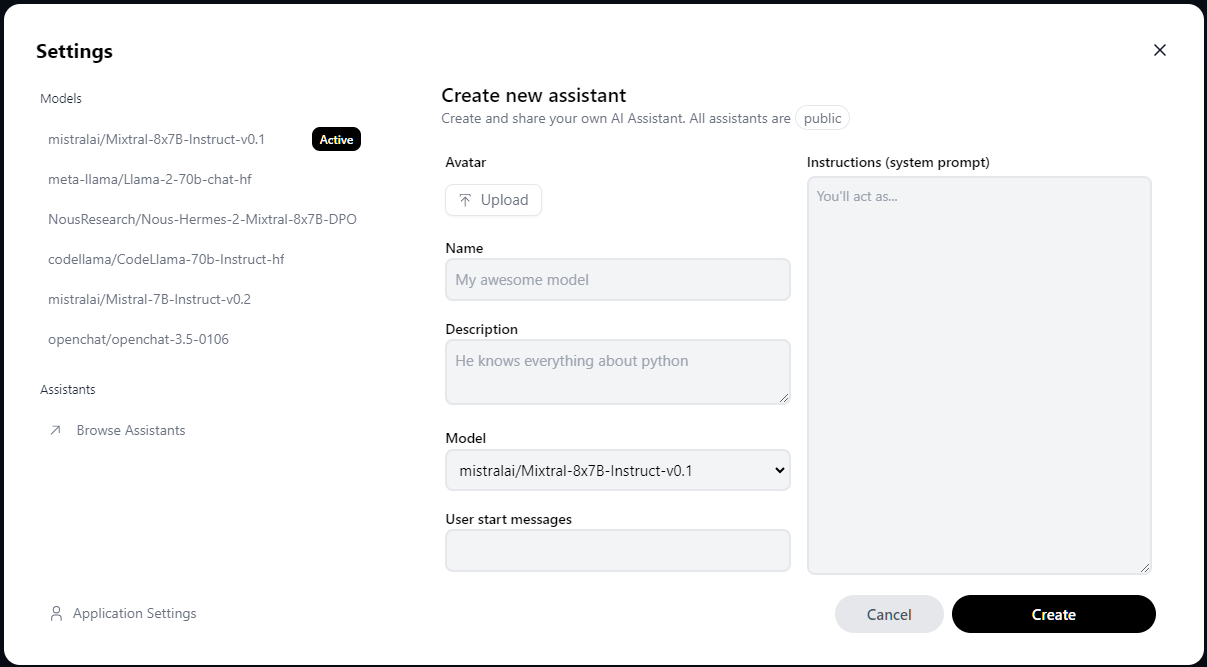

5. Hugging Face lets you build free AI assistants

Hugging Face is now offering a poor man’s version of the GPT Store.

It’s called “Assistants,” and anyone can create a new custom chatbot by picking one of several available open-source models:

Unlike Custom GPTs, you can’t upload documents for Assistants to use or integrate them with third-party apps via APIs.

But it’s a robust free offering, so take it for a spin!

6. MetaVoice’s new text-to-speech model

MetaVoice has released MetaVoice-1B, a text-to-speech model capable of zero-shot voice cloning from just 30 seconds of input audio (for American and British voices).

I tried feeding it the same input clip as for my MyShell OpenVoice experiment.

I think MetaVoice-1B did marginally better than OpenVoice, but it still didn’t quite nail my tone of voice and gave me a somewhat posh accent to boot:

You can grab the code on GitHub or check out the public demo (that’s what I used above).

7. Smaug-72B is the best open-source LLM

Smaug-72B, a new open-source model by Abacus AI, now sits atop the Hugging Face Open LLM Leaderboard, outperforming other models across most LLM benchmarks.

It’s also the first open-source LLM to reach an average score above 80.

8. BUD-E voice assistant can chat in real time

Nonprofit LAION just released a new voice assistant called BUD-E.

According to LAION, BUD-E can respond in real time, handle interruptions and thinking pauses, and keep track of long conversations.

Here’s a demo:

You can grab the code on GitHub.

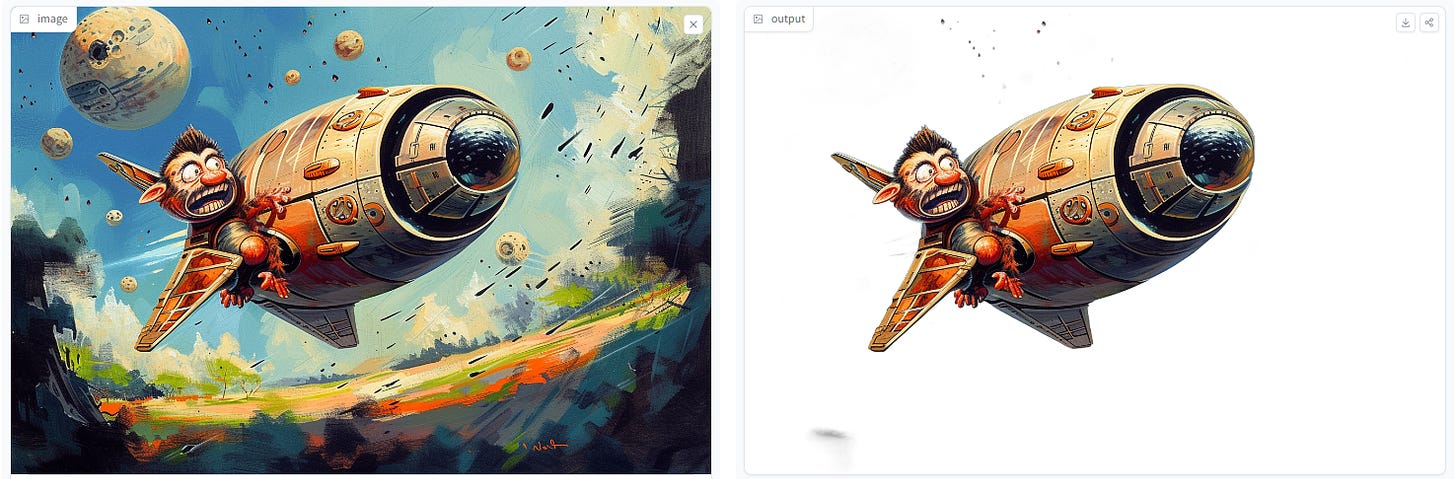

9. Background removal by BRIA AI

BRIA AI released a background removal tool with the catchy name RMBG v1.4.

You can use it for free on Hugging Face.

It worked pretty well for me:


See if it does the trick for you as well.

🤦‍♂️ 10. AI fail of the week

Before this final AI Jest Daily cartoon, there was this horror:


