10X AI (Issue #32): Midjourney Alpha, Google Imagen 2, and a Klingon Santa

PLUS: Mixtral 8x7B, Microsoft Phi-2, Meta Audiobox, Google MusicFX, Runway text-to-speech, Stability AI Zero123, and selecting text to discuss in ChatGPT.

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at beginner-focused AI news, tools, and tips.

This is the last 10X AI issue of 2023.

The next two Sundays are Christmas and New Year’s Eve, and we all deserve a bit of time with the family, away from AI.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments.

1. Midjourney is soon on its way out of Discord

It begins!

The new Midjourney website is no longer just for browsing images.

Power users who have generated more than 10K images in Midjourney can now create new ones directly on alpha.midjourney.com.

And because I’m a psychopath with more than 15K images…

…I officially have access!

Here’s how on-site image generation looks:

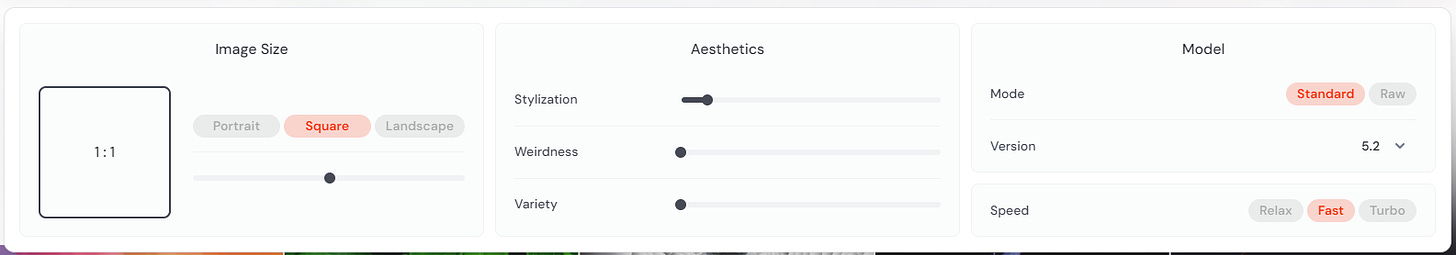

You can still manually add any parameters to your prompt (e.g. “--ar” for aspect ratio). But you no longer have to: Clicking on the right-hand “Options” icon brings up a menu where you can use sliders and buttons to make your selections:

For individual images, you can access many of the same options as on Discord:

But some advanced features like Vary (Region) and /blend aren’t yet available.

The plan is to test the Alpha site on hapless guinea pigs (ahem, people like me), then gradually roll it out to those with fewer generated images, and eventually to everyone.

This should finally make Midjourney accessible to a broader, Discord-averse audience.

Let’s hope this happens soon!

2. Google’s Imagen 2 is out (for Vertex AI customers)

Google is releasing the next generation of its text-to-image model, Imagen 2. At the moment, Imagen 2 is only available to “Vertex AI customers on the allowlist,” so most of us won’t get to enjoy it just yet.

But damn, does it look incredible:

From prompt adherence to text rendering to overall composition and image quality, this appears to be state of the art.

As I said in my Thursday article, the first version of Imagen was already impressive, especially when it came to rendering text. (Try it in SGE by typing “draw a picture of [prompt]” to see for yourself.)

By an uncanny coincidence, Google showcased the model using an “Ice cream shop logo” prompt, similar to the one from my post.

There’s no word on when Imagen 2 will have a wider public release, but I can’t wait to take it for a spin. If you happen to have access through Vertex AI, I’d love to hear your opinion about the model.

3. Mixtral 8x7B outperforms GPT-3.5

French company Mistral AI just released Mixtral 8x7B.

It’s a Voltron of smaller models, or a “sparse mixture of experts” (SMoE) if you want to ruin my giant robot analogy.
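For the curious, here's a minimal Python sketch of how a sparse mixture of experts routes a single token. It assumes the setup Mistral AI describes for Mixtral (8 experts per layer, with a router picking 2 of them for each token and adding up their outputs). The experts and the router below are tiny random stand-ins made up for illustration, not Mixtral's real weights or code.

```python
import math
import random

NUM_EXPERTS = 8  # Mixtral uses 8 experts per layer
TOP_K = 2        # each token is sent to 2 of them
DIM = 4          # toy hidden size (real models use thousands)


def softmax(xs):
    """Turn raw scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed):
    """Hypothetical toy 'expert': a random linear map standing in for a feed-forward block."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


EXPERTS = [make_expert(i) for i in range(NUM_EXPERTS)]

# Toy router: one score vector per expert (random, for illustration only).
router_rng = random.Random(42)
ROUTER = [[router_rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]


def moe_layer(x):
    # 1. The router scores every expert for this token.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in ROUTER]
    # 2. Keep only the TOP_K highest-scoring experts.
    top = sorted(range(NUM_EXPERTS), key=lambda i: logits[i], reverse=True)[:TOP_K]
    # 3. Softmax over just those scores to get mixing weights.
    gates = softmax([logits[i] for i in top])
    # 4. Run only the chosen experts and add up their weighted outputs.
    out = [0.0] * DIM
    for gate, i in zip(gates, top):
        y = EXPERTS[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top, gates


token = [0.5, -1.0, 0.25, 2.0]
output, chosen, weights = moe_layer(token)
print("experts used:", chosen)
print("gate weights:", [round(g, 3) for g in weights])
```

The key idea: only 2 of the 8 experts do any work for a given token, so the model runs much faster than a dense model that would push every token through all of its parameters.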

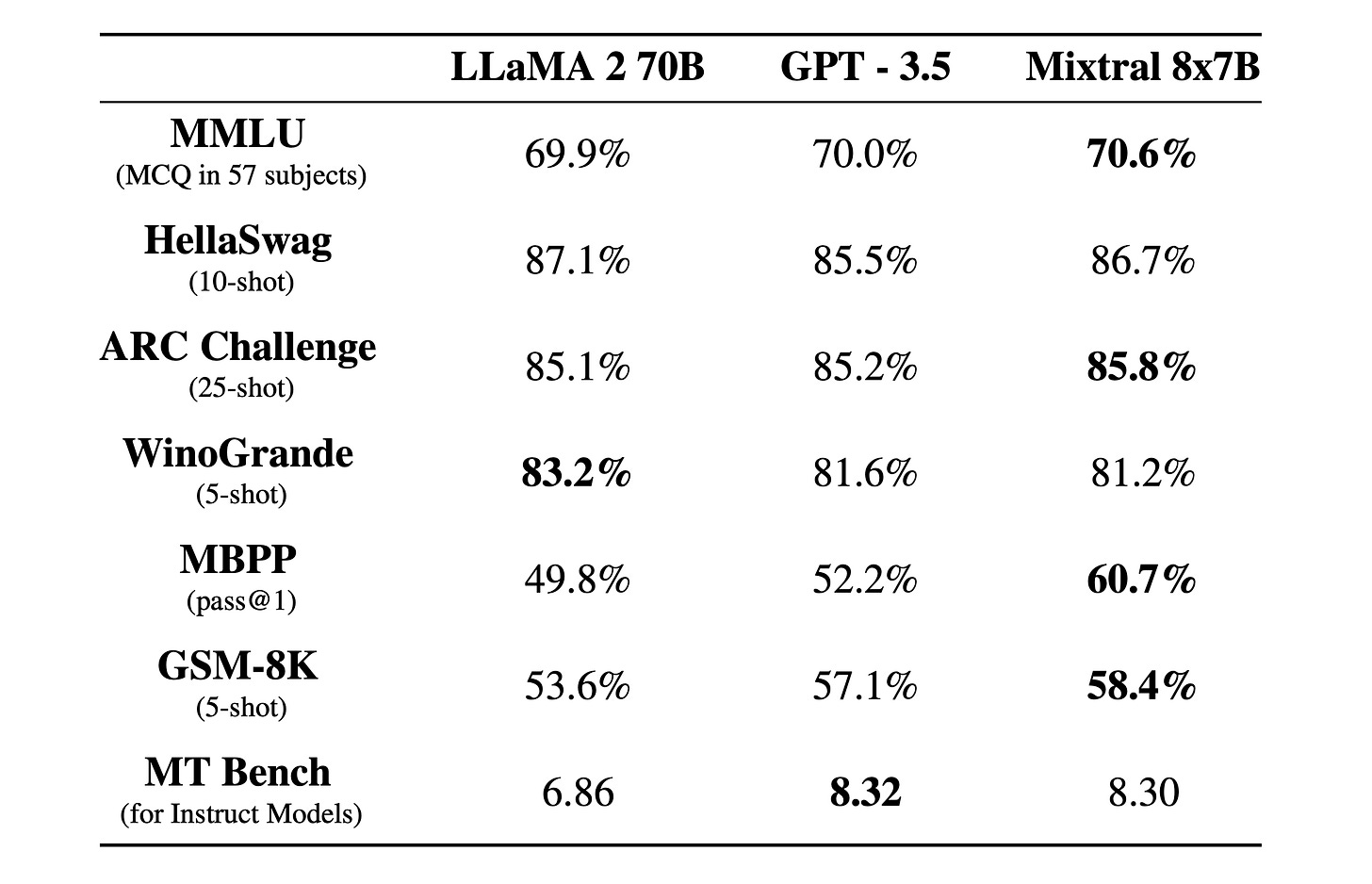

Mixtral 8x7B matches or beats Llama 2 (70B) and GPT-3.5 on most measured LLM benchmarks:

It’s fast, multilingual, great at coding, and can whip up a mean crème brûlée if you ask it to (citation needed).

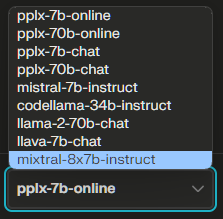

You can try chatting to Mixtral 8x7B for free over at Perplexity Labs.

Just select it from the dropdown:

Enjoy!

4. Microsoft’s Phi-2 is tiny but mighty

Microsoft released Phi-1.5 back in September.

Now we have the next version: Phi-2.

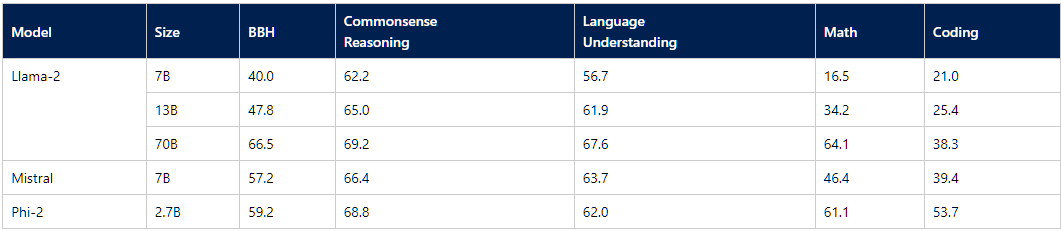

It’s a small model with only 2.7 billion parameters, but it can dance with the big boys like the much larger Llama 2 (70B):

Phi-2 is almost on par with Llama 2 (70B) on every benchmark and well ahead when it comes to coding. It can also beat Mistral 7B, the smaller Mistral AI model whose architecture the Mixtral Voltron above builds on.

There’s no public demo, but the model can be accessed on Hugging Face.

5. Audiobox from Meta AI

Audiobox is Meta’s new audio generation model.

It can turn voice and text input into sound effects or speech.

The free demo gives you access to six features:

Your Voice: Record a voice sample, then turn any text into speech that sounds like you.

Described Voices: Input your text, then describe the characteristics of the voice that should speak it.

Restyled Voices: Upload a voice sample and tweak it using text prompts.

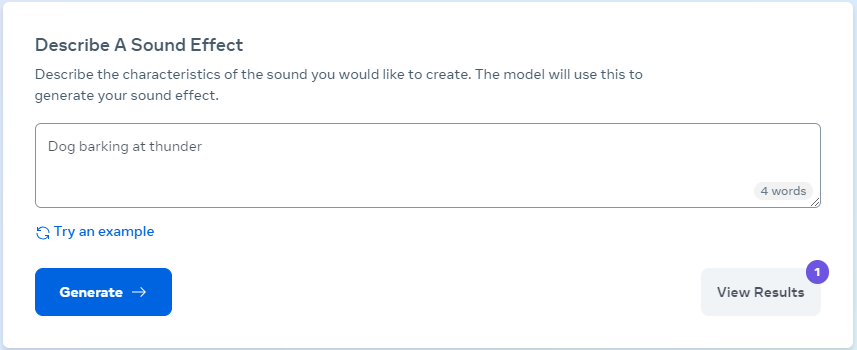

Sound Effects: Describe a sound to produce.

Magic Eraser: Remove noise from voice recordings.

Sound Infilling: Insert sound effects into existing audio.

I asked for a clip of a dog barking at thunder using the Sound Effects feature:

Here’s what I got:

Not bad at all.

If you’re feeling especially ambitious, Meta offers an Audiobox Maker that lets you generate an entire audio story using a combination of the above standalone features.

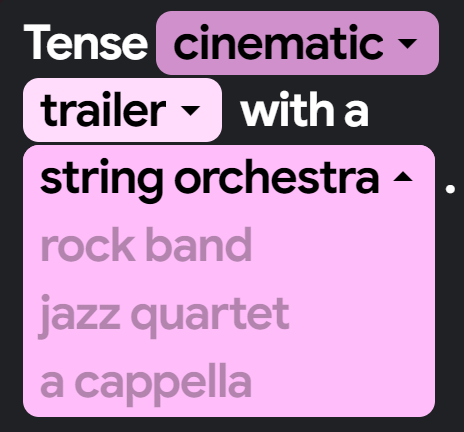

6. Google tests MusicFX for music generation

Remember MusicLM, Google’s music model nobody was allowed to see?

It appears to have been rebranded as MusicFX and is now available inside the AI Test Kitchen (for US residents and VPN users).

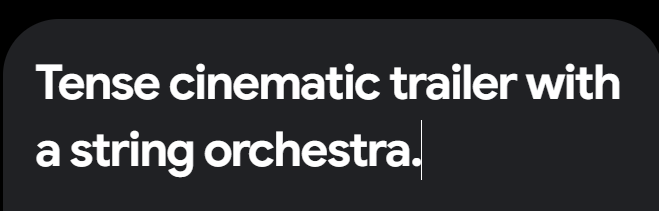

You input a text prompt…

…and after a few moments, you’ll have a 30-second track:

MusicFX then converts relevant parts of your original prompt into handy dropdowns that you can use to switch up the instruments or genre:

We’ve had other new music models come out recently (e.g. Stable Audio), so I’m tempted to do another Battle of the Bands post in the coming year.

7. Stable Zero123 turns images into 3D objects

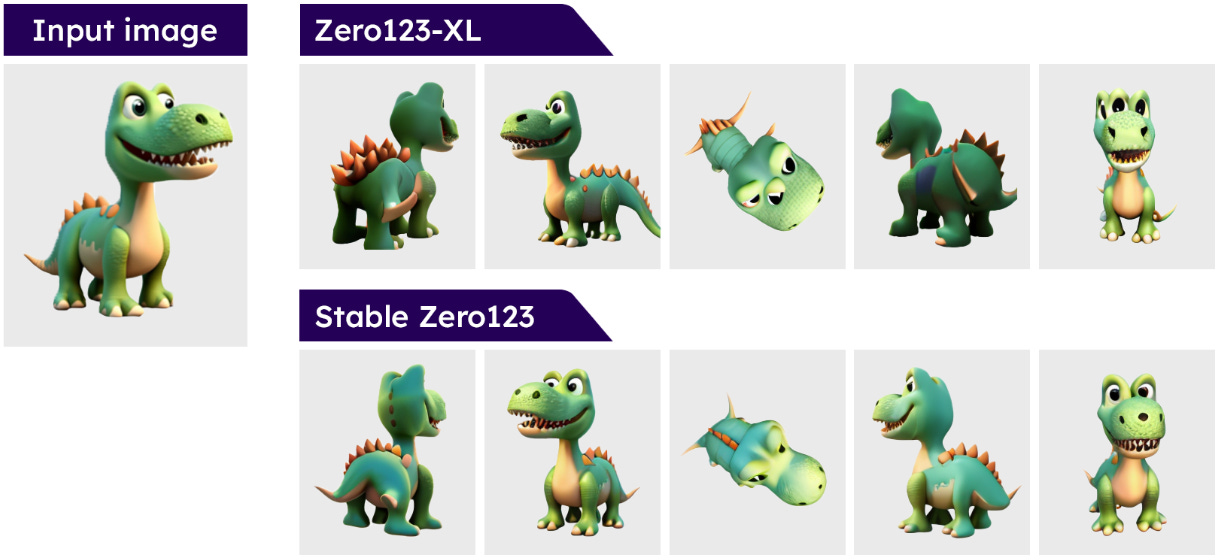

Stability AI has a new model called Stable Zero123 that can turn a single image into a high-quality 3D object.

Built on Zero123-XL, the model easily outperforms its predecessor:

You can download the Stable Zero123 checkpoint from Hugging Face if you know what to do with it.

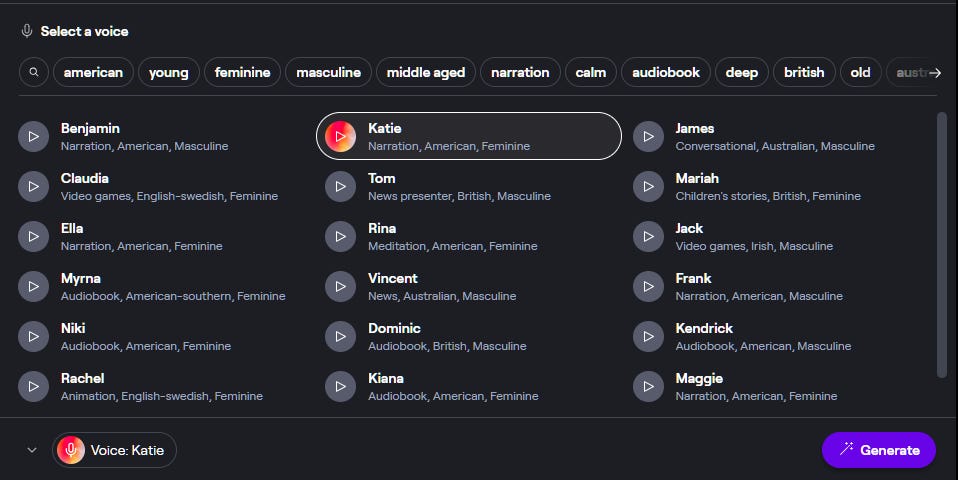

8. Runway adds text-to-speech to its arsenal

Having conquered AI video, Runway is branching out into text-to-speech.

On its ever-expanding list of tools, you’ll now find this option:

Here, you type what you want to say:

Then you select one of the 30 or so voices:

Here’s Katie:

I do wish she sounded more sure of her recommendation, but I’ll take it.

While we’ve already seen this functionality from companies like ElevenLabs, it’s convenient to have text-to-speech available directly within Runway. For example, creators can add a voiceover to their project without leaving the tool.

💡 AI tip

Here’s this week’s tip.

9. Discuss highlighted responses in ChatGPT

I don’t know exactly when, but OpenAI quietly shipped a useful little update to ChatGPT.

You can now highlight a part of a ChatGPT response to focus on it.

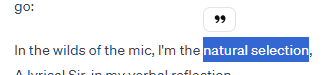

Say we have this smooth rap verse:

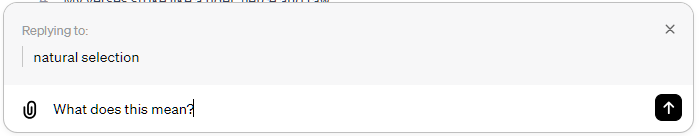

If I don’t understand the “natural selection” wordplay, I can select it:

Notice the little quote icon? If I click it, I get to chat about the highlight:

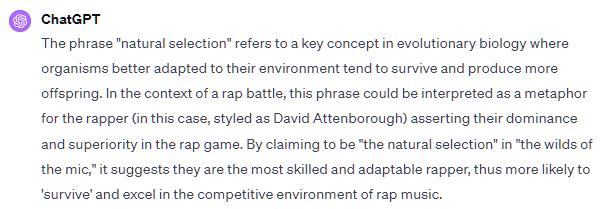

ChatGPT now knows exactly which part I’m asking about and enlightens me:

You can use this to rephrase specific words, expand the context, or do pretty much anything else that involves being more precise with your questions.

🤦‍♂️ 10. AI fail of the week

“Evil Santa” = “Klingon Warrior With A Fake Beard.” Apparently.


