10X AI (Issue #31): Google Gemini, Live AI Drawing, and an "Everybody" Knight

PLUS: Meta's standalone text-to-image site, Microsoft Copilot updates, Playground v2, and getting DALL-E 3 to generate more images per request.

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at beginner-focused AI news, tools, and tips.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments.

1. Google Gemini is finally here…in spirit

It’s happened at last, folks!

Google rose from the dead and officially destroyed OpenAI:

Pack up your bags, we can all go home now.

Except, of course, that’s not quite what happened.

What happened is Google announced its next-generation model called “Gemini.”

It comes in three sizes:

Gemini Nano: The smallest model, optimized for personal devices. If you own a Google Pixel 8 Pro, you should already have it.

Gemini Pro: The standard model, roughly comparable in performance to GPT-3.5 on free ChatGPT accounts. Google’s Bard is now powered by it.

Gemini Ultra: The model that “destroyed” GPT-4. Want to try it? Easy! Grab your time machine and travel to 2024, because it’s not actually out yet.

On paper, Gemini Ultra beats GPT-4 across practically every measured benchmark (even if only marginally in some cases). Google released this sleek demo video to showcase Gemini’s multimodal capabilities:

Unfortunately, the focus quickly shifted away from Gemini’s abilities to the fact that the above video wasn’t filmed in real time.

Google wasn’t outright lying—it simultaneously published a companion post explaining how the video was made—but the public doesn’t take kindly to these sorts of misleading shenanigans.

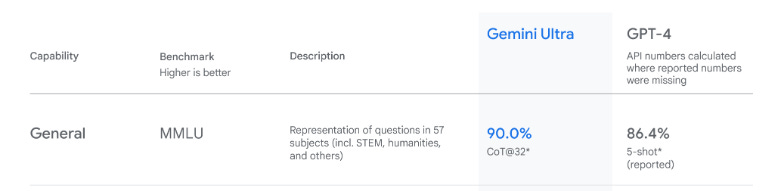

People also pointed out that the comparison for MMLU, one of the main LLM benchmarks, didn’t appear to be done in good faith:

The score for Gemini Ultra is achieved via chain-of-thought prompting while GPT-4 uses a 5-shot approach. So this isn’t a one-to-one comparison.
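If the two prompting styles are new to you, here's a rough sketch of the difference in Python. A "5-shot" prompt shows the model five solved examples before the real question, while a chain-of-thought prompt asks it to reason step by step. The function names, sample questions, and exact wording are my own assumptions for illustration. They are not the prompts used in the actual benchmark runs.

```python
# Illustrative only: the questions and prompt wording are made up for this sketch.

def few_shot_prompt(examples, question):
    """k-shot prompt: show k solved examples, then ask the new question."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def chain_of_thought_prompt(question):
    """Chain-of-thought prompt: ask the model to reason before answering."""
    return f"Q: {question}\nA: Let's think step by step."


# Five solved examples make this a "5-shot" prompt.
EXAMPLES = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
    ("How many days are in a week?", "7"),
    ("What color do blue and yellow make?", "Green"),
    ("What is the opposite of hot?", "Cold"),
]

question = "What is 12 times 3?"
five_shot = few_shot_prompt(EXAMPLES, question)
cot = chain_of_thought_prompt(question)

print(five_shot)
print("-----")
print(cot)
```

The two prompts give the model very different kinds of help, which is why scores obtained with one can't be compared directly to scores obtained with the other.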

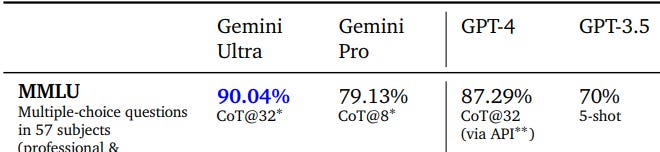

The thing is, Google’s official report does include a like-for-like comparison where GPT-4 is also prompted with chain-of-thought, and Gemini still comes out ahead:

So this discrepancy might just be another unforced error.

Still, it’s not a good look.

Having said that, there are a few things that make Gemini—again, on paper—genuinely promising.

As another writer pointed out in his post, Gemini is natively multimodal. This means that unlike GPT-4, which is trained purely on text and gets its multimodality from add-on modules, Gemini is trained on different modalities from the start. This should make it far more capable of switching effortlessly between many types of input and output. Personally, I find this somewhat overlooked¹ video far more exciting than the “fake” multimodal demo:

In the above, Gemini codes entirely new interfaces on the fly to address the user’s questions. If that’s what we’ll see in practice, it’s a new paradigm in how we interact with LLMs.

Gemini also convincingly beats GPT-4 on all multimodal benchmarks, especially video and audio². Finally, the Gemini-powered AlphaCode 2 model reportedly outperforms 85% of humans on evaluated coding tasks.

The bottom line is: We won’t know how Gemini Ultra truly fares until more of us get to experience it in 2024. For now, all we have are reported scores and flashy demonstrations.

So keep your bags unpacked, people. We’re not going home just yet.

2. Meta’s Emu is now a standalone tool

Meta’s text-to-image model Emu launched over a month ago on Facebook and WhatsApp. But it wasn’t rolled out to everyone. (It never showed up for me.)

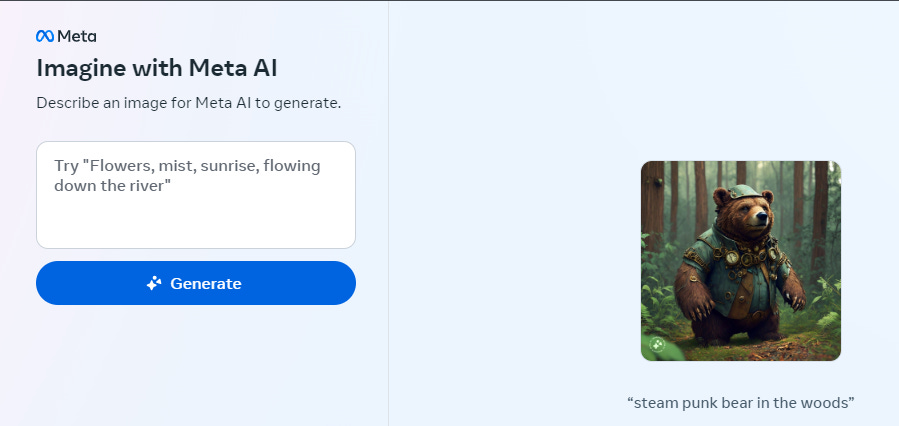

Now, it’s available via a standalone site called Imagine with Meta AI:

It’s completely free, although you’ll need a Meta account.

For now, Imagine is only open to US residents, but I got it working with a bit of VPN magic. It’s pretty great, especially considering the price (zero moneyz):

Imagine only spits out square images and there are no advanced options or customizations, but let’s see how the tool evolves in the future.

3. Microsoft unveils a slew of Copilot upgrades

Microsoft is wrapping up the year with lots of upcoming Copilot features:

GPT-4 Turbo: Copilot will soon run on OpenAI’s latest model.

Code Interpreter: The power of the Code Interpreter is also coming to Copilot.

Inline Compose: Edge users can now pull up Copilot from any website to help write or rewrite text.

Multi-Modal + Search: Asking questions about an uploaded image will now use the combined strength of GPT-4 Vision, Bing image search, and web search to give better responses.

Deep Search: Bing will now be able to automatically convert your vague search query into a more comprehensive one based on your answers, helping you find exactly what you need.

4. Playground v2 is better than SDXL?

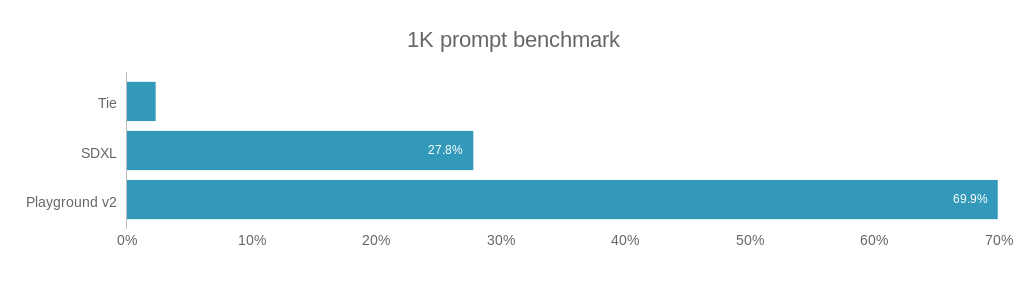

Playground unveiled its latest model: Playground v2.

The company claims that people overwhelmingly pick v2 over Stable Diffusion XL, based on a study with thousands of users:

Here’s my quick test, using the same prompts as the Meta Emu ones above:

You can test it yourself by creating an account at playground.com, which gives you 500 free credits per day.

5. More Meta stuff

In addition to the standalone Emu site, Meta AI made several smaller announcements, including:

Riff with friends: You can create and edit an image in a back-and-forth chat with a friend, watching it evolve together.

AI-curated Reels: You can ask Meta AI to help you automatically find relevant Reels for inspiration or info.

Drafting text: Meta’s assistant can help you draft and edit Facebook messages.

🛠️ AI tools

SDXL Turbo is not the only near-instant AI image maker around.

Live image generation is an exploding field. It’s surreal to think that my very first article was about turning starting images into AI art, but now this entire process can happen in real time.

Here are three live AI drawing tools you can check out for free.

6. Freepik’s Pikaso

Freepik has evolved from a library of free pictures to encompass videos, icons, 3D assets, AI images, and more.

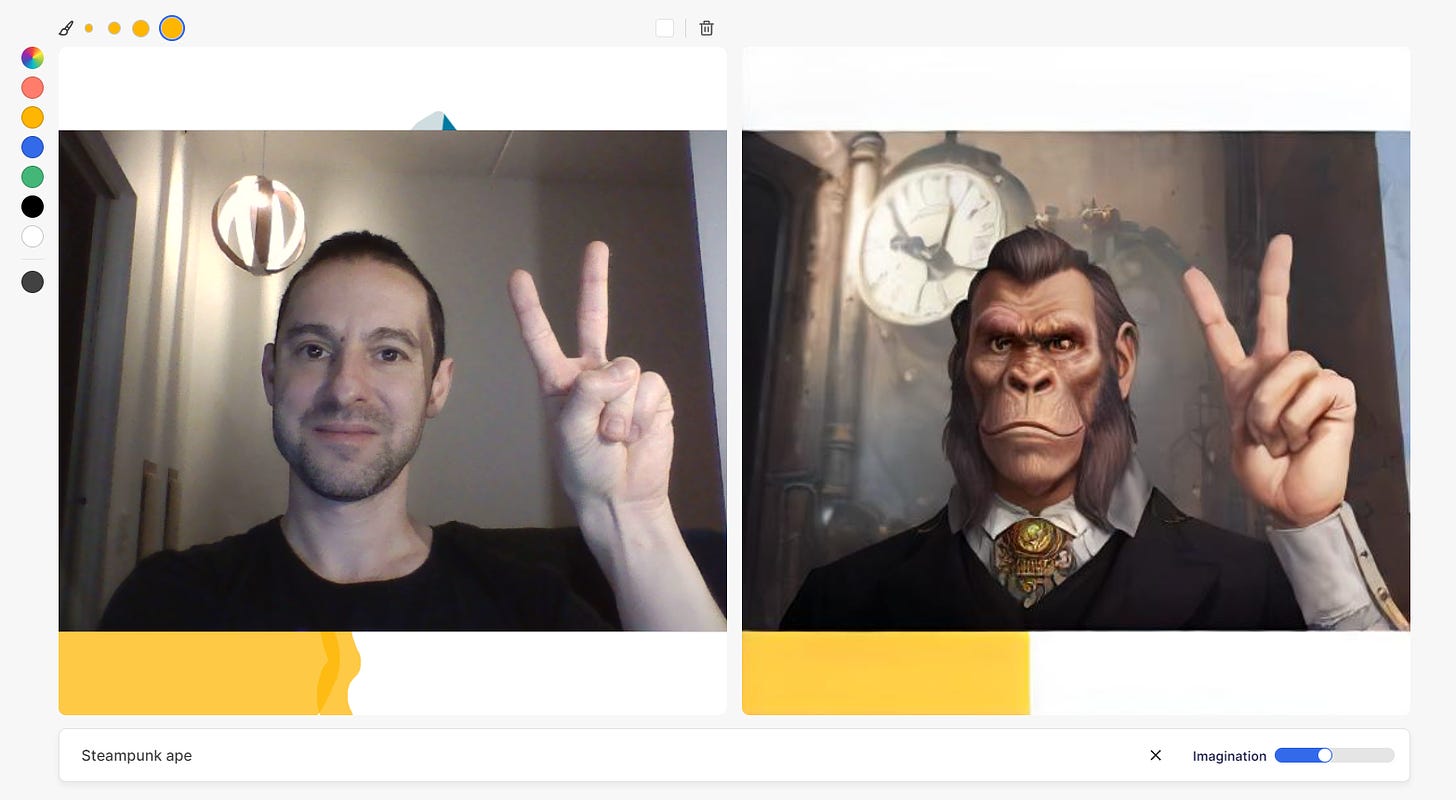

Their latest AI tool, Freepik Pikaso, lets you draw on a canvas and instantly see an AI-generated image that uses your doodles as a starting point:

Here are some additional things you can do:

Adjust the “Imagination” slider to tell AI how closely it should stick to your initial sketch.

“Copy” the right-hand AI image to the left panel and use it as the starting one.

“Re-imagine” the AI image by re-rolling its seed.

Finally, you can use whatever’s on your screen as the input image, as well as your webcam, which is quite freaky:

Use the invite code “BEN”³ when signing up to get a bunch of free daily credits.

7. Leonardo’s Live Canvas

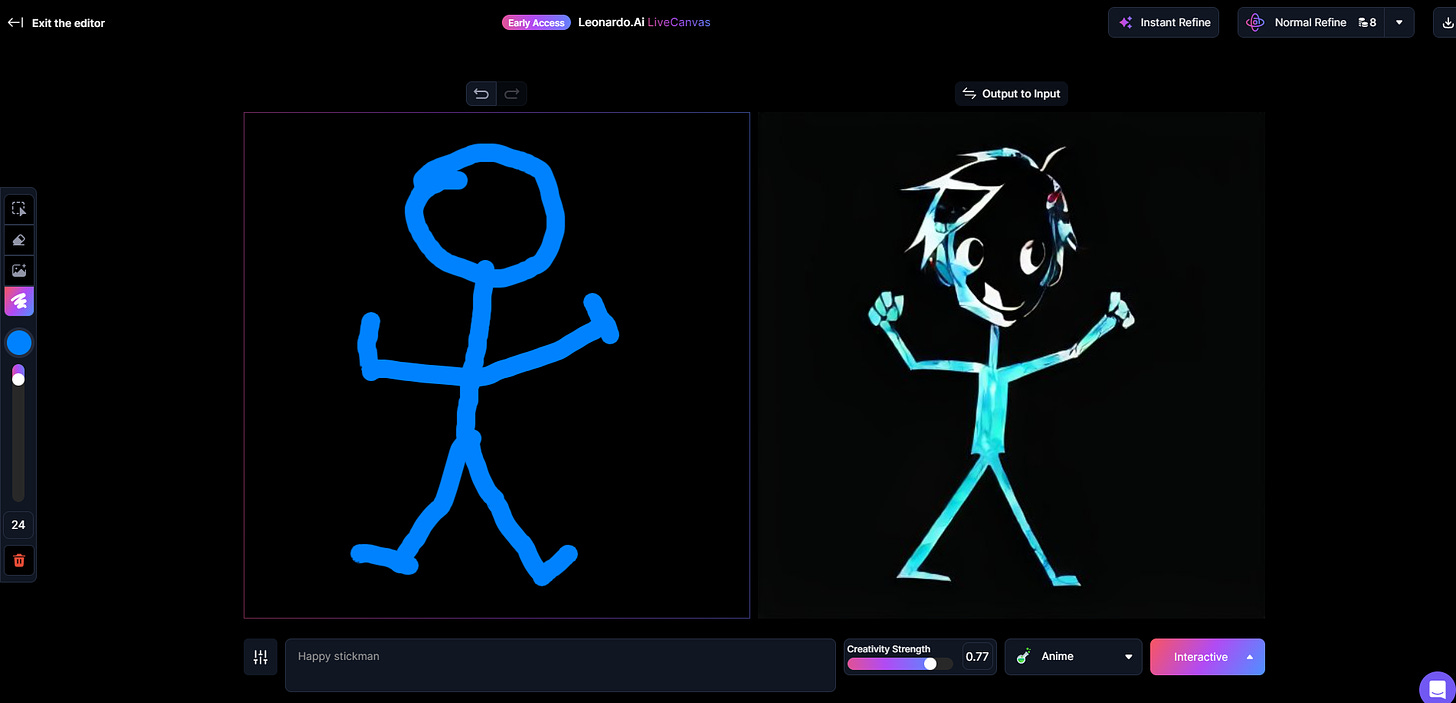

Leonardo Live Canvas is an awesome new addition to the already excellent Leonardo site. The concept and the layout are very similar to Pikaso:

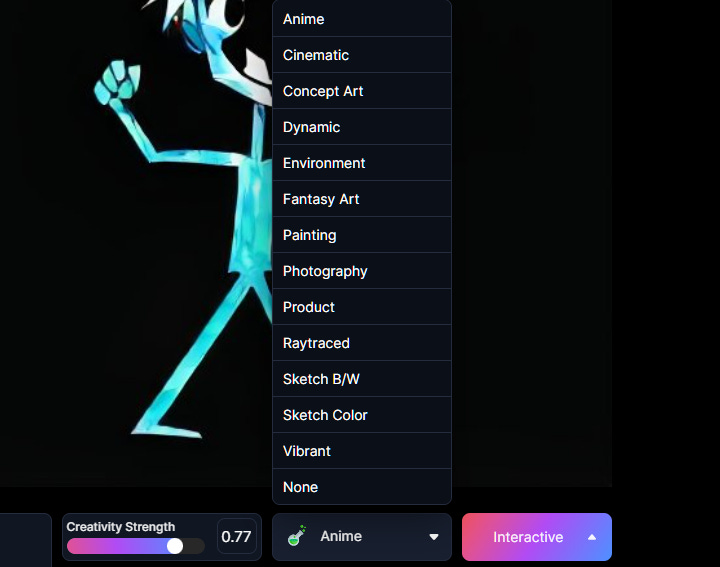

In Leonardo, you can adjust the “strength” of your text prompt to determine how much impact it has. There’s also a dropdown for picking your preferred style:

The generous free plan gives you 150 free generations per day, so go try it!

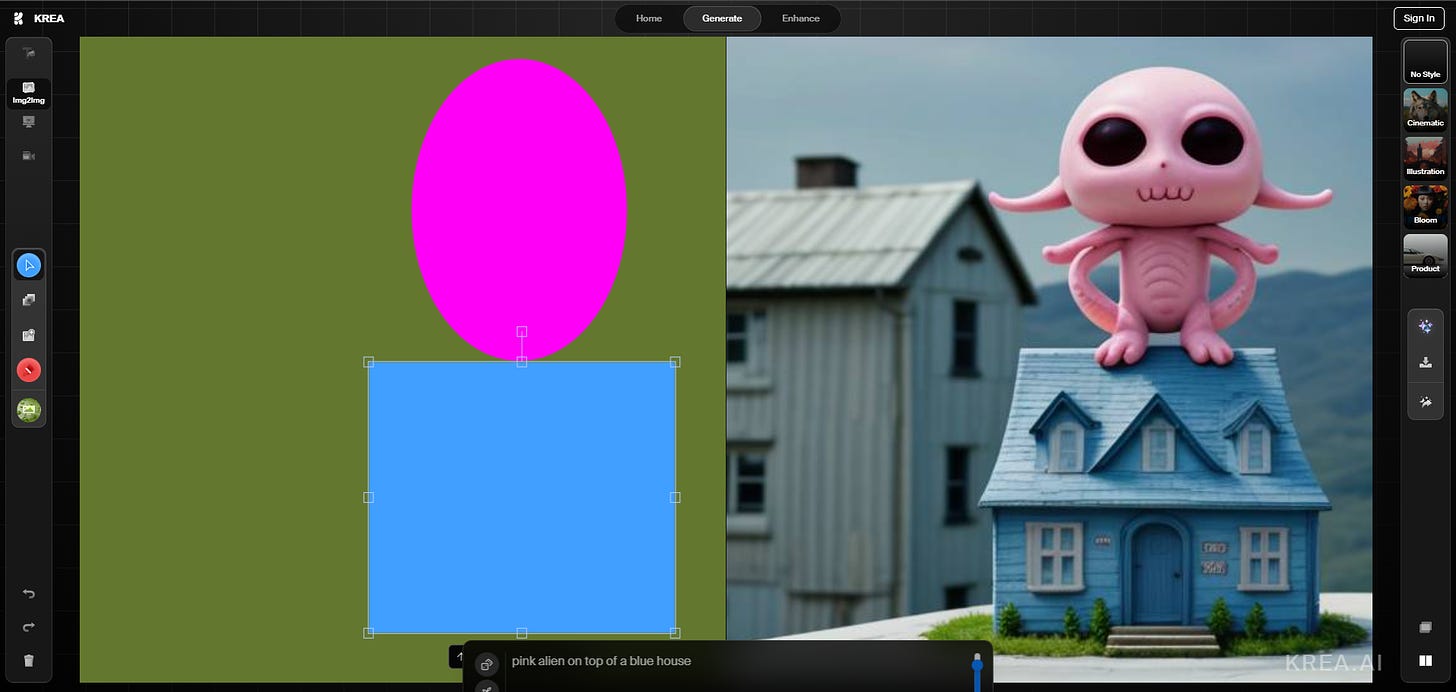

8. KREA’s real-time generation

I wrote about KREA’s “patterns” back in early October.

Now, KREA also added a real-time generation feature, which works largely the same way:

KREA lets you add shapes and images to the canvas, as well as use the screen or webcam as input, just like Pikaso.

You can test it for free before signing up. When you do sign up, use the invite code “KREA-DISCORD-FAM” to skip the waitlist.

💡 AI tip

Here’s this week’s tip.

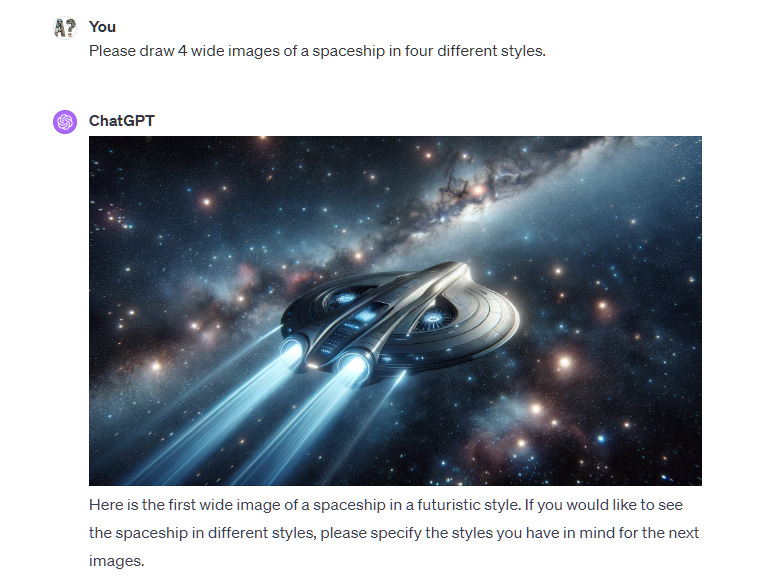

9. Get DALL-E 3 to create multiple images in a row

When DALL-E 3 first came to ChatGPT, it would generate a grid of four differently styled images for every request.

Now, likely due to bandwidth issues, it only creates a single image at a time, even if you ask for more:

But you can circumvent this simply by telling ChatGPT not to wait for your response. Something like this:

Please generate four wide images of a spaceship in a row, and apply a different style of your choice to each. You don't need to wait for my response to generate any of the images.

As you can see, this replicates the original functionality, giving you a wider range of stylistic choices with a single prompt:
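If you end up reusing this trick a lot, you could template the prompt. Here's a minimal Python sketch that only builds the text to paste into ChatGPT. The function name and its parameters are hypothetical, and it doesn't call any API.

```python
def multi_image_prompt(subject, count=4, aspect="wide"):
    """Build a prompt asking ChatGPT for several images without pausing."""
    # Spell out small numbers so the prompt reads naturally.
    number_words = {2: "two", 3: "three", 4: "four"}
    n = number_words.get(count, str(count))
    return (
        f"Please generate {n} {aspect} images of {subject} in a row, "
        "and apply a different style of your choice to each. "
        "You don't need to wait for my response to generate any of the images."
    )


print(multi_image_prompt("a spaceship"))
```

With the defaults, this reproduces the prompt above. Swap in your own subject, count, or aspect as needed.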

Enjoy!

🤦‍♂️ 10. AI fail of the week

I asked “Mootion” for a Riverdancing knight. This…is not that:

Sunday poll time

I’m thinking of creating a deep-dive AI course. Help me figure out which topic to tackle first:

Previous issue of 10X AI:

10X AI (Issue #30): Pika Labs 1.0, SDXL Turbo, Meal Roasts by AI, and Pillow Snow


¹ At the time of writing, it had 146K views compared to 2.2M for the main demo.

² This is all the more impressive when you consider how good OpenAI’s Whisper already is at recognizing speech even with dialects, noise, etc.

³ Courtesy of Ben Tossel of Ben’s Bites fame.
