10X AI (Issue #27): OpenAI's Big Day, One-Click Fun Apps, and an Elaborate Tuba

PLUS: Zapier AI Actions, YouTube's new generative features, Runway's Motion Brush, AI follow-up stories, Grok, and editing specific images with DALL-E 3.

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at beginner-focused AI news, tools, and tips.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments.

1. OpenAI’s many DevDay announcements

OpenAI’s first-ever DevDay was absolutely jam-packed with releases.

Many of these were aimed at developers building on top of OpenAI’s models. For the full picture, read this excellent summary by

But even we “regular” users got exciting toys to play with:

GPT-4 Turbo: ChatGPT Plus is now powered by this latest iteration of GPT-4, which is faster and better¹ and has a whopping 128K-token context window, surpassing the current leader Claude 2’s 100K tokens.

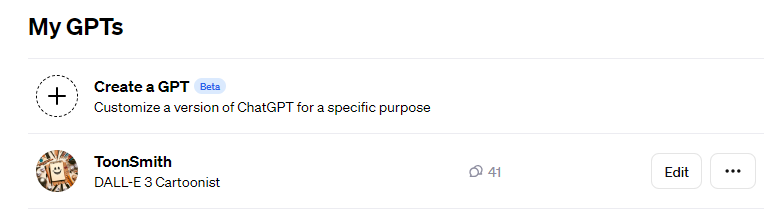

“Create a GPT”: To me, the coolest new thing is the ability for anyone to create custom versions of ChatGPT:

These GPTs can access the same toolkit as ChatGPT Plus. You can also upload proprietary documents for GPTs to work with and connect them to third-party apps to perform specific actions.

The process is as no-code as it gets. You can even start by simply talking to ChatGPT about what you’re trying to build.

So far, I’ve made ToonSmith to help me with my side project. But I have a list of no fewer than 10 other ideas that I’m excited to build with these newfound powers.

2. Zapier launches “AI Actions”

In parallel, Zapier came out with a complementary feature: AI Actions.

These let third-party AI tools (like GPTs) use Zapier’s integrations to interact with 6,000+ apps. Zapier even published a dedicated article about using AI Actions with custom GPTs.

If you’ve been putting off learning Zapier until now, this might be a great time to start. Provided you’re interested in building custom GPTs, that is.

3. YouTube’s experimental AI

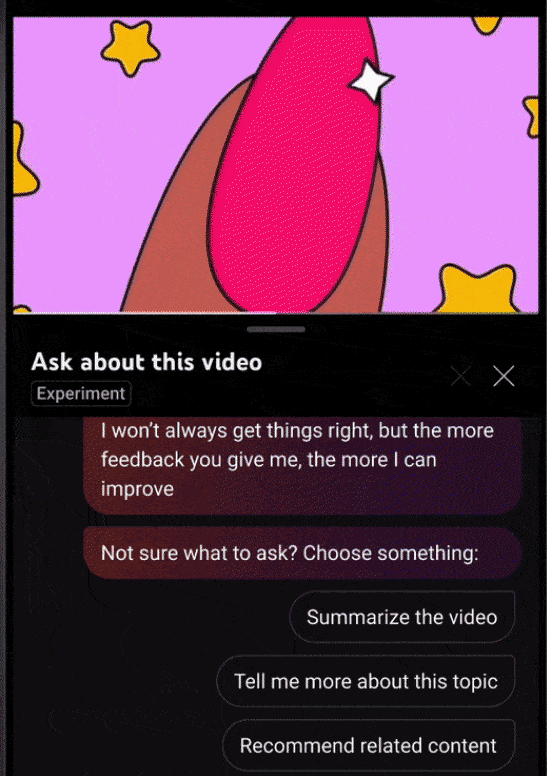

YouTube is starting to test a few additional AI-powered features for a small subset of users.

This includes a “Topics” tab under popular videos that essentially summarizes the comments section, letting people get the gist of the most important discussions:

The second feature lets users ask an AI chatbot about the content of a video they’re watching:

This sounds similar to what’s offered by third-party tools like Transcript.LOL but built directly into the platform.

If you’re a YouTube Premium user, you should be able to opt in to test the above features.

4. Runway’s “Motion Brush”

Runway is on a roll!

Just last week, the platform made major improvements to video quality and coherence. This week, Runway teased an upcoming Motion Brush feature.

Motion Brush lets AI video creators select specific areas to animate, giving them an unprecedented level of control.

Watch this:

Yeah, it looks pretty damn cool!

5. xAI teases a chatbot

xAI is about to enter the game with a chatbot called Grok.

Grok is currently behind GPT-4 and Claude 2 on all measured LLM benchmarks. Its main claim to fame seems to be that it:

Is going to be plugged into X (Twitter), thereby having real-time knowledge of the latest developments.

Has an edgy personality and will answer “spicy” questions that other AI chatbots won’t. (A clear dig at ChatGPT’s “wokeness.”)

Grok should soon become available to a limited subset of US users with a Premium+ subscription to X.

6. Three extra developments

To make room for this week’s tools and tip, I’ve combined three minor news items into a single entry.

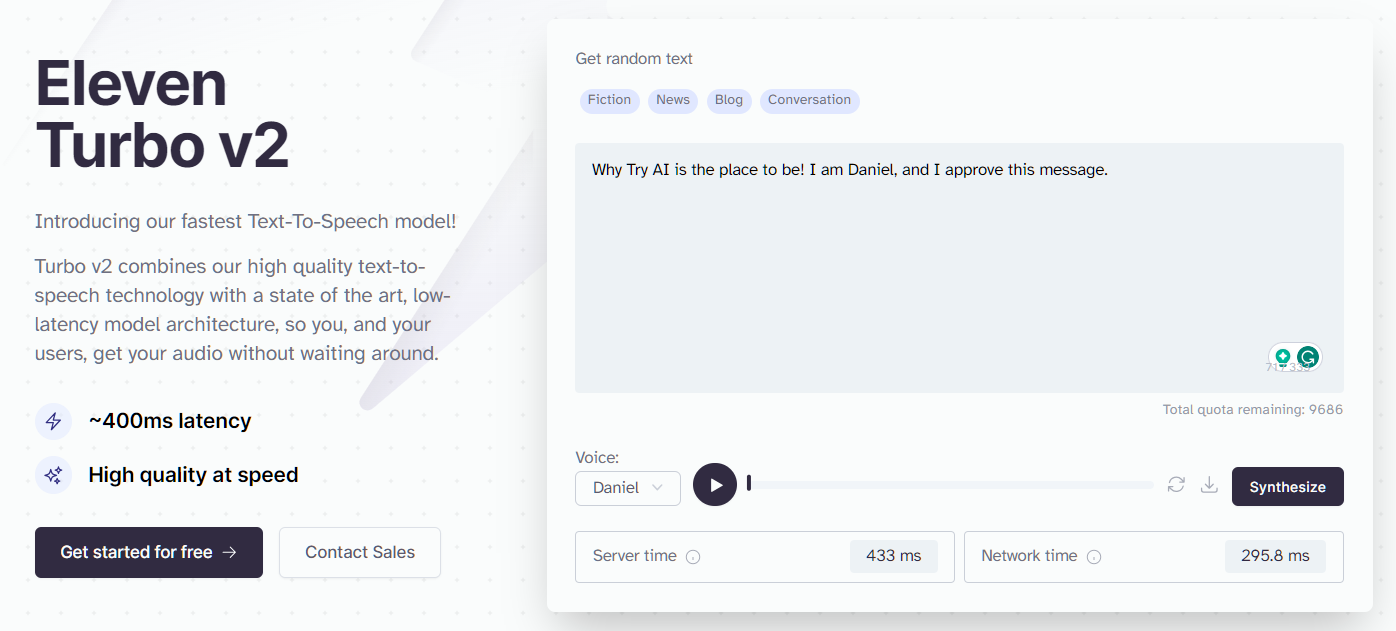

1. A faster ElevenLabs model

ElevenLabs released an even speedier text-to-speech model called Eleven Turbo v2. It doesn’t seem to quite match the insane speeds of PlayHT 2.0 Turbo, but it sure is fast.

My test below took just about 400 ms:

Bonus: ElevenLabs Daniel sounds far more sophisticated than the person-writing-this-Daniel.

You can test Eleven Turbo v2 for yourself.

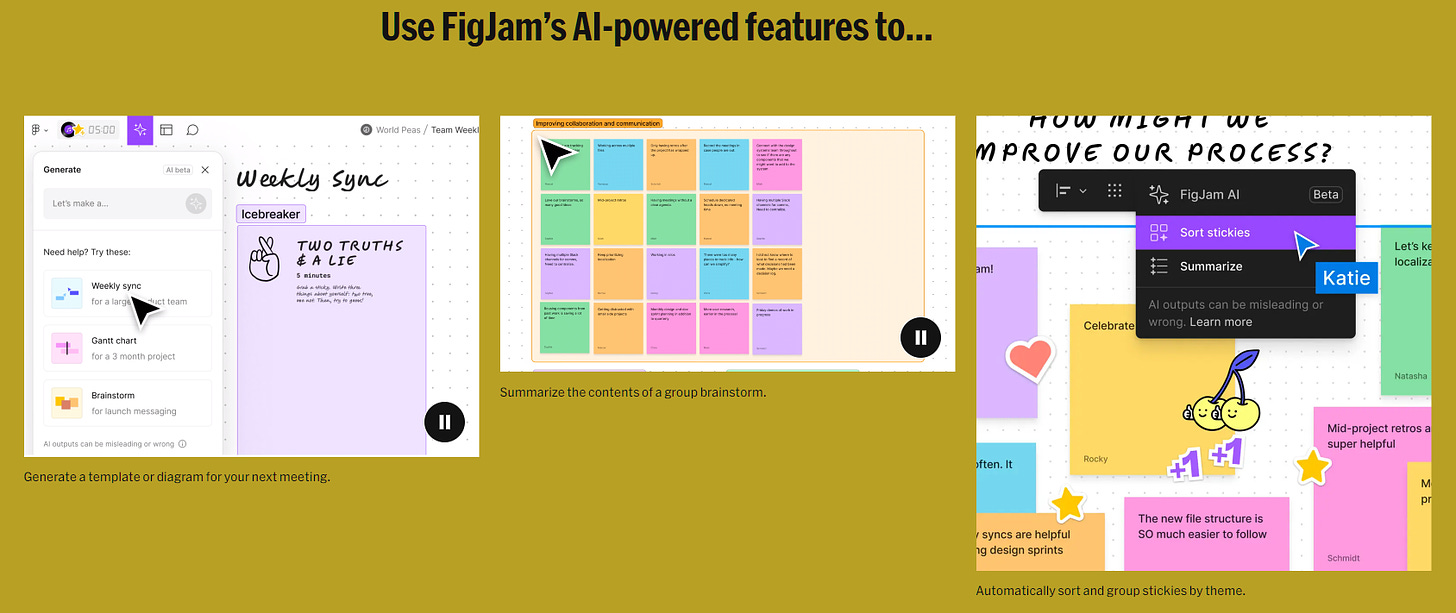

2. FigJam with more AI

I first wrote about Figma’s Jambot assistant in August.

Now, Figma is adding more AI features to their FigJam solution:

AI will help summarize and sort any group insights and automatically generate templates for upcoming group sessions.

3. Google SGE in more countries

Google is expanding its Search Generative Experience to 120 new countries, none of which are Denmark (predictably).

Google is also adding the ability to ask follow-up questions directly from the search results page.

🛠️ AI tools

Today, I showcase two tools that take just a single click to produce something entertaining.

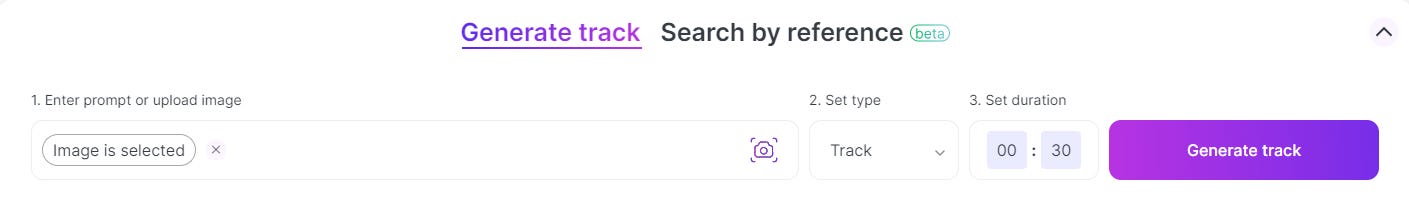

7. Mubert

Mubert makes AI music.

It has many of the same features as Stable Audio and MusicGen, letting users describe their desired track, genre, mood, duration, etc. It even has tools for professional music makers, e.g., for creating loops and mixes.

But the one-click part comes from a fun image-to-music feature:

You simply upload an image of your choice and click “Generate track.” Mubert will use the image as inspiration for its output. I tried this image:

And got this:

The chill, atmospheric vibe feels quite fitting, reflecting the vastness of the ocean on a calm sunny day.

Mubert gives you 25 free tracks per month, which is plenty to play around with. The tracks will all have an audible watermark and other restrictions, though. So if you want to actually use the music for commercial purposes, you’ll want to upgrade to a paid account.

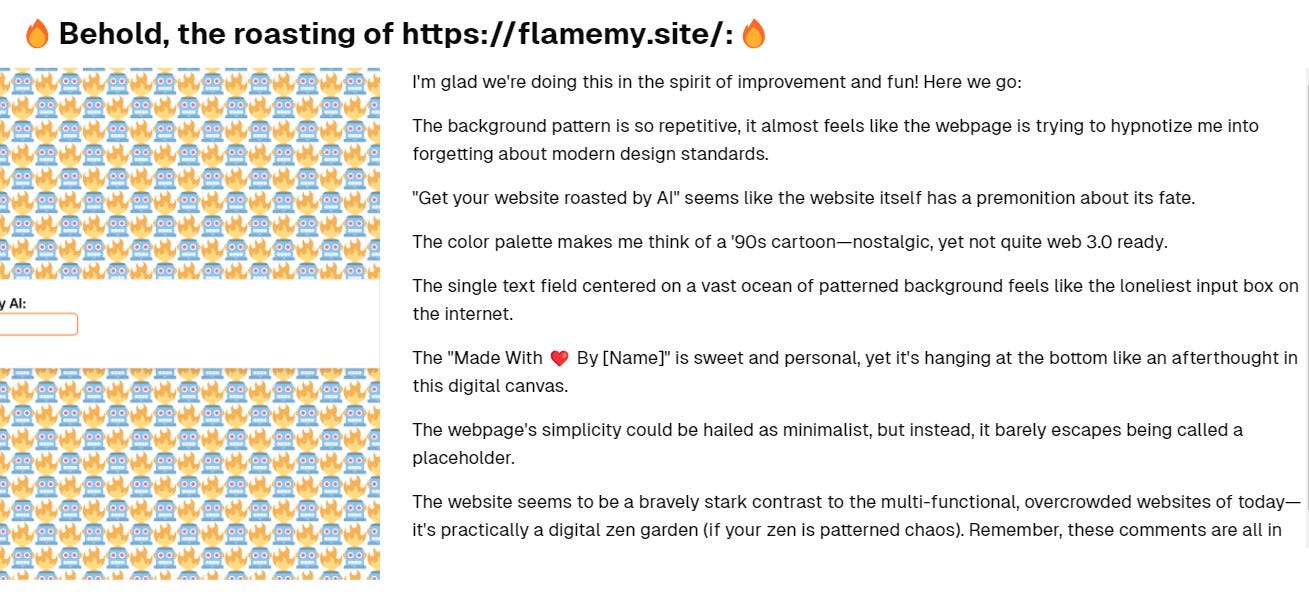

8. Roast A Site

Roast A Site lets you…uh…roast a site.

You simply input the URL of the site you want to roast, and it gets to work:

Being the evil genius that I am, I asked Roast A Site to roast itself:

Even though it’s mostly for shits and giggles, you do end up getting useful insights to improve the site in question.

💡 AI tip

Here’s this week’s tip.

9. Make adjustments to the same image in DALL-E 3

When DALL-E 3 first came to ChatGPT, it always used the same image seed. So it would return the exact same image for the same prompt, no matter how many times you rolled the dice.

Now, DALL-E 3 varies the seed between generations and lets you reuse a specific one.

This means two things:

You can now get different results, even when using the same exact prompt.

You can make minor changes to a specific image you like.

Here’s how that works:

1. Generate your image

Start by making an image in DALL-E 3. Here’s one for a simple prompt:

Cartoon illustration: Funny duck with a red hat

Now let’s say we really like the exact pose and the “okay” sign, but we want a different hat.

2. Get the image details

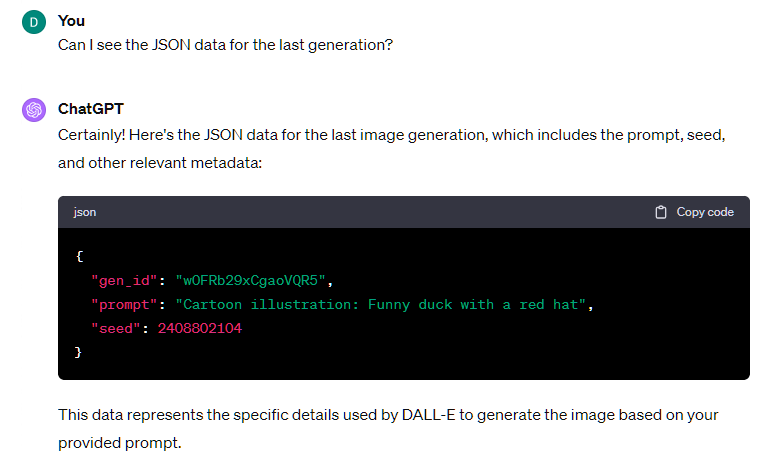

To get the exact seed, gen_id, and prompt used, ask ChatGPT for the JSON data for your image:

You now have everything you need to “lock” your image and make minor tweaks.

3. Ask for adjustments

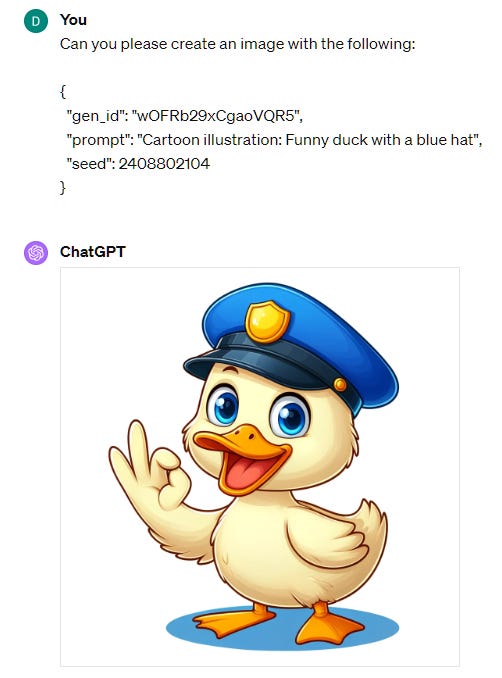

Now you can tell ChatGPT to keep every other parameter fixed while adjusting the prompt slightly:

I repeated the process for a “green” hat and got another very similar image. Here are the three of them side by side:

It’s not a perfect match, because even slightly modifying the text prompt introduces some randomness into the generation. But it’s much closer than what you’d get with a different seed.

Tip: You can try to skip step #2 altogether and simply tell DALL-E 3:

Create a new image with the same seed and the following prompt: “Cartoon illustration: Funny duck with a [chosen color] hat.”

But I find that giving ChatGPT the exact details is more reliable, as it otherwise tends to improvise.
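If you plan to try several variations, the request in step 3 boils down to the JSON details from step 2 with one phrase swapped in the prompt. Here’s a minimal Python sketch of that idea. It assumes you’ve pasted the JSON into a string; the field names (prompt, seed, gen_id) are the ones mentioned above, but the values below are made-up placeholders, and build_adjustment_request is a hypothetical helper, not part of ChatGPT or any OpenAI tool. It only builds the message text; you still paste the result into ChatGPT yourself.

```python
import json

# Placeholder JSON standing in for what ChatGPT returns in step 2.
# Field names follow the tip above; the seed and gen_id values are made up.
IMAGE_DETAILS_JSON = """
{
  "prompt": "Cartoon illustration: Funny duck with a red hat",
  "seed": 1234567890,
  "gen_id": "PLACEHOLDER_GEN_ID"
}
"""


def build_adjustment_request(details: dict, old: str, new: str) -> str:
    """Hypothetical helper: build a chat message that reuses the seed and
    gen_id while swapping one phrase in the original prompt."""
    prompt = details["prompt"]
    if old not in prompt:
        raise ValueError(f"{old!r} does not appear in the prompt")
    new_prompt = prompt.replace(old, new, 1)  # change only the first match
    return (
        "Create a new image, keeping every other parameter fixed:\n"
        f"seed: {details['seed']}\n"
        f"gen_id: {details['gen_id']}\n"
        f'prompt: "{new_prompt}"'
    )


details = json.loads(IMAGE_DETAILS_JSON)
print(build_adjustment_request(details, "red hat", "green hat"))
```

Keeping the seed and gen_id untouched and changing only one phrase mirrors the manual process above: the fewer words you change, the closer the result tends to stay to the original image.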

🤦‍♂️ 10. AI fail of the week

Tuba…by Rube Goldberg.


Previous issue of 10X AI:

10X AI (Issue #26): All-In-One ChatGPT, MJ Style Tuner, and an AI-Style Jump Rope


¹ Several studies have cast doubt on whether GPT-4 Turbo actually outperforms GPT-4.
