10X AI (Issue #26): All-In-One ChatGPT, MJ Style Tuner, and an AI-Style Jump Rope

PLUS: Runway's massive upgrade, Google stuff, Phind's coding LLM, LinkedIn's job hunt AI, Luma Labs 3D, new Perplexity models, and Meta's Emu model

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at beginner-focused AI news, tools, and tips. It’s been a week full of launches, so this is yet another news-only edition.

Let’s get to it.

🗞️ AI news

Here are this week’s AI developments.

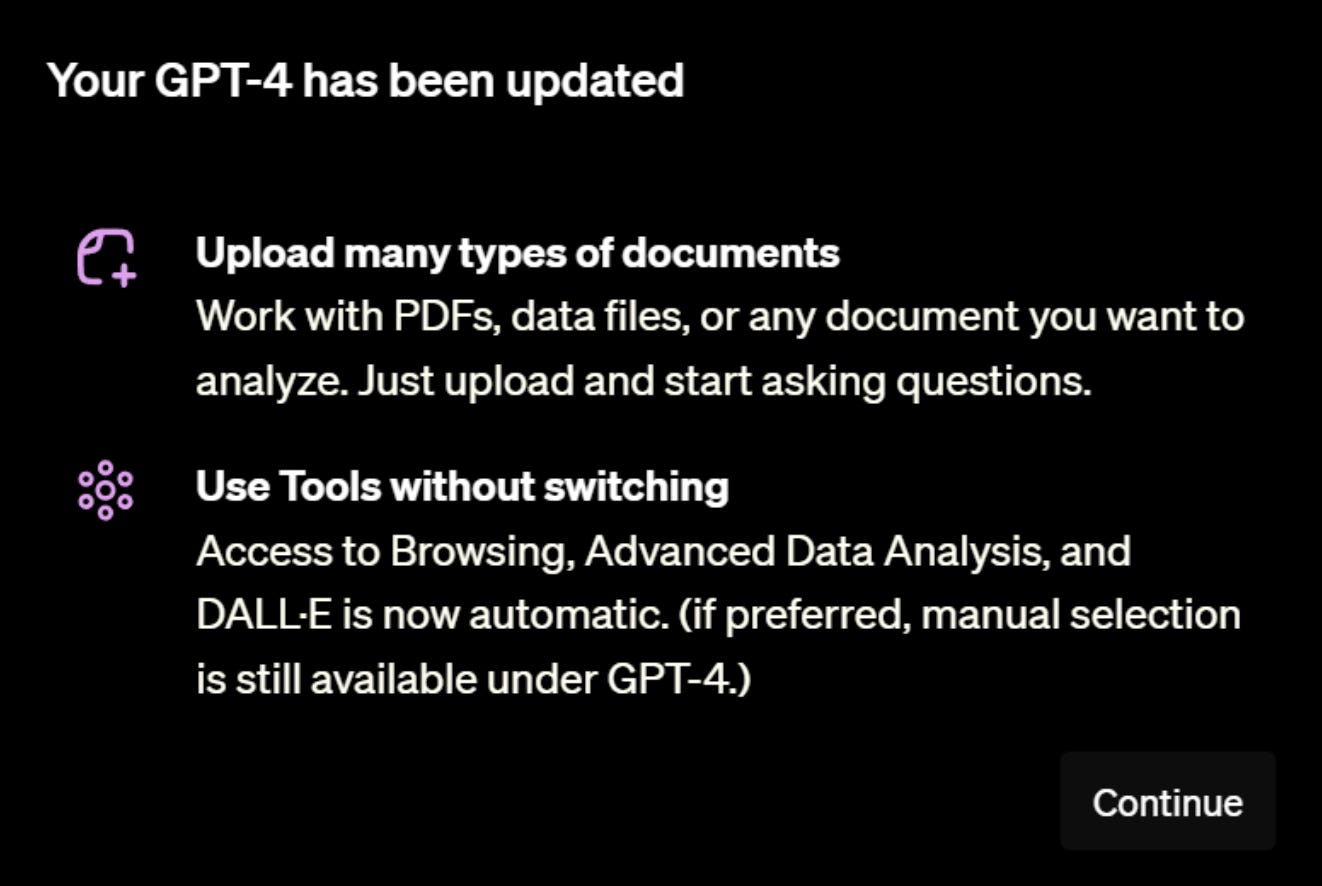

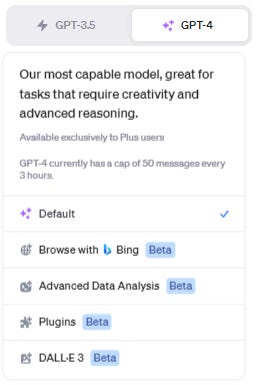

1. ChatGPT Plus consolidates its features

A few weeks ago, I pointed out the inconvenience of ChatGPT Plus tools being disconnected from each other:

I concluded with this:

I expect that once all of these features drop the “Beta” tag, they’ll be rolled into the default GPT-4 model.

Well, it looks like exactly that is slowly beginning to happen. Some users are getting access to what ChatGPT is appropriately calling “GPT-4 (All Tools).”

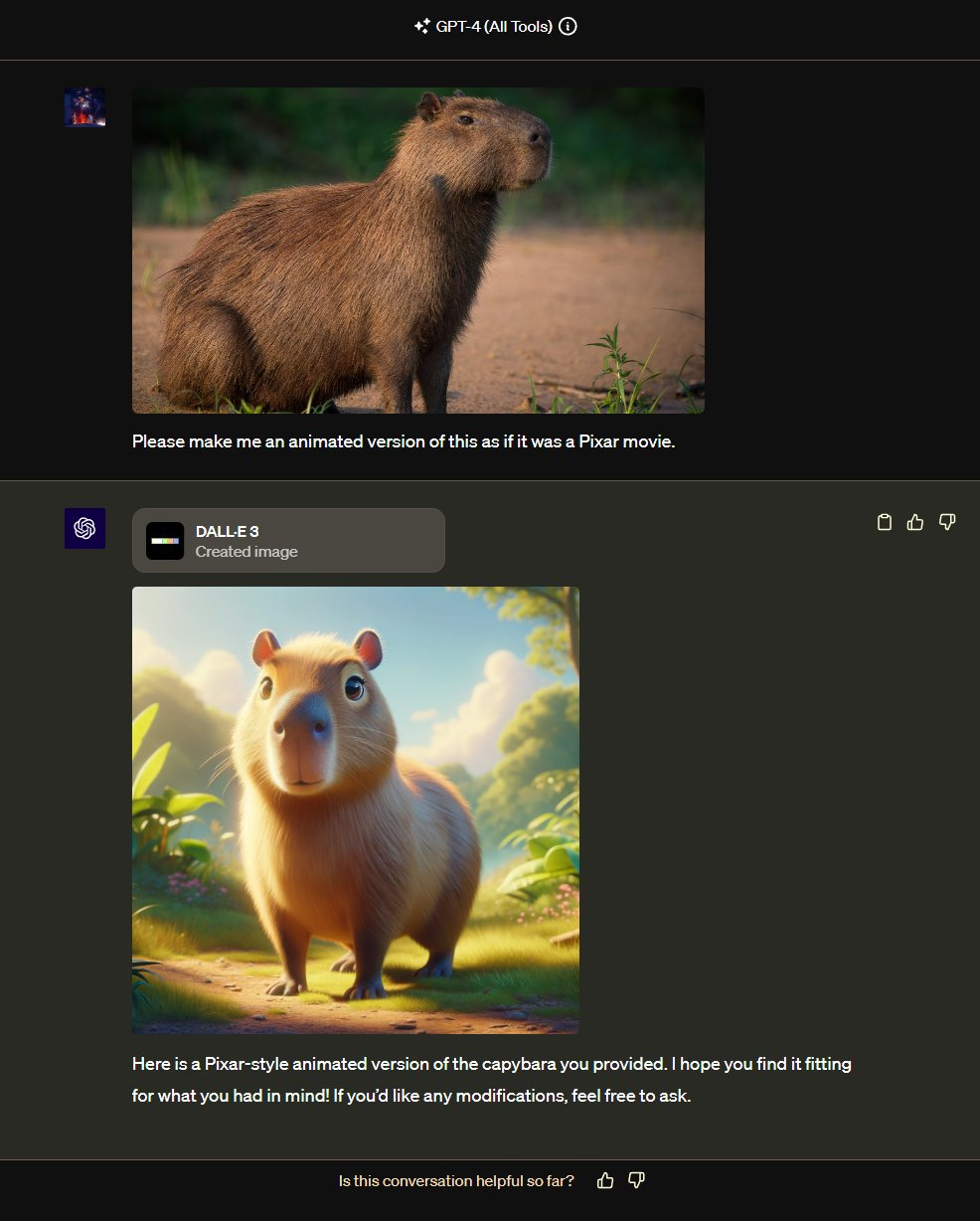

The scenario I described of having GPT-4 Vision “see” an image and immediately feed it to DALL-E 3 to reimagine is now a reality:

I can’t wait to get access myself so I can test it out. If you’re one of the lucky ones to have tried it, I’d love to hear about your experience!

2. Midjourney’s Style Tuner unlocks personalization

Without much fanfare, Midjourney released one of its coolest features to date: the Style Tuner. This lets you “tune” a unique personalized aesthetic to apply to your images.

The Style Tuner calls for a dedicated post. There’s simply too much ground to cover. So that’s what I’ll dive into on Thursday.

For now, here’s an excellent quick intro from Shane McGeehan, who also developed a useful Midjourney Prompter that I covered in an earlier post:

3. Runway approaches cinematic video quality

Runway was already ahead of the pack when it comes to realism, as I showcased in my text-to-video comparison post.

But the company just announced “major improvements to both the fidelity and consistency of video results.” Here’s the kind of output you can expect:

Remember, you get 125 free credits upon signup.

So if you haven’t already, check out Runway’s next-level video generation.

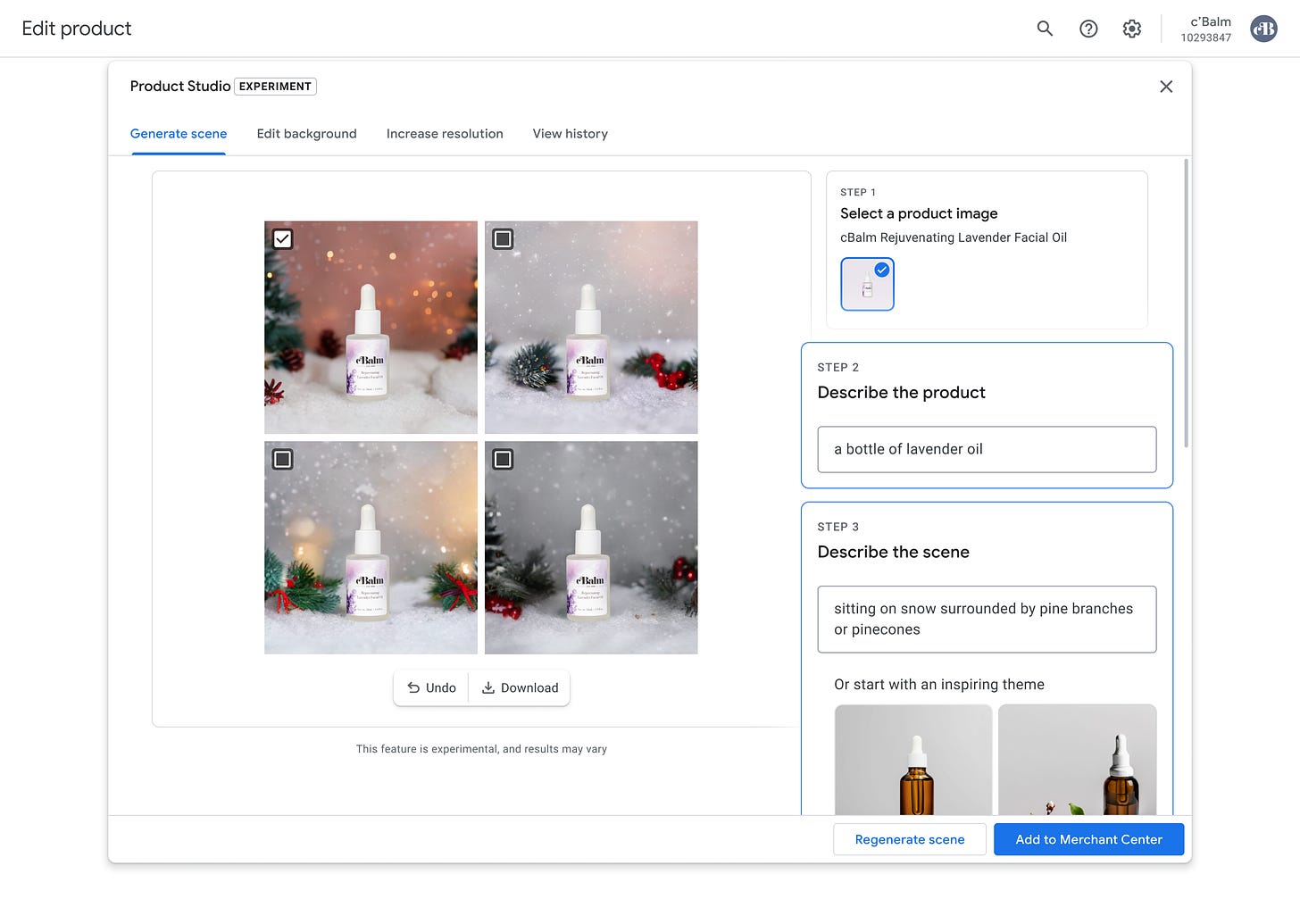

4. More Google releases

First off, Google finally released its “Product Studio” (first announced in May), which is very similar to what Amazon launched just last week.

Product Studio lets merchants and advertisers create brand imagery for their products using AI:

Also, Google added a real-time response feature to Bard, so you can start seeing its answers instantly:

You can toggle the new setting off, but I’m not sure why you’d want to.

5. Phind’s new best-in-class coding model

Phind announced its 7th-generation Phind Model V7, which outperforms GPT-4 on coding tasks, scoring 74.7% on HumanEval (learn more about LLM benchmarks).

It’s also about 5x faster than GPT-4.

And the best part? You can try it for free directly on Phind’s website.

6. LinkedIn’s job hunt AI

LinkedIn is apparently starting to roll out an AI-powered “job seeker coach” to Premium members. It runs on GPT-4 and helps applicants assess and improve their chances when applying for specific jobs.

I’m not a LinkedIn Premium member myself, but if you’ve had any hands-on experience with the new chatbot, let me know!

7. Luma Labs releases a text-to-3D “Genie”

3D generation has been making massive leaps forward this year. Just compare my first mention of it in January to Common Sense Machines’ image-to-3D in July.

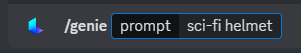

Now, we have a Discord bot called Genie from Luma Labs:

Simply type “/genie” followed by what you’d like to see:

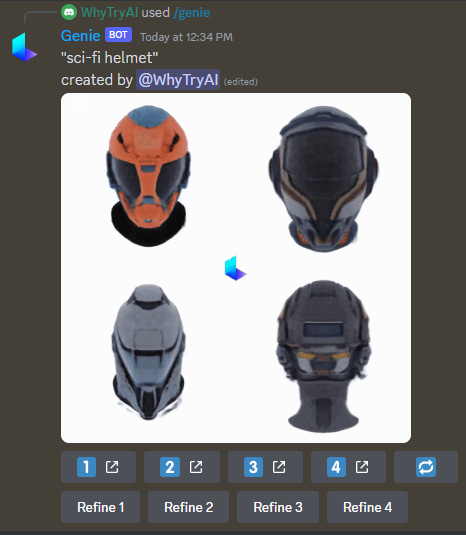

In about 10 seconds, you’ll get 4 different rotating previews of your 3D object:

Then you pick one to refine by clicking the appropriate button:

And within 20 minutes (it took about 10 in my case)…

…you have a detailed, refined version:

You also get a link to view and download the asset for further work. Unfortunately, Luma’s site links currently appear broken, including the advertised lumalabs.ai/genie one.

But you should still be able to use this direct Discord link. It’s 100% free during the research phase.

8. Perplexity’s in-house language models

Perplexity released its own models called pplx-7b-chat and pplx-70b-chat.

The models’ stand-out feature is that they “prioritize intelligence, usefulness, and versatility on an array of tasks, without imposing moral judgments or limitations,” which Perplexity chose to demonstrate with this kindness-murdering comparison against Llama:

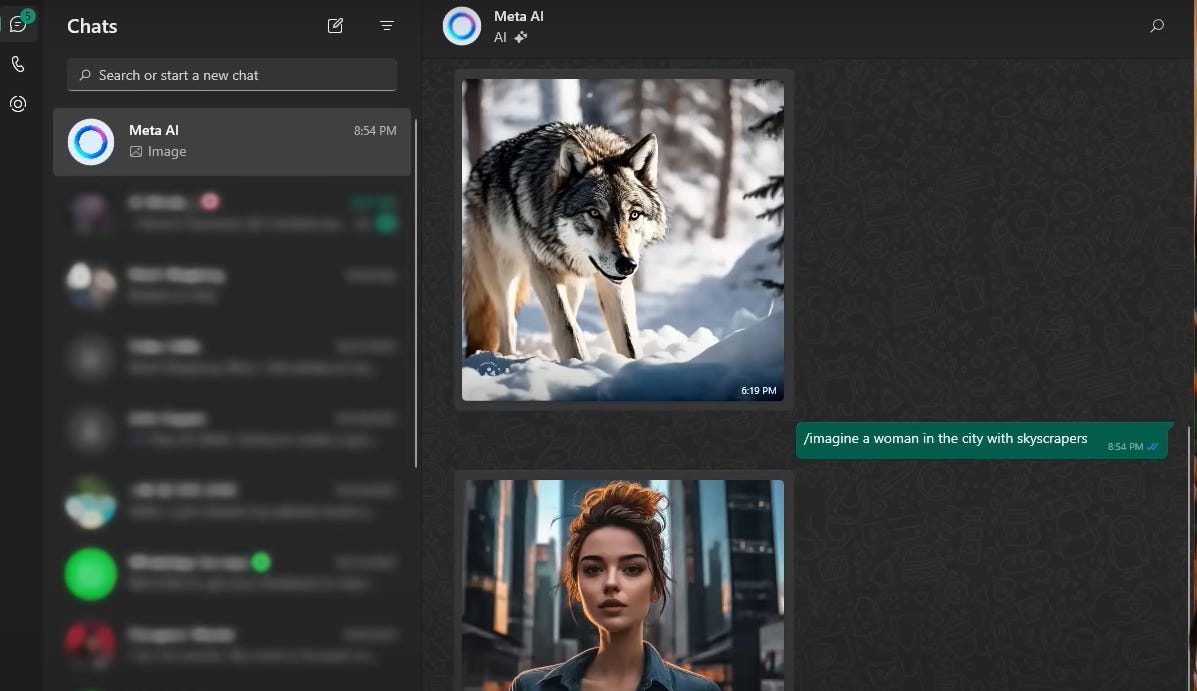

9. Meta’s Emu is here

Meta’s text-to-image model Emu, first announced over a month ago, is apparently starting to roll out to select users.

It should become accessible in your Facebook Messenger or WhatsApp:

Use the Midjourney-inspired /imagine command to request images, and they’ll appear directly in the chat.

This is yet another release I haven’t personally gotten, so if you have any experience with it, I’d love to hear from you.

🤦‍♂️ 10. AI fail of the week

Ah, jump ropes. Exactly as I remember them!
