10X AI (Issue #18): Falcon-180B, Chirp v1, AI Video Tools, and a Trippy Focus Group

PLUS: Zoom's AI Companion, Open Interpreter, Canva's ChatGPT plugin, and spicing up your older Midjourney images with the new Vary (Region) command.

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at beginner-focused AI news, tools, and tips.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments.

1. Falcon-180B nudges out Llama 2

Another week, another benchmark-breaking LLM.

This time, it’s Falcon-180B from the Technology Innovation Institute (TII).

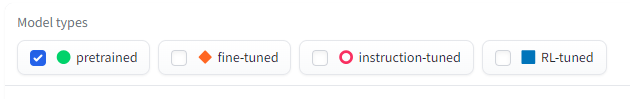

It’s now the largest openly available language model (180 billion parameters) and is the highest-scoring pre-trained LLM on the Hugging Face Open LLM Leaderboard.

Falcon-180B narrowly beats Meta’s recent Llama 2 across most LLM benchmarks.

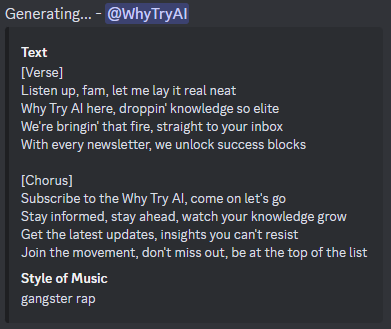

You’ll want to untick “fine-tuned,” “instruction-tuned,” and “RL-tuned” model filters to see the apples-to-apples comparison:

There you go:

Want to know more about the benchmarks in that table?

You might want to revisit my recent post on that exact topic.

And yes, you can chat with Falcon-180B in this free demo on Hugging Face.

2. Suno’s Chirp v1 is here

I’ve been following Suno AI’s music-making bot on Discord for a while, as it seemed promising.

Now, they’ve released v1 of the Chirp Bot.

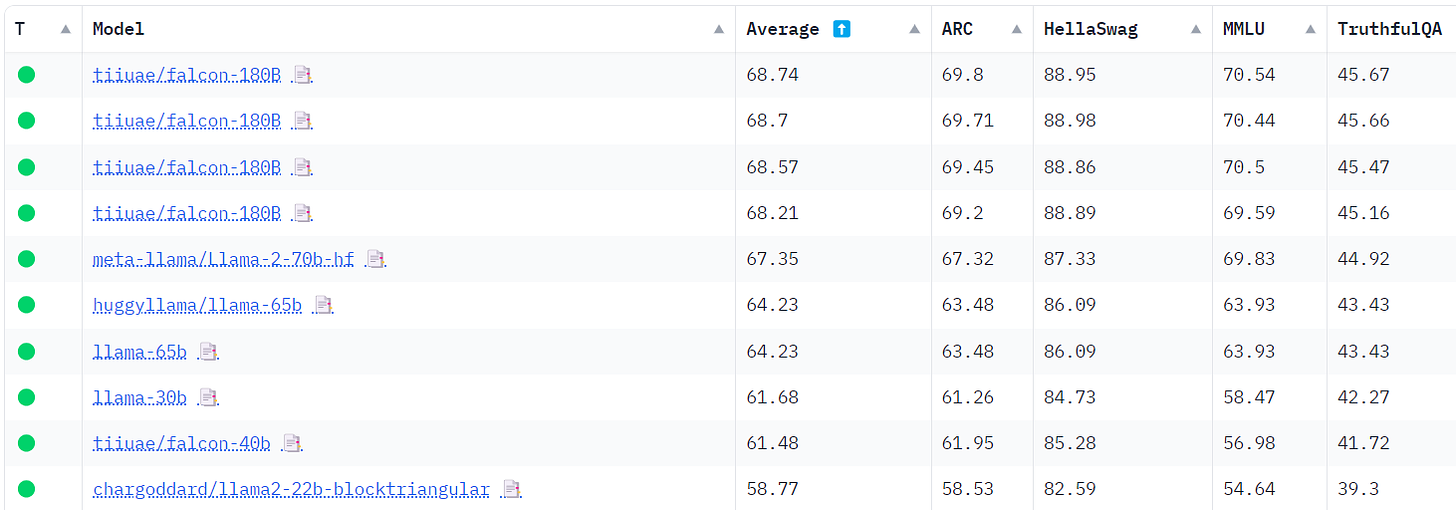

It generates short song clips in 50+ languages and is very simple to use.

You type in the /chirp command on Discord, then fill in the details:

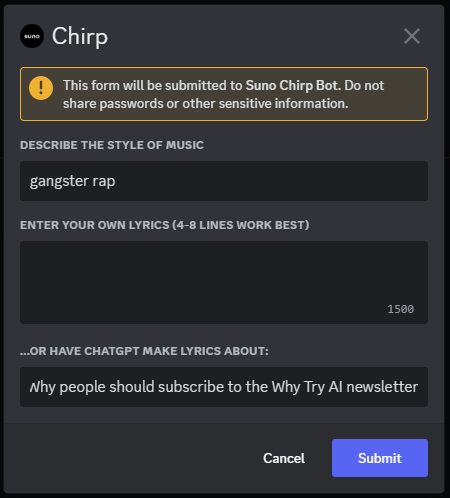

You can paste in your own lyrics or have ChatGPT generate them behind the scenes, as I did above. Shortly after that, Chirp will write out the lyrics:

And a while after that, you’ll get your finished track:

Join the Discord channel right here to try making your own tracks.

3. More generative AI features from Zoom

Not to be outdone by seemingly every other company jumping headfirst into AI, our favorite pandemic-era buddy Zoom is expanding its suite of AI features.

Zoom AI Companion (formerly Zoom IQ) is there to offer real-time transcripts, catch you up on meeting moments you might have missed, and much more:

For now, Zoom’s AI features are only for those on a paid plan, but we’ll see if some of them might trickle down to free users.

4. We just got a “poor man’s” Code Interpreter

In July, I experimented with the Code Interpreter and showcased some of its capabilities. (It’s since been renamed to the more apt “Advanced Data Analysis.”)

But the Code Interpreter / Advanced Data Analysis requires a paid ChatGPT Plus subscription.

You know what doesn’t?

The new Open Interpreter by Killian Lucas.

It’s an open-source implementation of the Code Interpreter that sits on your computer and can do all sorts of cool things, like interacting with your system, analyzing documents, creating functioning apps, and more:

If you know what you’re doing, you can install the code and start using it right away.

You can also check out this interactive Google Colab demo.

Or you can sign up for early access to the upcoming desktop app.

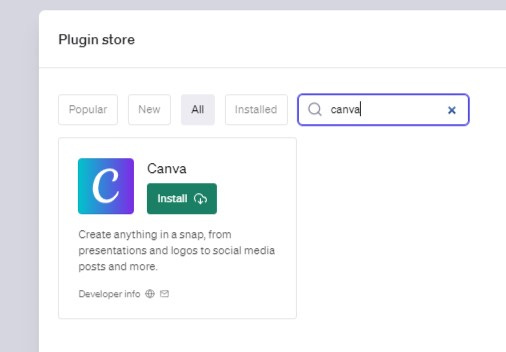

5. Canva launches an official ChatGPT plugin

Canva just released a plugin for ChatGPT that makes finding appropriate design templates easier.

You’ll find it in the Plugin store:

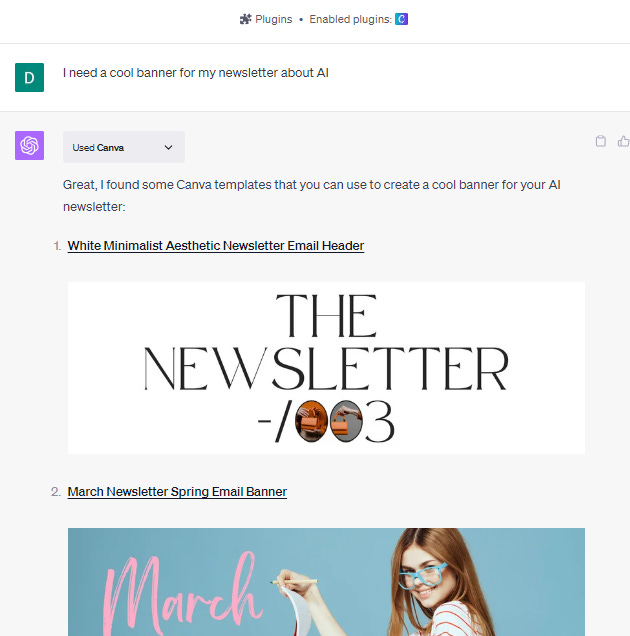

Once installed, ChatGPT will automatically lean on the Canva plugin whenever your query is related to graphics, like so:

Clicking on a suggestion will take you directly to Canva for further editing:

Now, if you’re already a regular Canva user, you probably won’t see much value in this plugin in its current form.

All it really does is sort through the Canva template library and pull the most relevant suggestions based on your prompt.

But it’s great for non-visual folks like yours truly. I can now get quick inspiration directly in the ChatGPT interface without needing to head to Canva.

I look forward to seeing whether Canva will release more options for the plugin (e.g. editing templates using natural language).

🛠️ AI tools

Today I’ll focus on AI-powered video apps.

6. Jupitrr

Jupitrr is a stupid simple way to add B-roll footage to an existing video.

You make changes and generate footage by literally editing the transcript itself.

Like so:

Jupitrr automatically injects relevant stock footage, adds charts, and more.

If you don’t mind the Jupitrr watermark on your videos, the free plan gives you 10 minutes of AI video transcription a month and unlimited access to the stock library.

7. Neural Frames

When I first talked about video interpolation in October last year, the process required quite a bit of legwork and knowledge of how Google Colabs work.

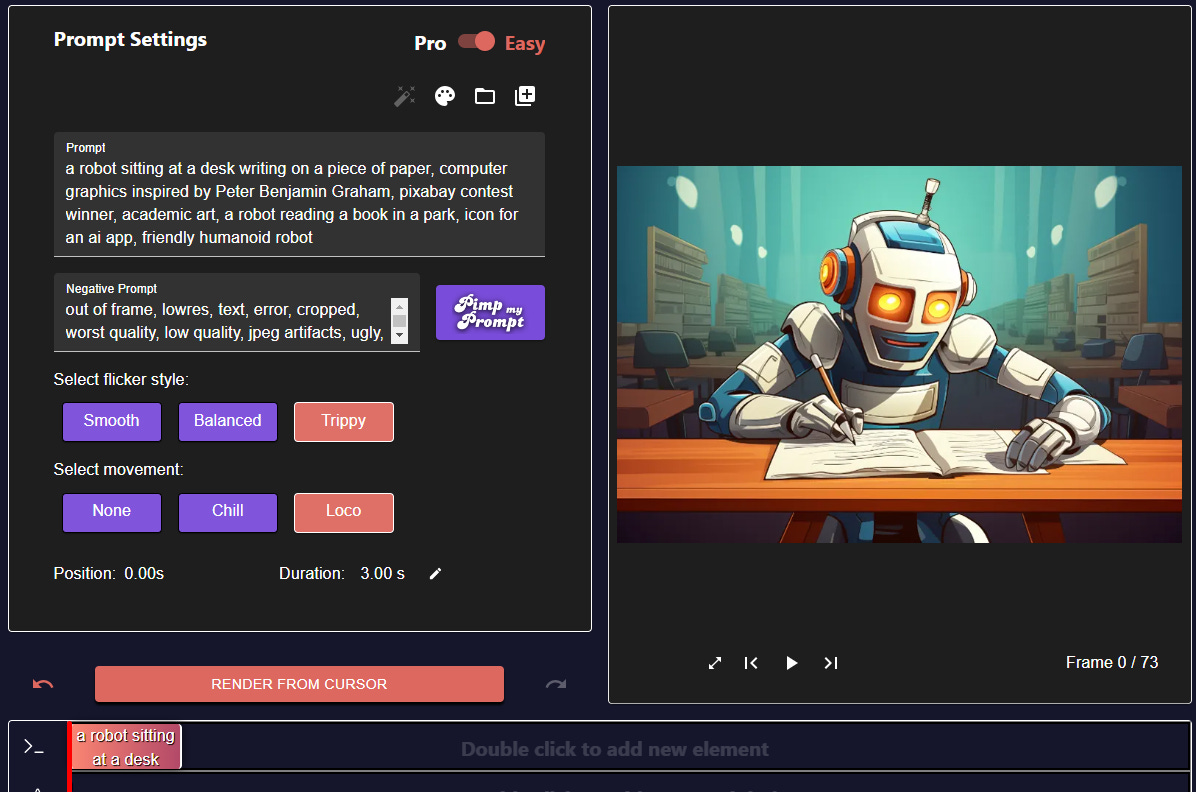

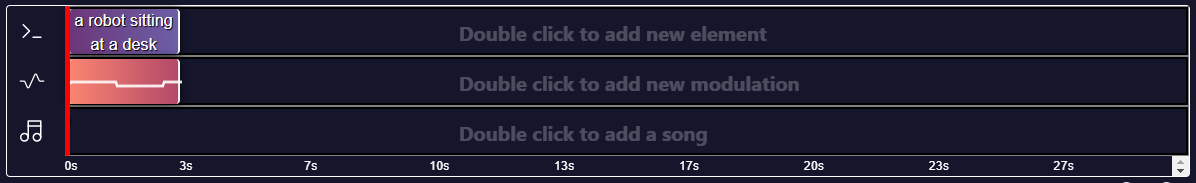

Neural Frames can make interpolated videos from any image you upload.

For my quick test, I dropped the featured image from my recent post into the tool:

As you can see, Neural Frames immediately generated a pretty accurate placeholder prompt based on the image. All I did was crank up the settings to “Trippy” and “Loco,” which resulted in these three seconds of absolute madness:

It’s definitely possible to do something far more purposeful. There’s even a robust editor that lets you string video snippets together, as well as add modulations and music tracks:

The free plan gives you six seconds of interpolated video a month, which is just enough for a quick taste of what it can do.

8. Invideo AI

Invideo AI can generate an entire video from a single text prompt. This includes:

Writing the script

Creating a voiceover using text-to-speech

Finding and adding relevant stock footage

Selecting appropriate music

Putting all the elements together

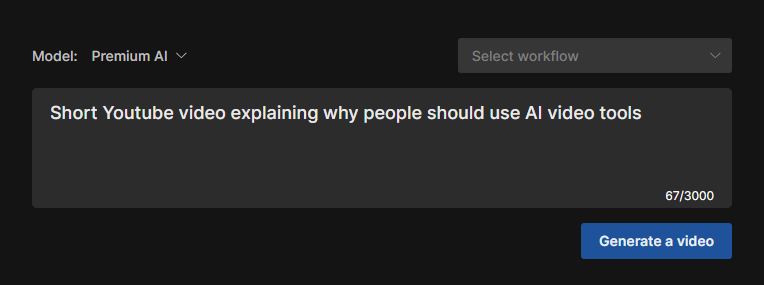

To test it, I tried the following prompt:

Invideo AI then asked me for just a bit more input:

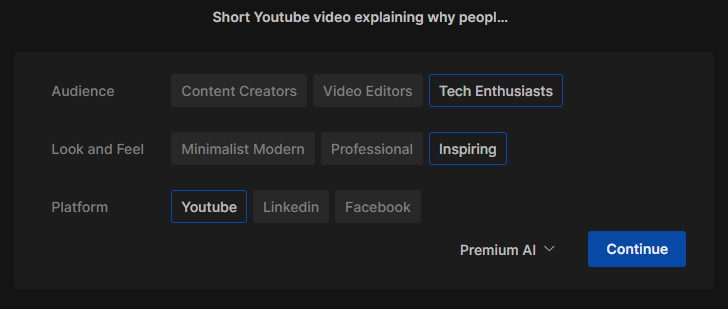

After about a minute, it spit out this complete video:

To be fair, the video definitely needs quite a bit more work and refinement, if only to remove the haunting images of soulless robots.

Thankfully, Invideo AI lets you directly edit the script, pick different stock footage, ask AI to make further changes, and so on.

It’s free to try if you don’t mind all the watermarks.

💡 AI tip

Here’s this week’s tip.

9. Remix old-school Midjourney images with Vary (Region)

Midjourney Version 5 is pretty damn great.

At the same time, many people enjoy the less polished, painterly feel of older iterations like V3.

But why choose?!

You can actually do pretty cool mashups by inpainting things into V3 images using newer versions.

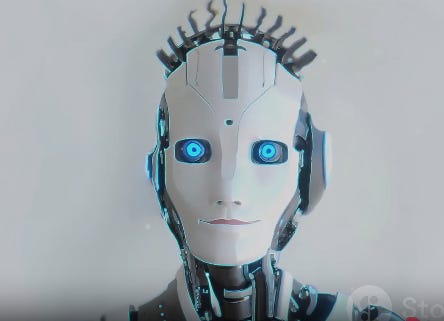

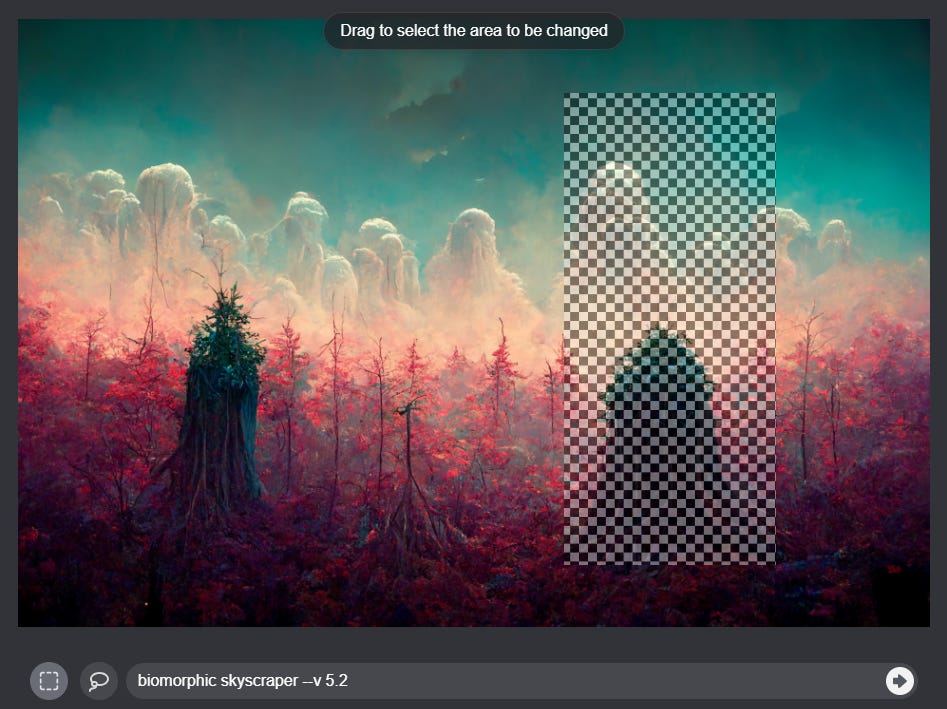

Say you have this V3 image of a surreal forest:

You can now use the Vary (Region) button to ask for a V5.2 addition, like so:

The end result is something that keeps your old image as a base but seamlessly incorporates a more polished object:

Try it!

I would love to see what cool results you get.

Thanks to Shane McGeehan for inspiration for this tip.

🤦‍♂️ 10. AI fail of the week

Midjourney insists this is a “focus group.” Live and learn.
