We’re doing it again, folks!

The improved “Search” mode in ChatGPT hasn’t been out for a second, and we’ve already buried Google.

Man, how I wish we’d cut this out.

Google’s been killed more times than Kenny from South Park.

I also don’t see why ChatGPT’s overhauled search is treated like a revolution.

Perplexity has been around for two years.

Granted, ChatGPT has better name recognition.

And yes, having an AI-powered search that integrates with the rest of ChatGPT’s growing suite of features is super convenient.

But let’s stop treating this launch like OpenAI just single-handedly invented whatever the modern equivalent of sliced bread is.

Venting aside, I absolutely get the appeal of AI-powered search.

It truly is a promising new paradigm.

But I think we’re overlooking an important side effect of this fundamentally different way of getting information.

Let me try to explain.

The appeal of AI search

Look, I get it.

We’ve all grown increasingly tired of Google.

From the ever-expanding amount of ads crammed down our throats to the general “enshittification” of search results, we’re craving something better.

In contrast to Google and other traditional search engines, AI-powered search promises lots of exciting changes:

Unstructured queries: Unlike Google et al., AI search lets us move from keyword-based queries to asking broader, more complex questions.

Personalized results: AI search engines adapt to our requests instead of feeding us generic lists of top results for the same topic.

Many sources at once: AI can almost instantly parse and synthesize multiple sources of information to provide a coherent and structured summary.

No ads (for now): The clean and uncluttered interface of an AI search engine feels like a breath of fresh air. (I wouldn’t bet on this ad-free model sticking around though.)

Hell, even Google is embracing the shift and expanding its “AI Overviews” to more countries.

So AI search is on track to become the new normal.

And that might be an issue.

AI search and the illusion of knowing

You know what’s frustrating about Google’s long list of search results?

It forces us to manually visit each site ourselves.

Ugh!

But on the other hand…it forces us to manually visit each site ourselves.

That way, we get to know if the information is legit or simply your uncle’s unhinged Tumblr ramblings.

AI-powered search—by its very nature—removes this critical interaction between the reader and the source.

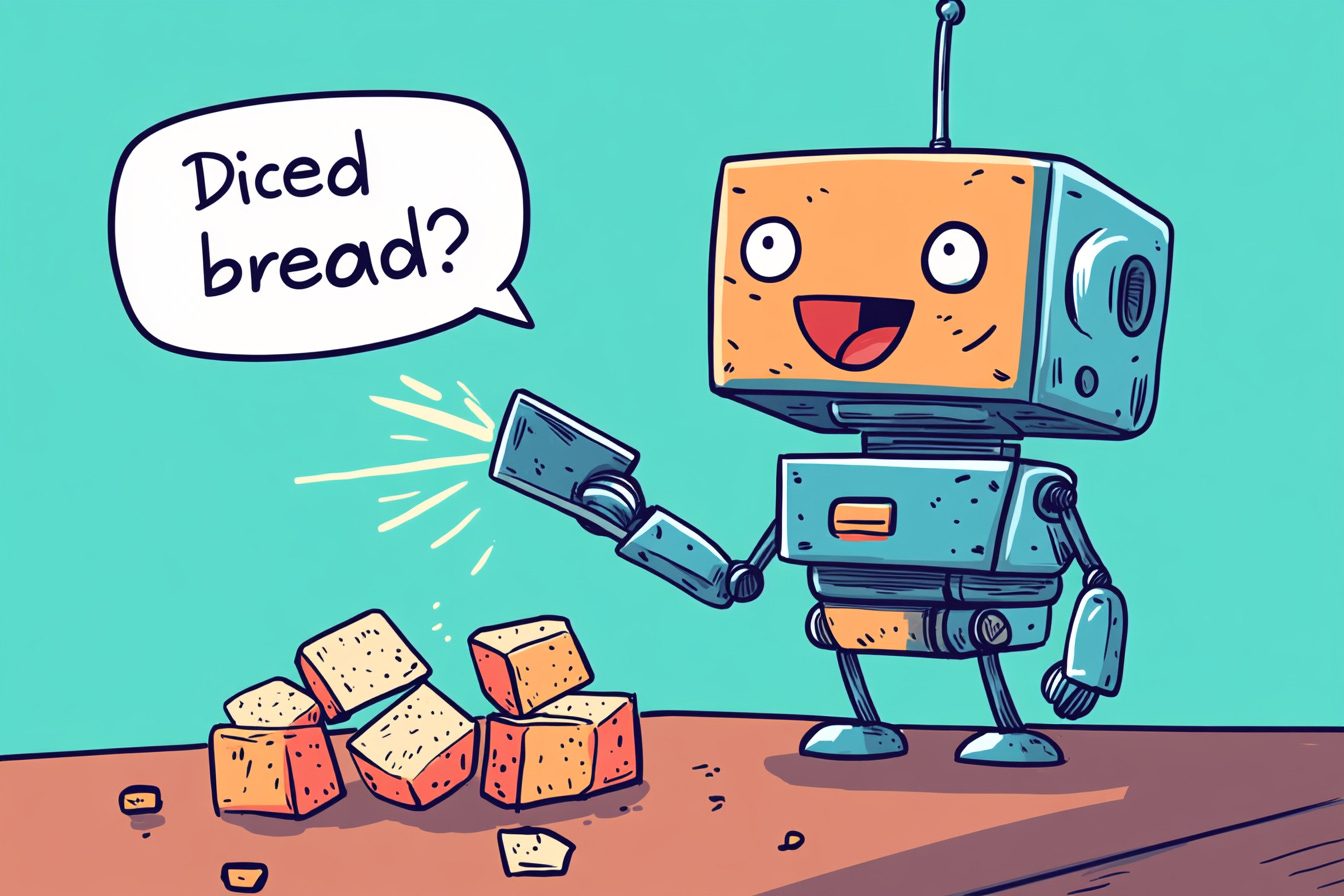

“Uh, Daniel, you do know that both Perplexity and ChatGPT search provide sources for their answers, right?”

I do, my dear imaginary friend.

Here are some counter-questions:

How many people proactively click links to sources in an article?¹

When you come across information on social media, are you in the 23% who verify it? (Some estimates are even lower, at just 14%.)

When asked where you got your facts, how often do you simply say “I saw it on Facebook / TikTok / [insert your social media platform of choice]”?

By some estimates, at least 59% of Twitter users have shared stories without ever reading them.

Most people will only read the headline of a news article.

I think you get the picture.

“Chill, bro,” you say, “I might not click every link, but I’ll always super-duper-triple-check the facts when they look fishy.”

First off, ease up on the whole “bro” thing, pal. You don’t know me like that.

Second, that’s exactly the point: You might not even know that anything’s off.

It’s easy enough to spot glaring errors for general knowledge topics.

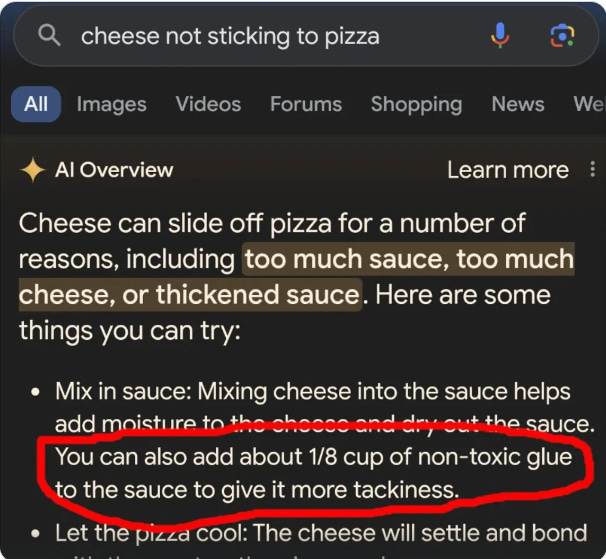

When Google’s AI mistakenly tells you to add glue to your pizza sauce, you don’t have to be a nutritionist to call bullshit:

But what if it’s something more subtle and insidious, like ChatGPT citing a non-existent legal case or a specialized chatbot providing harmful eating disorder advice?

Look, we’re already too busy to check every source.

The Internet gave us information overload and a fragmented patchwork of sites to pull our facts from.

Navigating it all can be a nightmare.

We’re desperate for a way to cut through the noise and get fluff-free, no-nonsense answers.

So what happens when the information in front of us has been conveniently synthesized and neatly presented by a seemingly all-knowing and objective machine?

Will our first instinct be to doubt it or to welcome it?

How many will bother double-checking the individual sources, let alone the entire premise of a handy AI summary?

How many people who aren’t AI enthusiasts are even aware of inherent biases in AI models or that LLMs make up plausible-sounding bullshit?

And even if AI summaries are magically 100% accurate, what will be the long-term effect of us settling for condensed AI snippets instead of doing our own deep research?

My fear is that, at least for a while, AI search will only amplify the Dunning-Kruger effect, giving us the illusion of knowledge by pacifying us with a seemingly authoritative filter between ourselves and the source.

On the other hand, we’re adaptable creatures and have previously adjusted to new ways of absorbing information. We’ll figure it out, and our habits will evolve to account for AI’s limitations.

Right?

Maybe I’m overreacting and we should just embrace the arrival of AI-powered search.

But then again, that’s what ChatGPT would want me to think, isn’t it?

🫵 Over to you…

What’s your take? Am I underestimating our collective wisdom? Will we easily figure out how to navigate the new search paradigm?

Or is it time to panic?!

Leave a comment or drop me an email at whytryai@substack.com.

¹ For instance: How many of you checked this footnote? And now that you did, how many of you clicked the link in this article that ended up Rickrolling you? Thought so.
