10X AI (Issue #47): Llama 3, Reka Core, VASA-1, and a Hedge-Hog

Sunday Showdown #7: Gemini 1.5 Pro vs. GPT-4 Turbo: Who writes the best flash fiction?

Happy Sunday, friends!

Welcome back to 10X AI: a weekly look at generative AI that covers the following:

AI News + AI Fail (free): I highlight nine news stories of the week and share an AI photo or video fail for your entertainment.

Sunday Showdown + AI Tip (paid): I pit AI tools against each other in solving a specific real-world task and share a hands-on tip for working with AI.

So far, I've had image models create logos, AI song makers produce short jingles, sound-effect generators compete on AI-generated sound effects, LLMs tell jokes, Pika and Runway go head-to-head on lip-syncing, and Udio and Suno battle it out with AI songs.

Upgrading to paid grants you instant access to every Sunday Showdown + AI Tip and other bonus perks.

Let’s get to it.


🗞️ AI news

Here are this week’s AI developments.

1. Meta starts rolling out its Llama 3 models

What’s up?

Meta has just released the first two models of its new Llama 3 family.

Why should I care?

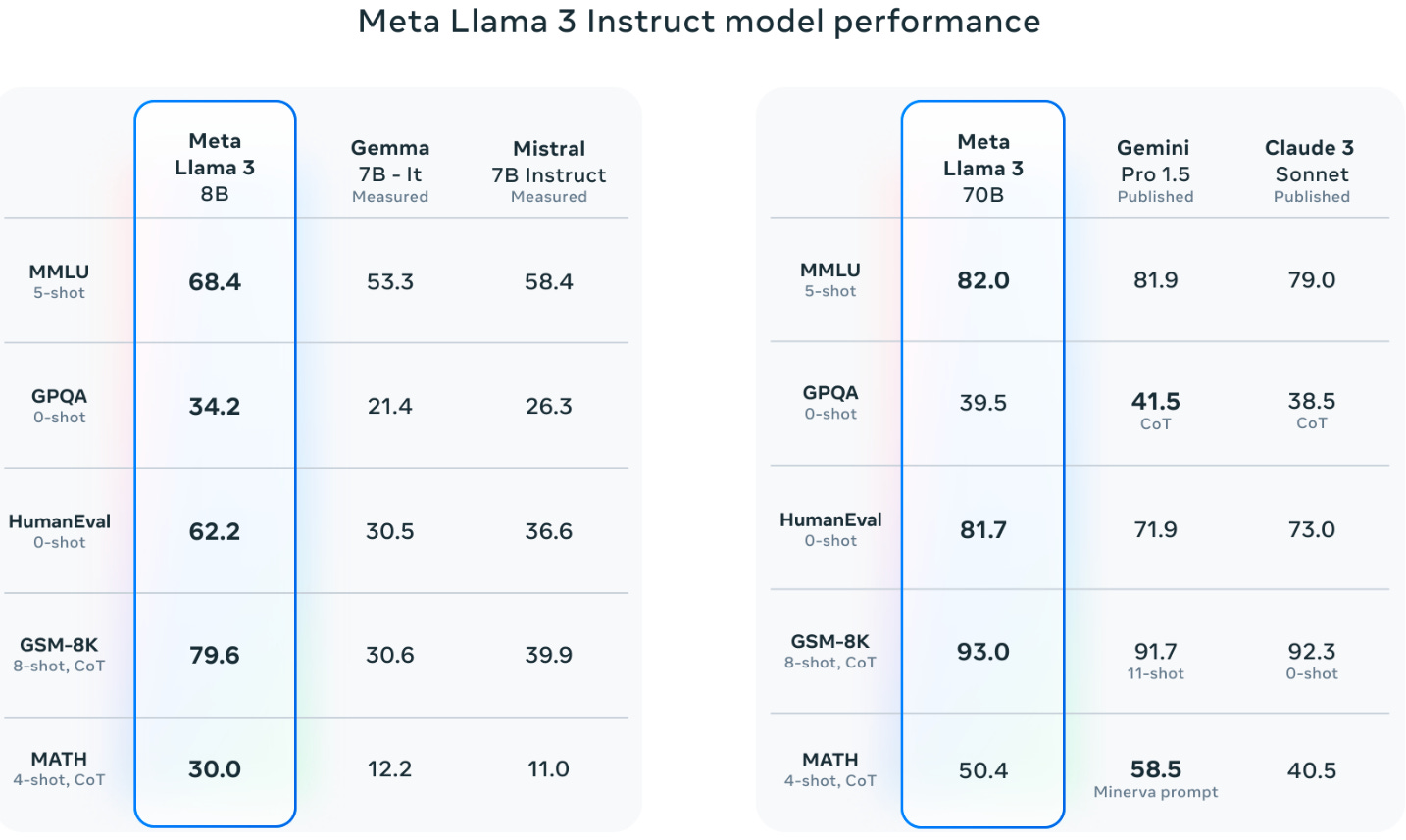

Meta continues to push the limits of open-source LLMs. The two smaller models, Llama 3 8B and 70B, are currently the best in their size class, according to Meta's benchmarks. Llama 3 70B gives Claude 3 Sonnet and Gemini 1.5 Pro a run for their money. Meta is also training larger models (the largest has over 400B parameters) and expects to release them in the coming months.

Where can I learn more?

Read the official announcement post.

Visit the official Llama 3 page.

Request to download the model.

Try chatting to Llama 3 at Meta.ai.

2. Reka Core can process images, audio, and video

What’s up?

Reka AI, the maker of Reka Flash, has launched its most capable multimodal model yet: Reka Core.

Why should I care?

Reka Core boasts top-tier performance on multimodal benchmarks, going head-to-head with the big guns.

In addition to text, Reka Core accepts images, audio, and video as input and, like its smaller “Flash” cousin, speaks 32 languages.

Where can I learn more?

Read the official announcement post.

Follow the official announcement thread on Twitter / X.

Explore the Reka Core showcase.

Chat with Reka Core (requires sign-up).

3. VASA-1 can animate images using audio clips

What’s up?

Microsoft researchers showcased VASA-1, which combines images and audio clips to create believable talking avatars.

Why should I care?

Hey, remember Alibaba’s EMO? Well, forget EMO! VASA-1 is outright scary. Given just a single image of a person paired with an audio clip, VASA-1 generates a realistic talking-head video of that person. Optional controls let you adjust the speaker’s emotion, camera view, and more. Understandably, Microsoft has no plans to release the model at this time.

Where can I learn more?

Read the VASA-1 project page with more demo videos.

4. New AI features coming to Adobe Premiere Pro

What’s up?

Adobe plans to add new AI features to its Premiere Pro video-editing software.

Why should I care?

If you edit your videos in Adobe Premiere Pro, you’ll soon get new AI tools to play with. You’ll be able to automatically add and remove objects in a video, extend a video shot using image-to-video models, or generate new B-roll footage from text. Adobe is also working to incorporate third-party video models like Pika, Runway, and Sora into the workflow.

Where can I learn more?

Read the official announcement post.

5. Grok-1.5V can better understand the real world

What’s up?

xAI has just upgraded Grok 1.5 with visual capabilities, calling the new version Grok-1.5V.

Why should I care?

Grok-1.5V is becoming competitive with existing multimodal models on many benchmarks. It’s also better at seeing and understanding the real world, at least according to the new RealWorldQA benchmark introduced by xAI itself. (How convenient!)

Where can I learn more?

Read the official announcement post.

6. Stable Diffusion 3 can now be accessed via API

What’s up?

Stability AI is making Stable Diffusion 3 and Stable Diffusion 3 Turbo available on its Developer Platform.

Why should I care?

Developers can now integrate Stable Diffusion 3 into their tools and apps through the Developer Platform API, which Stability AI offers in partnership with Fireworks AI. Stability AI also expects to make Stable Diffusion 3 more broadly available soon.

Where can I learn more?

Read the official announcement post.

Check out SD3 on the Stability AI Developer Platform.

7. Amazon Music takes a page out of Spotify’s book with AI playlists

What’s up?

One week after Spotify introduced AI playlists, Amazon is doing the same with “Maestro.”

Why should I care?

Just as with Spotify, you can ask Maestro to create a playlist for you with a single text prompt. It even accepts emojis, so go ahead and see what a “🚽🪠” playlist sounds like. Maestro is available in beta to a small subset of US users, with more to follow.

Where can I learn more?

Read the official announcement post.

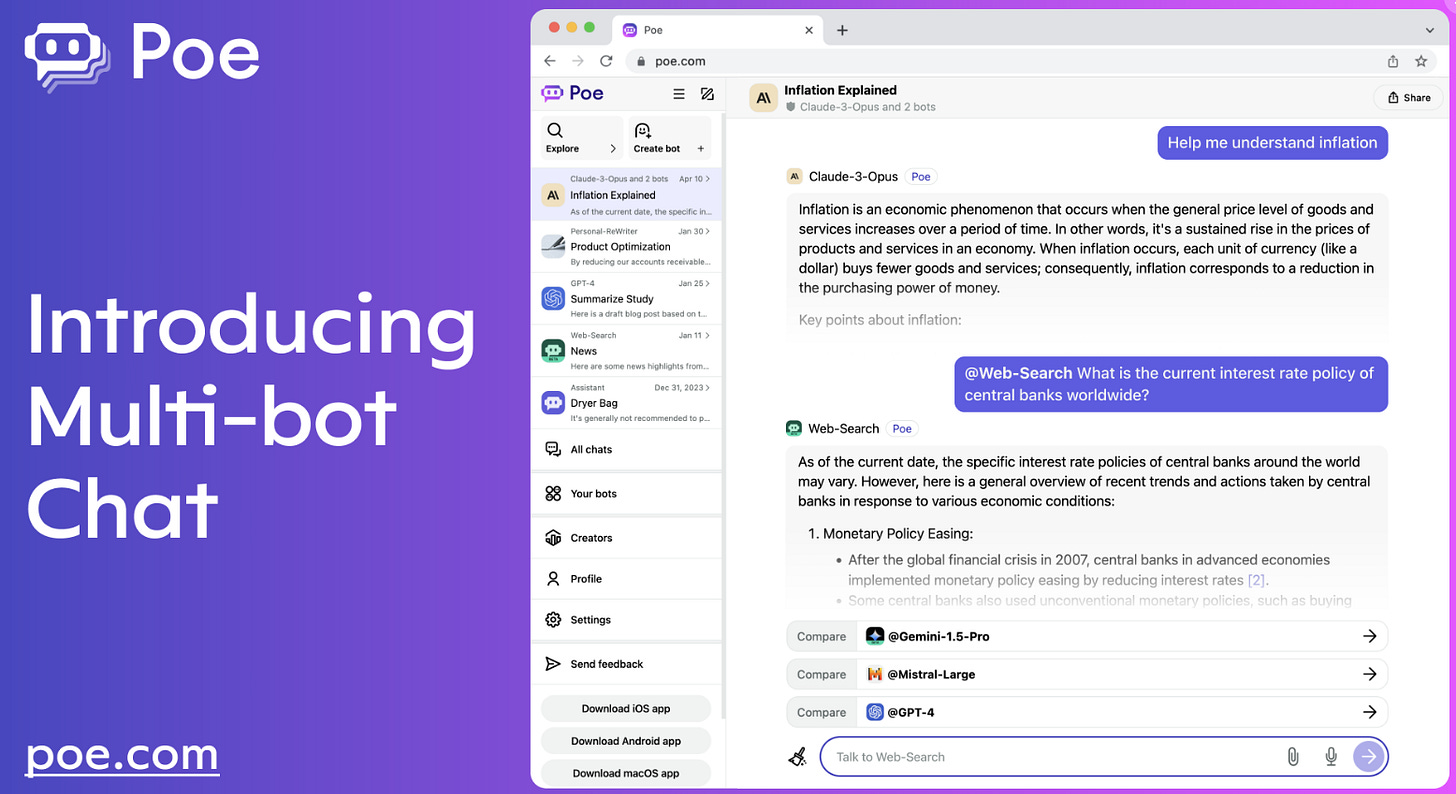

8. Poe lets you chat to many bots at once

What’s up?

Poe just launched a multi-bot chat feature, which lets you get responses from many AI models in a single thread.

Why should I care?

Each large language model has its own strengths. Being able to call upon the best-suited model for a specific query is extremely convenient. You no longer have to switch chats and figure out ways to carry the context with you. Multi-bot chat also lets you easily compare how different models respond to the same prompt, so you get to learn more about them simply by chatting (an approach I strongly encourage).

Where can I learn more?

Read the official announcement post by Adam D’Angelo (Quora CEO).

9. Meta brings free AI to the masses

What’s up?

Llama 3 is now available on multiple platforms in more countries than before, as is Meta’s Emu image model.

Why should I care?

Meta’s newest Llama 3 model—likely the best LLM you can currently get for free—is already powering its AI assistant, which you can invoke on Facebook, Messenger, WhatsApp, and Instagram. In addition to the US, people in over a dozen countries can now access it.

Meta’s Emu image model can now also generate images in real time as you type. You can even create a short looping animation of your favorite image.

Where can I learn more?

Read the official announcement post.

Try chatting to Llama 3 on the web.

🤦‍♂️ 10. AI fail of the week

I wanted a giraffe, hedgehog, and pig in a meadow. I got more than I asked for.

Anything to share?

Sadly, Substack doesn’t allow free subscribers to comment on posts with paid sections, but I am always open to your feedback, so feel free to message me.

⚔️ Sunday Showdown (Issue #7) - Gemini 1.5 Pro vs. GPT-4 Turbo: Which one can write the best microfiction?

I enjoy writing flash fiction every now and then.

(The very first post I resurrected is a microfiction story of mine called “Nightmare.” If you’re bored, it makes for an ultra-quick Sunday read.)

That got me curious: Can LLMs write compelling microfiction given a few fun constraints?

Why don’t we go ahead and find out: