10X AI (Issue #40): Stable Diffusion 3, Groq, Google's Gemma, and a Snake Genie

PLUS: Adobe Acrobat AI Assistant, reviews and ratings in GPT store, Phind-70B, AI in Windows Photos, Lexica's Aperture V4, and "Help me write" in Chrome.

Happy Sunday, friends!

Welcome to 10X AI: a weekly look at generative AI news, tools, and tips for the average user.

On this 40th-week anniversary issue, I’m shifting to a condensed and structured news format with curated links to relevant external resources for each entry.

This is in preparation for a paid-only segment coming to 10X AI next week. If you’re a paid subscriber, stay tuned!

🗞️ AI news

Here are this week’s AI developments.

1. Stable Diffusion 3 catches up to DALL-E 3 and Midjourney V6

What’s up?

Stability AI announced the next iteration of their text-to-image model: Stable Diffusion 3.

Why should I care?

Stable Diffusion 3 runs on a new “diffusion transformer architecture” and can reliably spell and handle detailed multi-subject prompts. Judging by preview images from Stability AI, the text rendering and quality are on par with DALL-E 3 and Midjourney V6.

Where can I learn more?

Read Stability AI’s official announcement.

Sign up for the waitlist.

Watch the initial impressions by Olivio Sarikas:

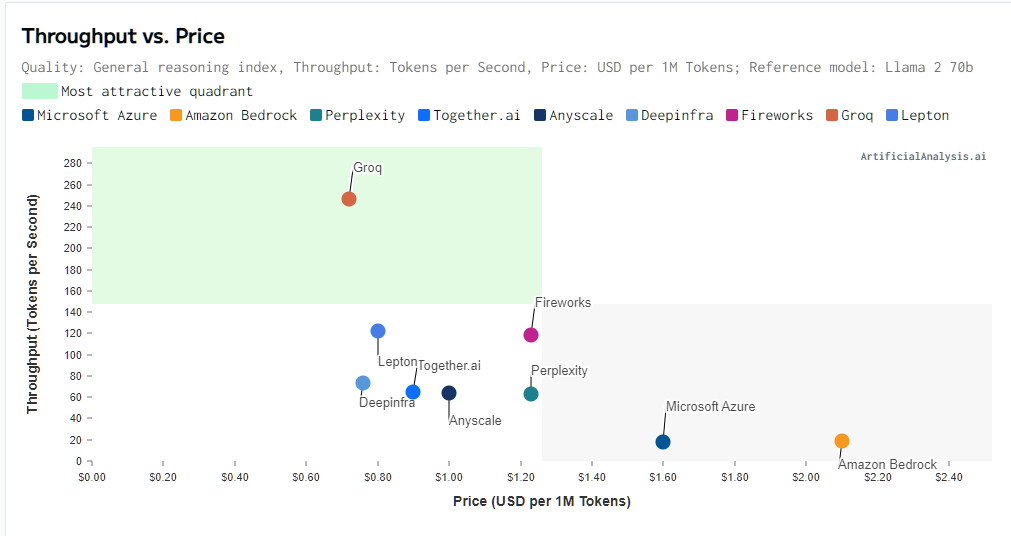

2. Groq makes LLMs go vroom

What’s up?

Groq makes specialized chips called Language Processing Units (LPUs), built for running AI models.

Why should I care?

Large language models running on Groq’s chips are an order of magnitude faster than on traditional chips (Microsoft Azure = 18 tokens/second, Groq = 247 tokens/second).

Where can I learn more?

Visit Groq’s official site.

Try Groq for yourself (using Mixtral 8x7B or Llama 2).

Watch the explanation by This Day in AI:

3. Google’s high-performing open models

What’s up?

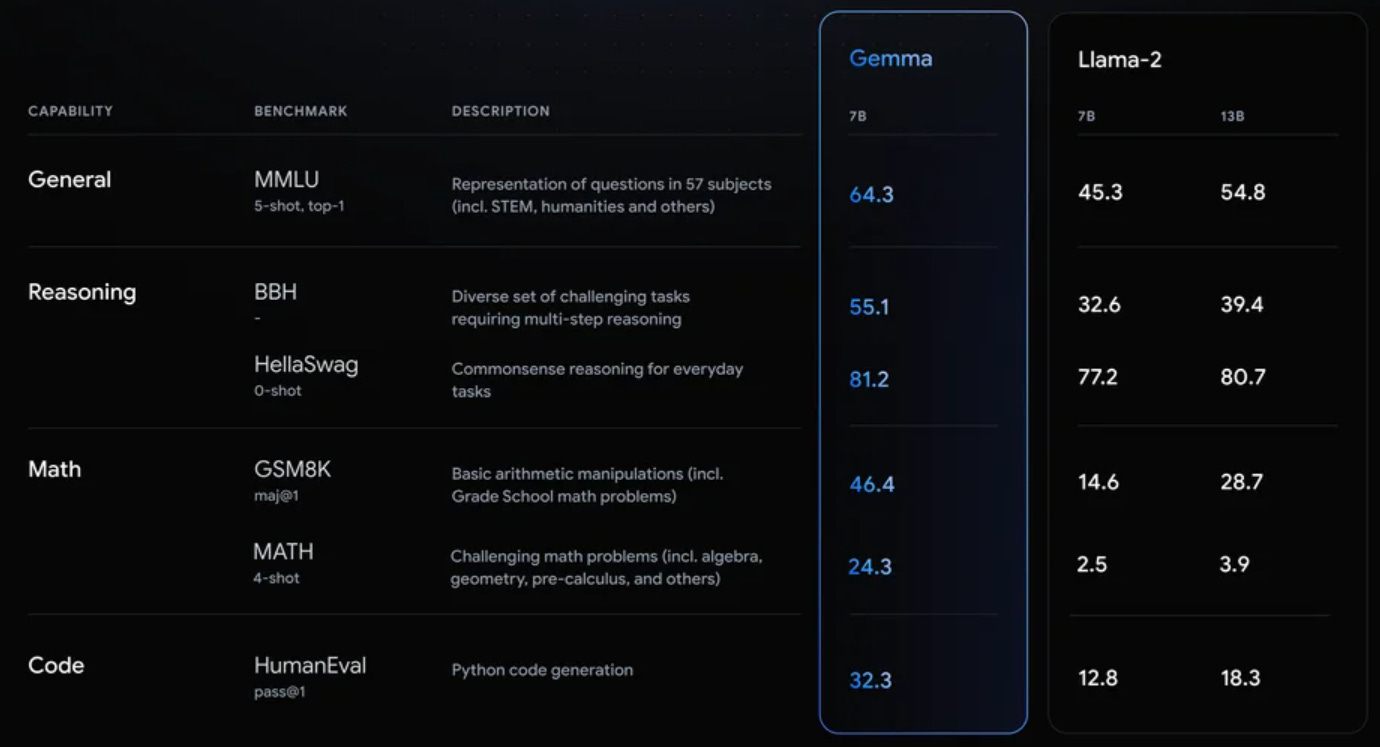

Google released two lightweight open models called Gemma 2B and Gemma 7B.

Why should I care?

Both models offer best-in-class performance on LLM benchmarks compared to similarly sized models like Llama 2. (Although some early impressions aren’t kind.)

Where can I learn more?

Read Google’s official announcement post.

Visit the official Gemma site.

Watch the intro by Sam Witteveen:

4. Adobe Acrobat launches an AI Assistant

What’s up?

It’s yet another “Chat with your PDF” app, but now from the makers of the PDF format.

Why should I care?

If you’re an Adobe Acrobat user, you no longer need third-party apps to enable AI chatbots for your PDFs. Adobe AI Assistant can help summarize documents, create outlines, and more.

Where can I learn more?

Read the announcement by Adobe.

Watch this “Sales Research” use case demo:

5. OpenAI adds review features to the GPT store

What’s up?

OpenAI added the option for people to rate GPTs and provide feedback to creators.

Why should I care?

It’s now easier for users to find the best-rated GPTs while giving GPT builders useful insights about their performance. It’s a step toward turning the current GPT space into more of a full-fledged store.

Where can I learn more?

Read OpenAI’s official tweet.

Explore GPTs and their ratings (you’ll need a ChatGPT Plus account).

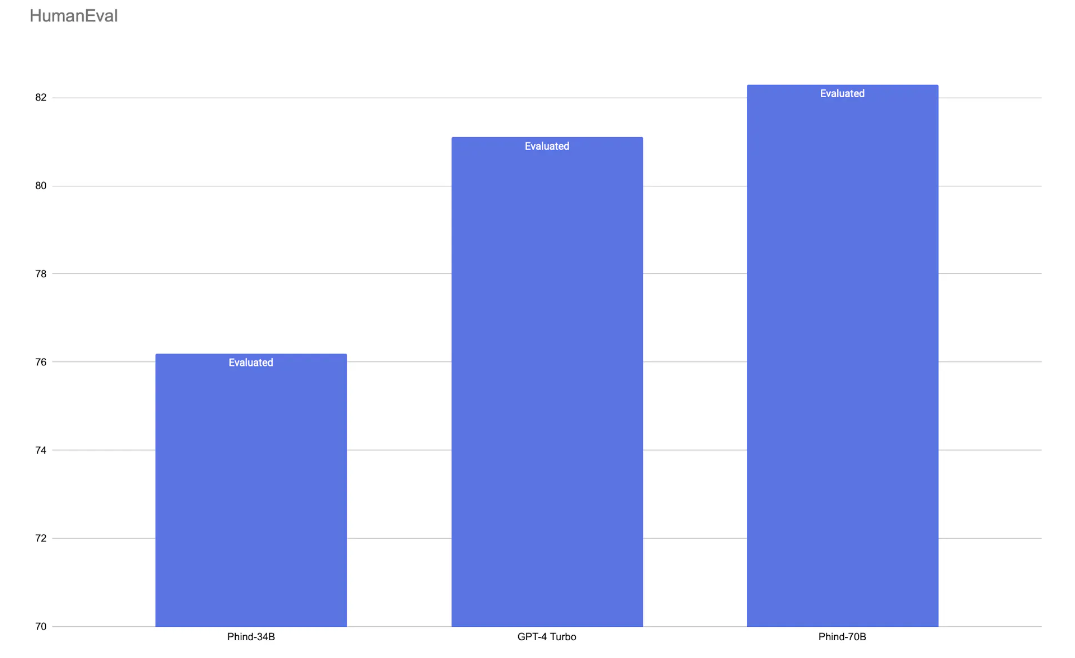

6. Phind-70B is a better coder than GPT-4 Turbo?

What’s up?

Phind released a new open-source model called Phind-70B based on Code-Llama-70B and optimized for coding and technical tasks.

Why should I care?

Phind-70B outperforms or approaches GPT-4 Turbo across multiple coding benchmarks and supports a context window of 32K tokens. It’s also faster, at 80+ tokens per second compared to GPT-4 Turbo’s 20 tokens per second.

Where can I learn more?

Read the announcement by Phind.

Chat with Phind-70B for free.

Watch this walkthrough by World Of AI:

7. AI has come for your Windows Photos

What’s up?

The Windows Photos app is getting a few AI-assisted editing features.

Why should I care?

Windows users will have an easier time making minor edits to photos, including the ability to blur, remove, or replace the background as well as a “Spot fix tool” that lets you select and seamlessly remove specific objects.

Where can I learn more?

Read the official announcement on Windows Blogs.

8. Lexica’s Aperture V4 is pretty in 4K

What’s up?

Lexica just released the next iteration of their text-to-image model: Aperture V4.

Why should I care?

Aperture V4 offers better output quality and generates images in near-4K resolution right out of the box. You can use it to create images at no cost at Lexica.com.

Where can I learn more?

Read the announcement tweet by Sharif Shameem (founder).

Try Aperture V4 for free on Lexica’s site.

9. Get help writing on any site when using Chrome

What’s up?

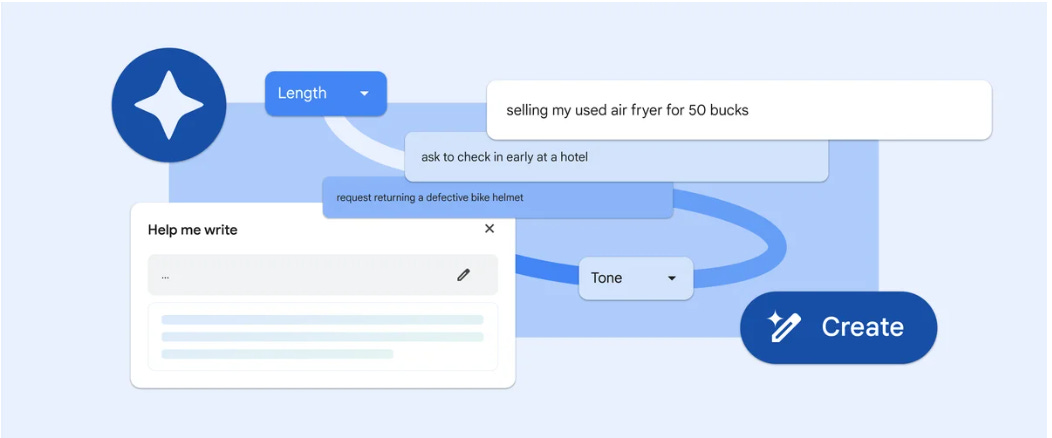

The “Help me write” feature in Chrome (announced a month ago) is now available to US users.

Why should I care?

Once activated, you should be able to call on the power of Gemini to help you write on any site or online tool that has a text box. (Go to Settings > Experimental AI and enable “Help me write.”)

Where can I learn more?

Read the official announcement from Google.

Learn how to enable the feature on Google Chrome Help.

🤦‍♂️ 10. AI fail of the week

Ah, yes, Serpent Genie! Exactly how I remember him!

Previous issue of 10X AI:

10X AI (Issue #39): Open AI's Sora, Google's Gemini 1.5, Stable Cascade, and a "Hand Ox?"


I like the format!

I am pretty sure I've been using the Microsoft image editing "spot fix" tool for a couple of months now. I was actually surprised to find it when I edited an image, and I've only used it twice so far, but it works well and does make image editing way easier.