Want Better AI Images? Ask a Chatbot!

How to use LLMs to brainstorm scene ideas, remix pictures, and more.

Happy Thursday, digital dingos!

Almost exactly[1] a year ago, I wrote about “splatterprompting.”

That’s the practice of filling text-to-image prompts with dubious descriptors like “64K, HD, hyperrealistic, award-winning, masterpiece, best, epic, legendary, godlike, Captain-Marvelly”…you get the picture.

The gist of my argument was: “Stop that.”

My sentiment still holds.

Today, in line with the “connecting the dots” pledge I made last week, I want to demystify text-to-image prompting for those who still think it’s a complex and incomprehensible science.

Follow me, and you’ll see just how simple it can be…[2]

Image models have gotten way better

In my now-one-year-old article, I made the case for using natural language instead of splatterprompting:

I reasoned that image models would only get better at creating pictures based on straightforward inputs.

Now, I’m not one to toot my own horn, but *TOOT*

My prediction has come true in at least two major ways:

1. Image models make better images now

Hey, remember this meme from late 2022?

Oh, how we laughed!

Anyway, here’s Midjourney V6:

Yup.

Text-to-image models are that good now. People with three limbs and 17 fingers are largely a thing of the past.[3]

This means you no longer have to guide a model by telling it you want “a hand with five fingers” or using negative prompts like “low-res, bad anatomy, blurry, out of frame, deformed, mutated hands,” etc. (These are all real, by the way.)

To wit, here are two results for the simple one-word prompt “Dog”:

Can you guess which one is Midjourney Version 1 and which one is Version 6?

(Hint: V6 is the one that doesn’t look like a Cronenbergian horror.)

Midjourney V6 doesn’t need a detailed specification of how many eyes dogs have to return a polished, fully realized image.

Instead, you can use the prompt space to focus on vivid descriptions of the exact scene you want.

This brings us to…

2. Image models understand you better now

The launch of DALL-E 3 marked a new era in just how many instructions and descriptors a text-to-image model can reliably handle.

Turns out it’s “many.”

From DALL-E 3 to Google’s Imagen 2 to Midjourney V6, the current generation of image models can successfully respond to long, elaborate scene descriptions written in natural language.

That’s great news for you: You can finally stop chasing “advanced prompts” all over the Internet.

Nowadays, an “advanced prompt” is just you telling the model what you want it to draw in as many words as are necessary to convey your vision.

“But Daniel,” you counter, “this assumes I’m able to describe my vision accurately and exhaustively. In case you haven’t noticed, I’m not exactly Hemingway!”

I know, my hypothetical straw man friend.

I also no word so good sometimes.

Luckily, we have another AI tool to help our word-challenged selves…

Enter chatbots

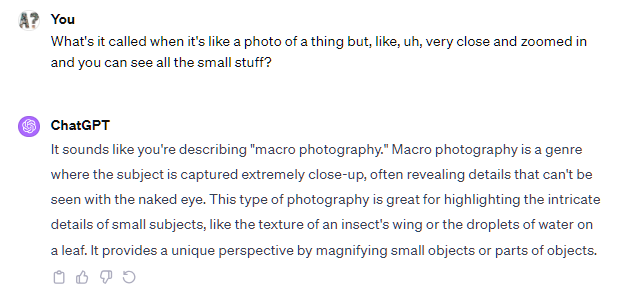

Unlike many of us, chatbots powered by large language models always know the right terminology and are pretty great with descriptions.

If you don’t know what “macro photography” is, they have your back:

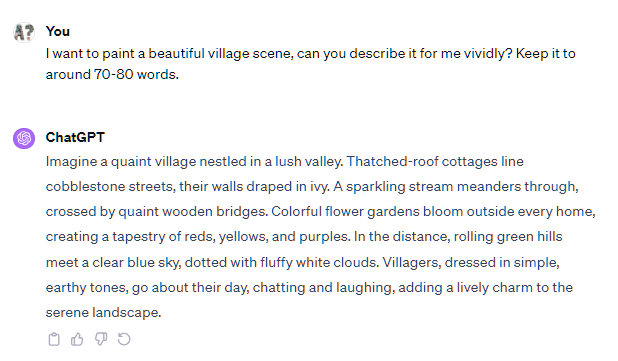

If you aren’t inspired enough to describe an interesting scene, they have your back.

This is perfect for anyone who wants to experiment with image models but doesn’t know where to start.

Chatbots are the ideal middleman between you and a text-to-image model, as expressed by this technical formula (see if you can follow):

Image models that understand natural language

+

LLMs that can describe scenes using natural language

=

Good things!

With that in mind, let’s explore a few specific ways to use AI chatbots in combination with text-to-image tools.

What chatbots can help with

For demo purposes, I’ll be using ChatGPT to generate prompts and Midjourney V6 to create images.

But the takeaways are generally applicable.

You can use your chatbot of choice and plug the resulting prompts into your preferred image model. (Don’t have one? I covered seven text-to-image models a month ago, and most of them are free to use.)

For an all-in-one experience, you have two great options:

Paid: If you’re a ChatGPT Plus user, you can discuss what you want and generate images in the same chat using DALL-E 3.

Free: You can replicate virtually the same experience with Microsoft Copilot, which is also powered by GPT-4 and DALL-E 3.

Off we go!

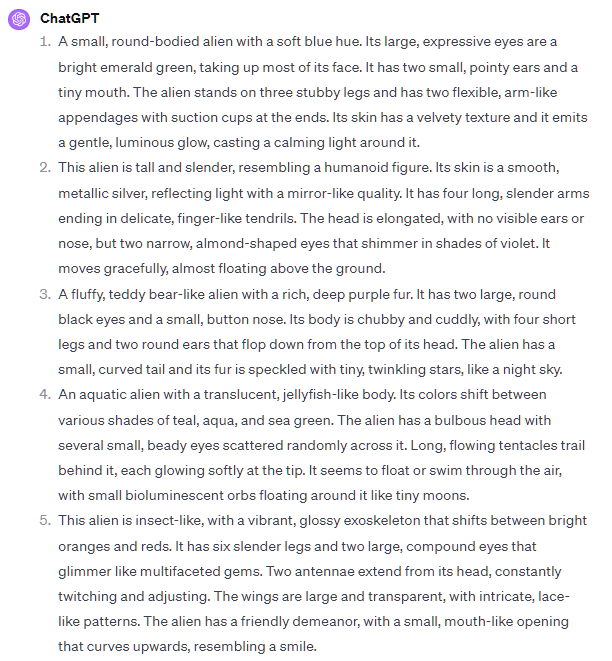

1. Brainstorming scene ideas

Let’s say I want to make a kid-friendly alien but don’t know the exact look I’m going for. I ask ChatGPT to do something like this[5]:

I want to draw a cute alien for my kids, but I don't know what it should look like. Please give me five very different descriptions of about 80 words each. Aim for different styles and color palettes for each. Don't start your descriptions with commands like "Imagine" or "Create." Instead, use words sparingly to describe only the visual elements that can be captured in a single image.

ChatGPT happily obliges:

I’m tempted to clean the descriptions up a bit (e.g. “Tall, slender alien” instead of “This alien is tall and slender”).

But to prove my “keep it simple” point, I copy-pasted ChatGPT’s descriptions directly into Midjourney V6. Here are the three most kid-friendly results:

All images reflect most of the major prompt elements, with minor exceptions.
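If you'd rather not copy each description out by hand, the step above can be roughed out in a few lines of Python. This is only a sketch: the function name `split_descriptions` is my own, and it assumes the chatbot returns a numbered list where each item starts on its own line with a marker like "1." or "2)". Your chatbot's formatting may differ.

```python
import re


def split_descriptions(reply: str) -> list[str]:
    """Split a numbered chatbot reply into one description per item.

    Assumption: each item starts on its own line with "1.", "2)", etc.
    """
    # Split at line starts that look like a list marker; chunk 0 is any preamble.
    chunks = re.split(r"(?m)^\s*\d+[.)]\s+", reply)
    # Drop the preamble, then collapse line breaks and extra spaces in each item.
    return [" ".join(chunk.split()) for chunk in chunks[1:] if chunk.strip()]


# Placeholder reply for illustration, not ChatGPT's actual output.
reply = """Here are some ideas:
1. This alien is tall and slender, with mint-green skin.
2. A round, fuzzy purple creature
   with three big eyes.
"""

for prompt in split_descriptions(reply):
    print(prompt)
```

Each printed line is then ready to paste into your image model as its own prompt.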

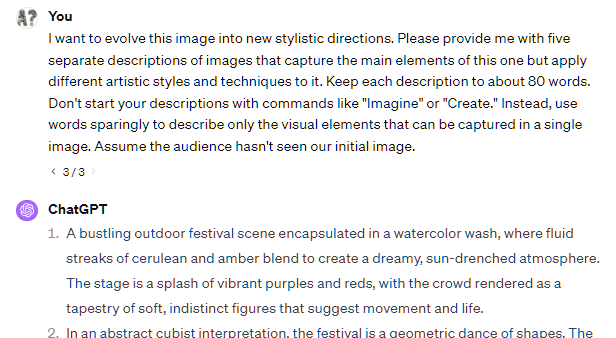

Now let’s imagine I know the exact subject I’m going for but am not sure how best to capture it. ChatGPT to the rescue:

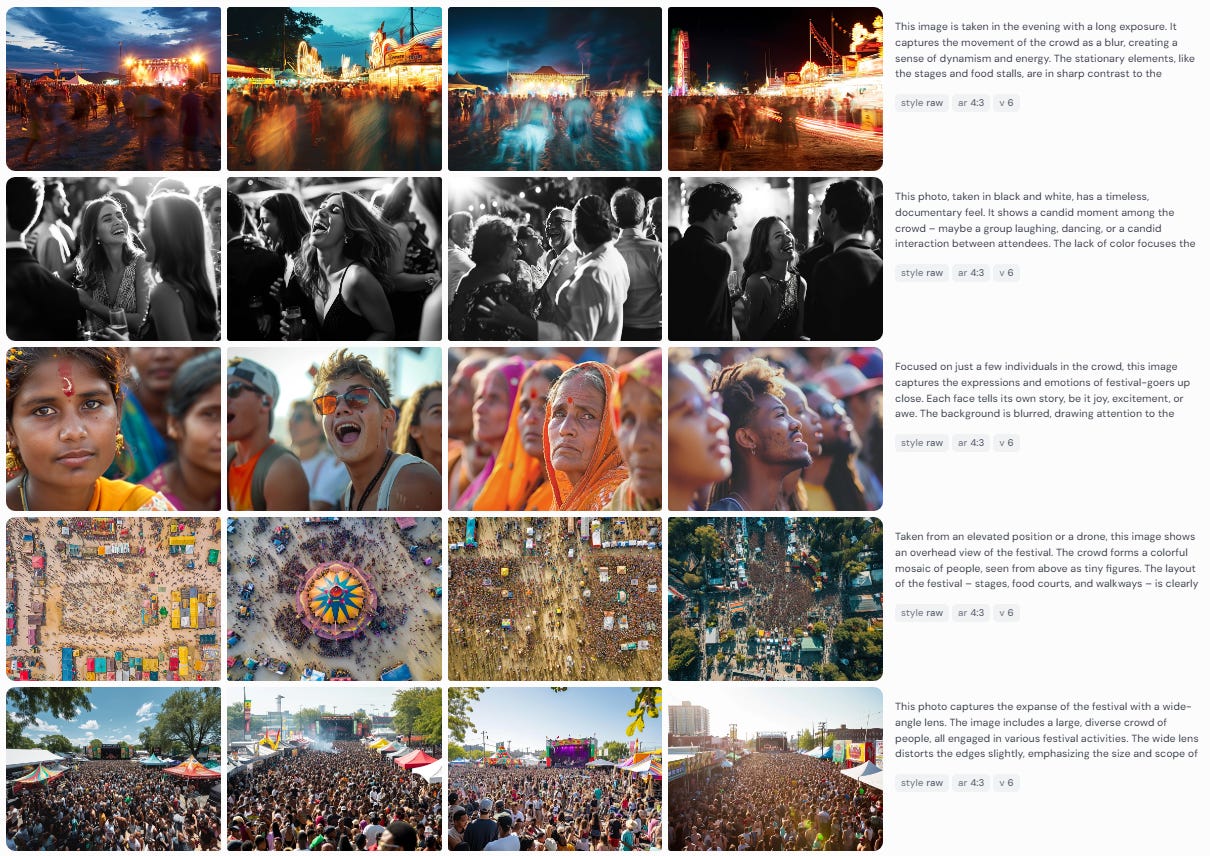

I'd like to make a photograph of a crowd at an outdoor festival, but I'm not sure of the best way to do it. Please describe five separate images that use different styles of photography, camera angles, and more. Keep each description around 80 words and only describe what's seen in the image.

Here’s what I get:

Once again, you’ll want to tighten up the descriptions yourself or ask ChatGPT to do so. But even if you don’t, check out what Midjourney gives me on the very first try:

Here are three handpicked images:

You can use this approach to brainstorm anything from painting types to visual styles to art materials.

Give it a go and let me know how this works for you.
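The brainstorming prompts in this section follow the same basic shape: a goal, a number of descriptions, a word count, and what should vary between them. If you reuse that shape a lot, a tiny template can save typing. Treat this as a minimal sketch; the function `brainstorm_prompt` and its parameter names are mine, not part of any tool.

```python
def brainstorm_prompt(goal: str, count: int = 5, words: int = 80,
                      vary: str = "styles and color palettes") -> str:
    """Build a brainstorming request modeled on the alien prompt above."""
    return (
        f"{goal} "
        f"Please give me {count} very different descriptions of about {words} words each. "
        f"Aim for different {vary} for each. "
        'Don\'t start your descriptions with commands like "Imagine" or "Create." '
        "Instead, use words sparingly to describe only the visual elements "
        "that can be captured in a single image."
    )


print(brainstorm_prompt(
    "I want to draw a cute alien for my kids, but I don't know what it should look like."
))
```

Swap in your own goal and change `vary` to something like "photography styles and camera angles" to get the festival-style request instead.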

2. Mimicking an image or style

For this, you’ll need a multimodal LLM with image recognition.

If you’re using ChatGPT Plus or Microsoft Copilot (my recommendations above), you’ll be fine: Both of them can see and analyze images.

Start by uploading your image. You’ll typically find the “Upload a file” button to the left of the chatbot’s text input. Here’s ChatGPT:

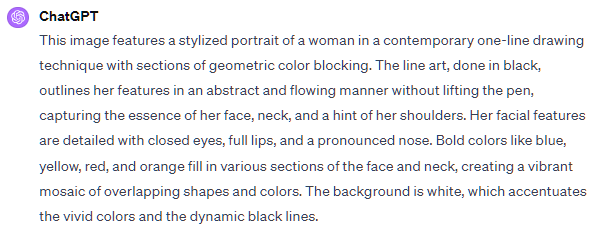

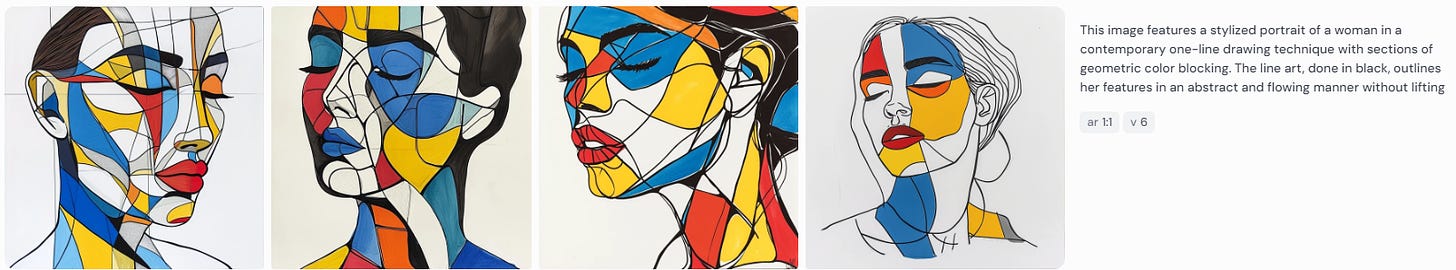

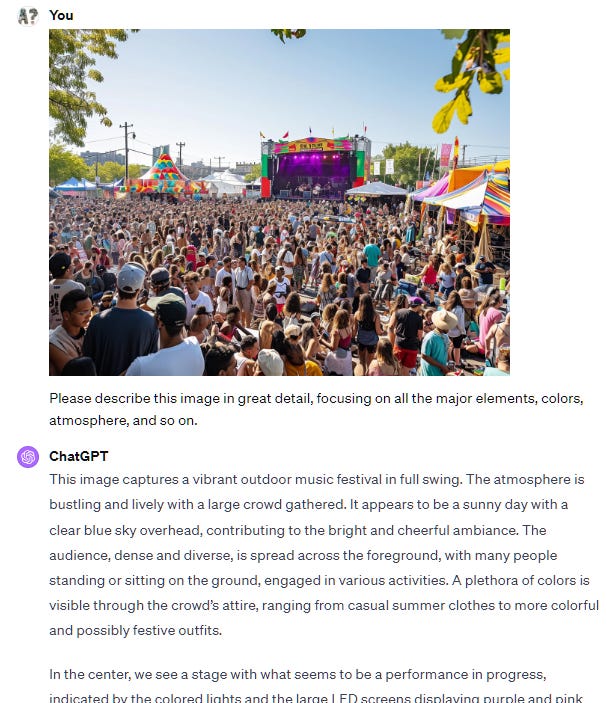

I picked this image from Midjourney’s public showcase:

Then I asked this:

I want to create an image similar to this one. Please describe it as accurately as possible, in a way that would let an artist recreate it. Keep your description to a single paragraph of about 80 words.

ChatGPT responded with:

Again, even without refining ChatGPT’s wordiness, we get pretty damn close:

If you like the style itself but want a different subject, you can try this:

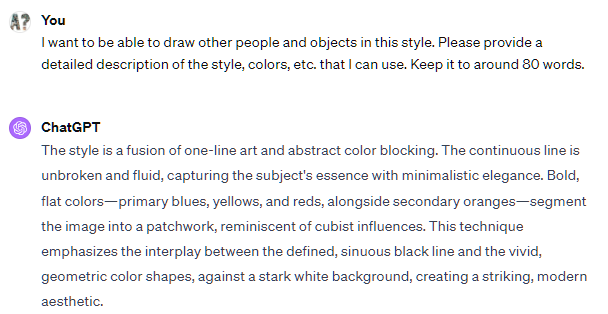

I want to be able to draw other people and objects in this style. Please provide a detailed description of the style, colors, etc. that I can use. Keep it to around 80 words.

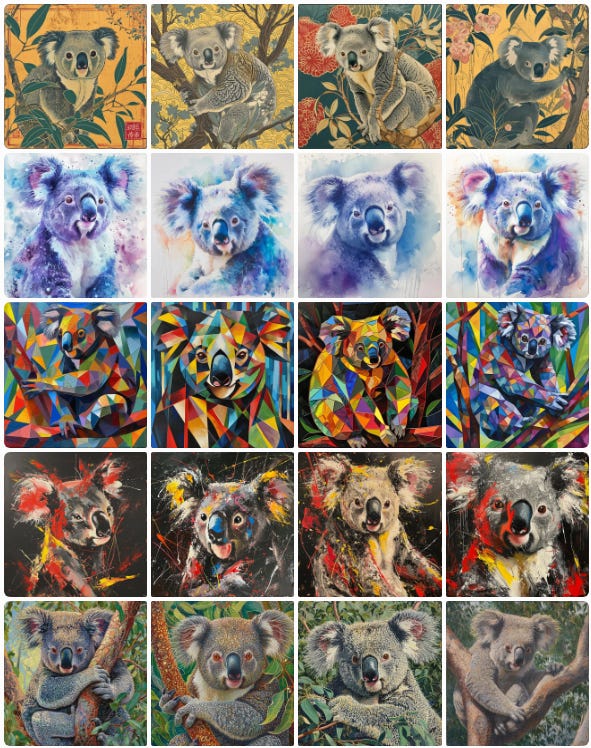

Here’s a portrait of a koala using the exact style description above (I just replaced “the style is a” with “koala portrait,”):

As you can see, even without transforming ChatGPT’s first draft into better, cleaner descriptions, Midjourney gives us what we need.
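The subject swap I did for the koala is just a text substitution, so it's easy to repeat for other subjects. Here's a hedged sketch: `apply_style` is a made-up helper, and it assumes the style description opens with the words "The style is a" (yours might not, in which case it simply puts the subject first).

```python
def apply_style(style_description: str, subject: str,
                opener: str = "The style is a") -> str:
    """Swap the opening words of a style description for a new subject."""
    if not style_description.lower().startswith(opener.lower()):
        # Opener not found: fall back to leading with the subject.
        return f"{subject}, {style_description}"
    # Keep everything after the opener and put the subject in its place.
    return f"{subject},{style_description[len(opener):]}"


# Placeholder text for illustration, not ChatGPT's actual output.
style = "The style is a bold flat illustration with thick outlines and warm colors."
print(apply_style(style, "Koala portrait"))
```

Call it again with "Lighthouse at dusk" or any other subject to reuse the same style description.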

3. “Remixing” an image

The final fun thing to try is to reimagine an existing image. It’s a combination of the two uses above. You’ll also need an LLM with image recognition for it.

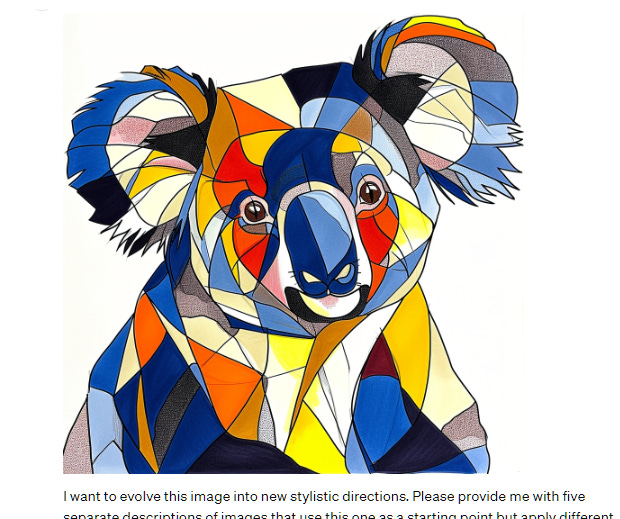

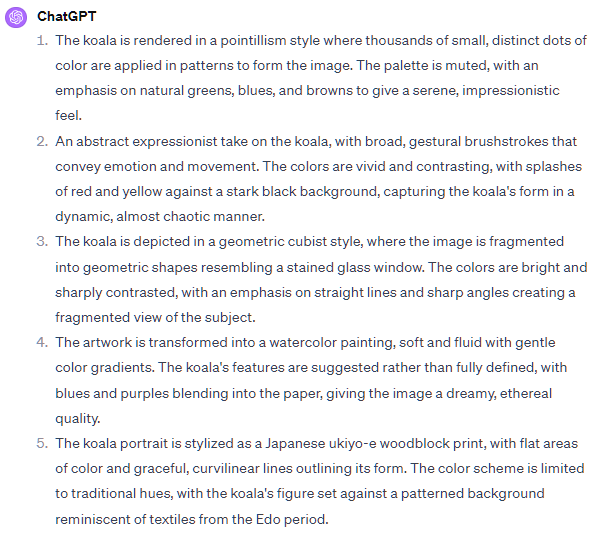

Start by uploading your source image, as shown above. In my case, I used our koala with the following prompt:

I want to evolve this image into new stylistic directions. Please provide me with five separate descriptions of images that use this one as a starting point but apply different artistic styles and techniques to it. Keep each description to about 80 words. Don't start your descriptions with commands like "Imagine" or "Create." Instead, use words sparingly to describe only the visual elements that can be captured in a single image.

Here’s ChatGPT:

As before, I plugged the unedited results into Midjourney to get a range of styles:

Here are a few close-ups:

This was a relatively simple case, because “koala” is easy enough to communicate even without ChatGPT’s help.

For more complex scenes, you can break the process down into two steps.

1. Ask ChatGPT to exhaustively describe the initial image

Here’s what I used:

Please describe this image in great detail, focusing on all the major elements, colors, atmosphere, and so on.

2. Ask for the remixed versions

I used a slightly tweaked version of the “koala” prompt:

Here’s the “watercolor wash” version:

There you have it.

You now have three cool ways to use the power of large language models to help you make better images with text-to-image tools.
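For the curious, the two-step remix process for complex scenes can be written down as a tiny chain: describe first, then ask for variations. The sketch below makes several assumptions. `ask` is a hypothetical stand-in for whatever sends a message to your chatbot (with the image already attached) and returns its reply, and the wording of the second request is my own adaptation of the koala prompt, not the exact text I used.

```python
from typing import Callable


def remix_prompts(ask: Callable[[str], str], count: int = 5, words: int = 80) -> str:
    """Run the two-step remix: describe the image, then request variations."""
    # Step 1: get an exhaustive description of the starting image.
    description = ask(
        "Please describe this image in great detail, focusing on all the "
        "major elements, colors, atmosphere, and so on."
    )
    # Step 2: ask for remixes, quoting the description back (an assumption;
    # in a single chat the chatbot would already have it in context).
    return ask(
        f"Here is a description of an image:\n{description}\n\n"
        f"Please provide me with {count} separate descriptions of images that use "
        "this one as a starting point but apply different artistic styles and "
        f"techniques to it. Keep each description to about {words} words."
    )


# A fake chatbot so the sketch runs without any account or API key.
def fake_ask(message: str) -> str:
    return f"[reply to: {message[:40]}...]"


print(remix_prompts(fake_ask))
```

Passing the chatbot in as a plain function keeps the sketch independent of any particular service. Replace `fake_ask` with your own function once you know how you want to reach your chatbot.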

Over to you…

I hope that gave you some inspiration. Can you think of other interesting ways to use the chatbot + text-to-image model combo?

Leave a comment or shoot me an email at whytryai@substack.com.

[1] No, you’re an oxymoron!

[2] Unintended rhyme FTW!

[3] DALL-E 3 and Midjourney V6 are currently the best at prompt comprehension, but I do expect many others to catch up soon. (Imagen 2 already appears to be great at it, but it’s not yet widely available.)

[5] Please treat my prompts in this article only as inspiration. I encourage you to experiment. You’ll easily find a way to get better, cleaner descriptions.

I don't have any brilliant things to add today (most of those tend to go over at AI Jest), but I would add that image generation is really fun now. It used to be amazing, but also kind of frustrating. I think we're still in the "fun but frustrating" camp today, but sliding more and more toward getting something useful and beautiful with less additional prompting and editing.

If you have a lot of text you want to break up with images, you can also ask for suggestions, and GPT-4 is really good at giving them now.

Great article. We stumbled on getting LLMs to create image prompts for us when we were trying to automate a pipeline of work. We then 'illustrated' this idea of chaining AI tool prompts together in a project on visualizing dad jokes. For an example, see https://open.substack.com/pub/thetaonpi/p/spaghetti-images?r=2unyem&utm_campaign=post&utm_medium=web